Activation Functions for TensorFlow

ActTensor: Activation Functions for TensorFlow

What is it?

ActTensor is a Python package that provides state-of-the-art activation functions, making them quick and easy to use in deep learning projects.

Why not use tf.keras.activations?

As you may know, TensorFlow defines only a few activation functions and, most importantly, does not include newly introduced ones. Writing your own takes time and energy; this package provides most of the widely used activation functions, including state-of-the-art ones, ready to use in your models.

Requirements

numpy

tensorflow

setuptools

keras

wheel

Where to get it?

The source code is currently hosted on GitHub at: https://github.com/pouyaardehkhani/ActTensor

Binary installers for the latest released version are available at the Python Package Index (PyPI):

# PyPI

pip install ActTensor-tf

License

ActTensor is released under the MIT license.

How to use?

import tensorflow as tf

import numpy as np

from ActTensor_tf import ReLU # import the activation layer class by name

Functional API

inputs = tf.keras.layers.Input(shape=(28, 28))

x = tf.keras.layers.Flatten()(inputs)

x = tf.keras.layers.Dense(128)(x)

# apply the chosen activation layer

x = ReLU()(x)

output = tf.keras.layers.Dense(10, activation='softmax')(x)

model = tf.keras.models.Model(inputs=inputs, outputs=output)

Sequential API

model = tf.keras.models.Sequential([tf.keras.layers.Flatten(),

tf.keras.layers.Dense(128),

# apply the chosen activation layer

ReLU(),

tf.keras.layers.Dense(10, activation=tf.nn.softmax)])

NOTE:

The underlying functions of the activation layers are also available, but they may be defined under different names. Check this for more information.

from ActTensor_tf import relu

Activations

Classes and Functions are available in ActTensor_tf

Which activation functions does it support?

-

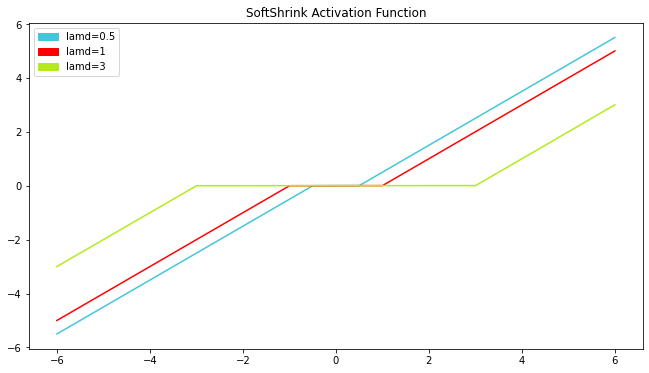

Soft Shrink:
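Soft shrinkage is usually written as $f(x) = x - \lambda$ for $x > \lambda$, $f(x) = x + \lambda$ for $x < -\lambda$, and $0$ otherwise. The sketch below is a plain-Python reference for that textbook formula, not ActTensor's own implementation; the name `soft_shrink` and the default `lambd=0.5` are assumptions made for illustration.

```python
def soft_shrink(x: float, lambd: float = 0.5) -> float:
    """Pull x toward zero by lambd; inputs inside [-lambd, lambd] become 0."""
    if x > lambd:
        return x - lambd
    if x < -lambd:
        return x + lambd
    return 0.0
```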

-

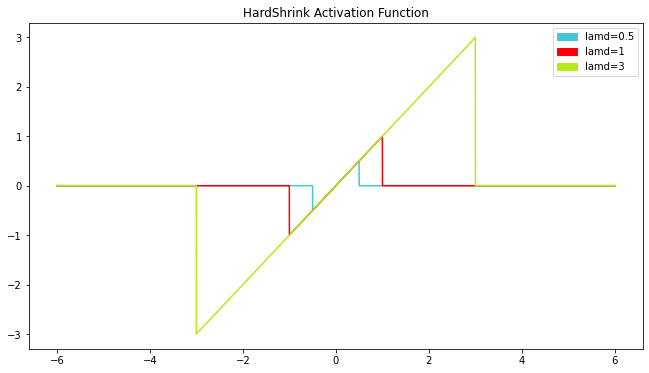

Hard Shrink:
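Hard shrinkage is usually defined as $f(x) = x$ when $|x| > \lambda$ and $0$ otherwise, so values outside the threshold band pass through unchanged. A minimal plain-Python sketch of that definition follows; `hard_shrink` and the default `lambd=0.5` are illustrative assumptions, not names taken from the package.

```python
def hard_shrink(x: float, lambd: float = 0.5) -> float:
    """Keep x when its magnitude exceeds lambd, otherwise return 0."""
    return x if abs(x) > lambd else 0.0
```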

-

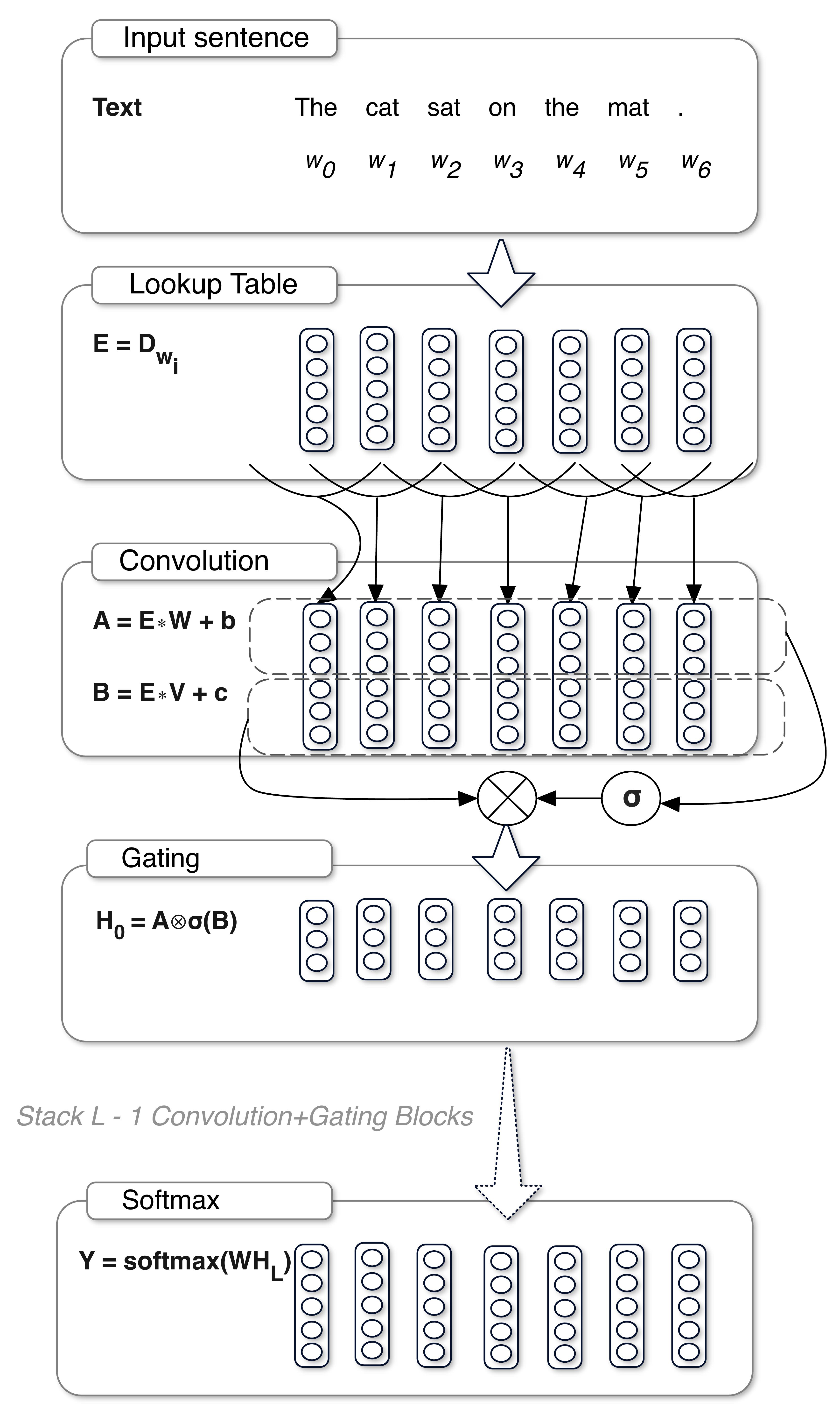

GLU:

- Bilinear:

-

ReGLU:

ReGLU is an activation function which is a variant of GLU.

-

GeGLU:

GeGLU is an activation function which is a variant of GLU.

-

SwiGLU:

SwiGLU is an activation function which is a variant of GLU.

-

SeGLU:

SeGLU is an activation function which is a variant of GLU.
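The GLU variants listed here differ only in the function applied to the gating half of the input. The sketch below illustrates that idea in plain Python under stated assumptions: the input vector is split into two equal halves `a` and `b`, the output is `a * gate(b)` elementwise, and Swish uses $\beta = 1$. The helper names (`gated_unit`, `glu`, `reglu`, and so on) are hypothetical and are not ActTensor's API. Which half is gated is a convention that may differ from the package, and SeGLU is left out because its gate is not stated here.

```python
import math
from typing import Callable, List


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z: float) -> float:
    return max(0.0, z)


def gelu(z: float) -> float:
    """Exact GELU: z times the standard normal CDF at z."""
    return 0.5 * z * (1.0 + math.erf(z / math.sqrt(2.0)))


def swish(z: float) -> float:
    return z * sigmoid(z)  # beta = 1 is an assumption


def gated_unit(x: List[float], gate: Callable[[float], float]) -> List[float]:
    """Split x into halves (a, b) and return a * gate(b) elementwise."""
    if len(x) % 2 != 0:
        raise ValueError("input length must be even")
    half = len(x) // 2
    return [a * gate(b) for a, b in zip(x[:half], x[half:])]


def glu(x: List[float]) -> List[float]:
    return gated_unit(x, sigmoid)


def bilinear(x: List[float]) -> List[float]:
    return gated_unit(x, lambda b: b)  # identity gate


def reglu(x: List[float]) -> List[float]:
    return gated_unit(x, relu)


def geglu(x: List[float]) -> List[float]:
    return gated_unit(x, gelu)


def swiglu(x: List[float]) -> List[float]:
    return gated_unit(x, swish)
```

Because the gate is the only moving part, adding another variant is a one-line change.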

-

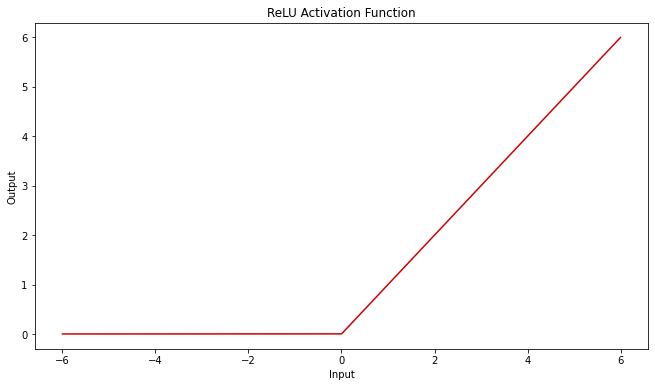

ReLU:

-

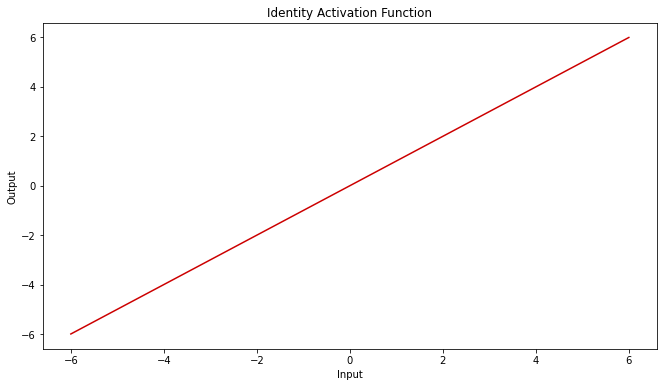

Identity:

$f(x) = x$

-

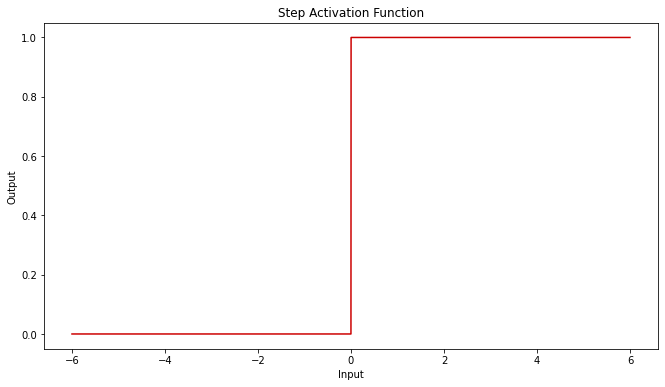

Step:

-

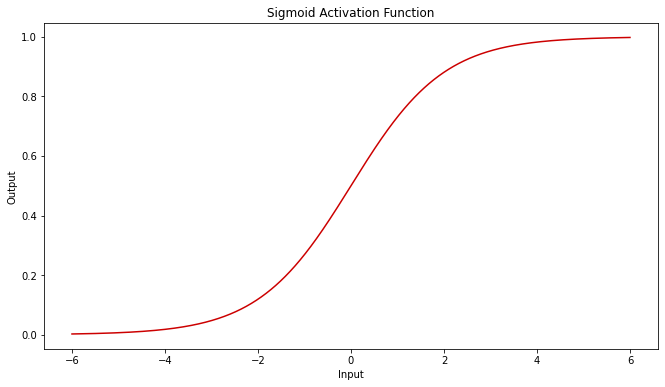

Sigmoid:

-

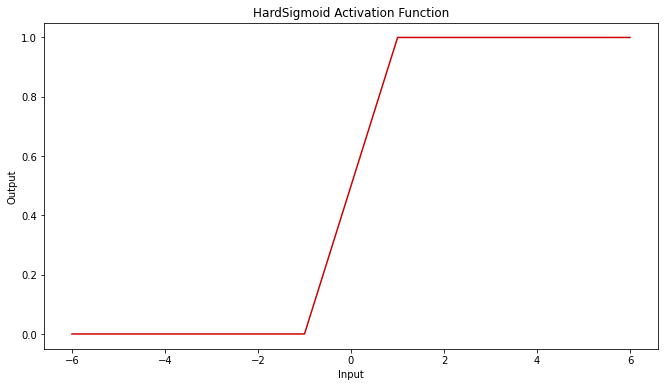

Hard Sigmoid:

-

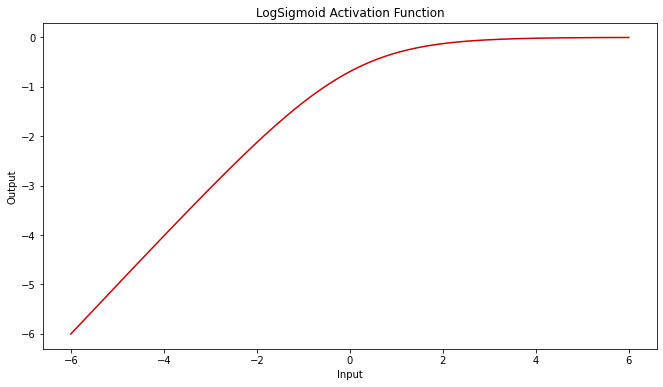

Log Sigmoid:
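Log-sigmoid is $f(x) = \log\big(1 / (1 + e^{-x})\big)$. Evaluating it literally overflows for large negative inputs, so the sketch below uses the equivalent form $\min(x, 0) - \log(1 + e^{-|x|})$. It is a plain-Python illustration of the formula, not the package's code, and the name `log_sigmoid` is an assumption.

```python
import math


def log_sigmoid(x: float) -> float:
    """log(sigmoid(x)) computed without overflow for large |x|."""
    return min(x, 0.0) - math.log1p(math.exp(-abs(x)))
```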

-

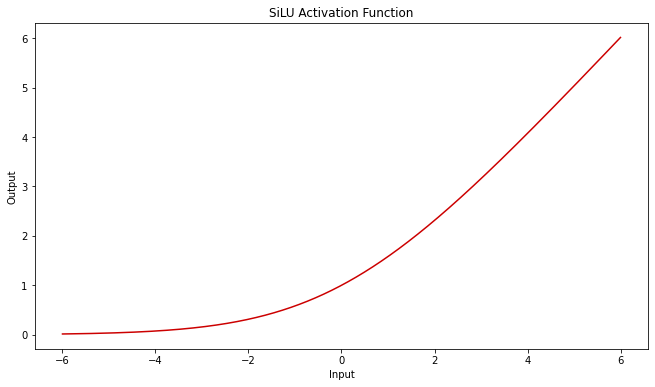

SiLU:

-

ParametricLinear:

$f(x) = a*x$

-

PiecewiseLinear:

Choose some xmin and xmax, which define the "range". Every input below this range maps to 0, every input above it maps to 1, and inputs inside it are linearly interpolated between 0 and 1.
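The description above can be sketched in a few lines of plain Python. The function name `piecewise_linear` and the default range of $[-1, 1]$ are assumptions for illustration; the package may use different names or defaults.

```python
def piecewise_linear(x: float, xmin: float = -1.0, xmax: float = 1.0) -> float:
    """0 below xmin, 1 above xmax, linear interpolation in between."""
    if xmin >= xmax:
        raise ValueError("xmin must be smaller than xmax")
    if x < xmin:
        return 0.0
    if x > xmax:
        return 1.0
    return (x - xmin) / (xmax - xmin)
```

For example, with `xmin=0.0` and `xmax=2.0`, an input of `0.5` lies a quarter of the way through the range, so the output is `0.25`.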

-

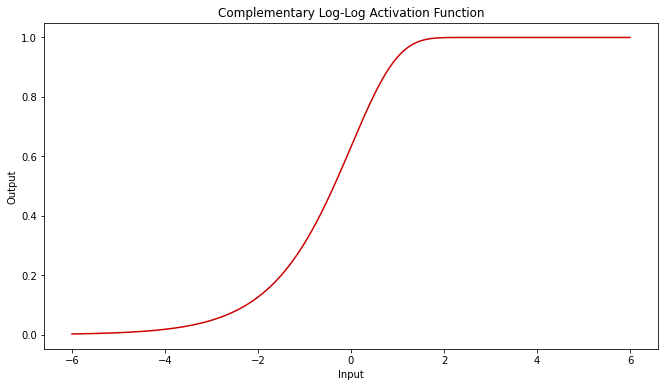

Complementary Log-Log (CLL):

-

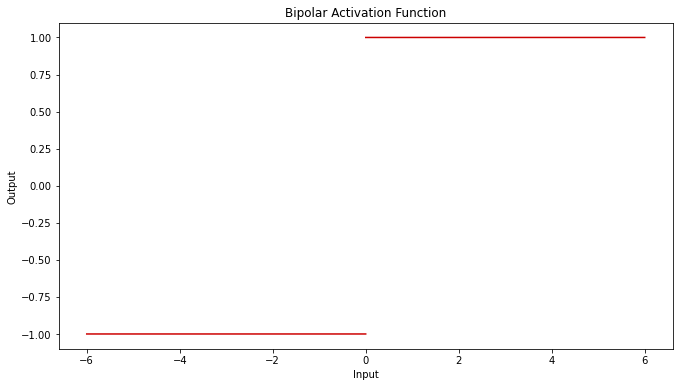

Bipolar:

-

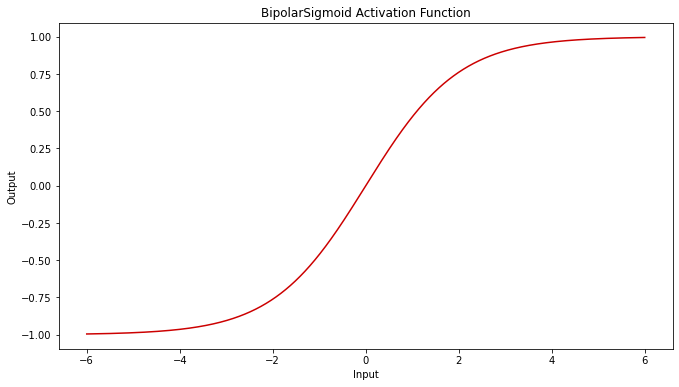

Bipolar Sigmoid:
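The bipolar sigmoid is commonly written as $f(x) = (1 - e^{-x}) / (1 + e^{-x})$, which rescales the logistic curve to the range $(-1, 1)$ and is algebraically equal to $\tanh(x/2)$. The sketch below relies on that identity to avoid overflow. It assumes the package follows this common definition, and `bipolar_sigmoid` is a hypothetical name.

```python
import math


def bipolar_sigmoid(x: float) -> float:
    """(1 - e^-x) / (1 + e^-x), evaluated as tanh(x / 2)."""
    return math.tanh(x / 2.0)
```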

-

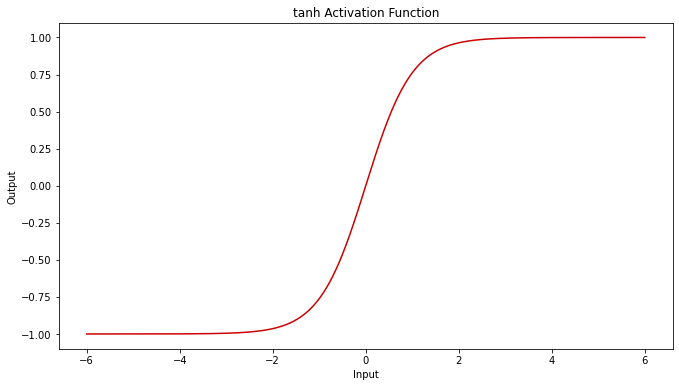

Tanh:

-

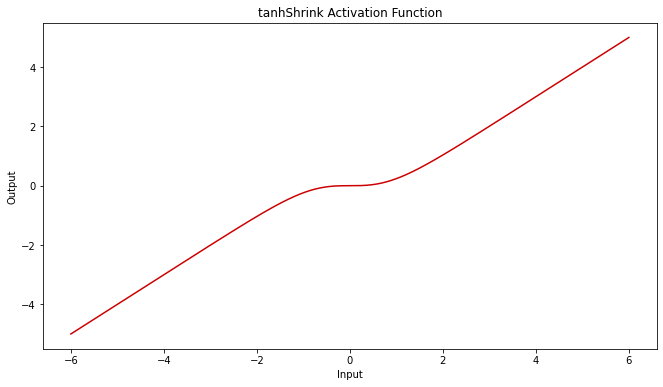

Tanh Shrink:

-

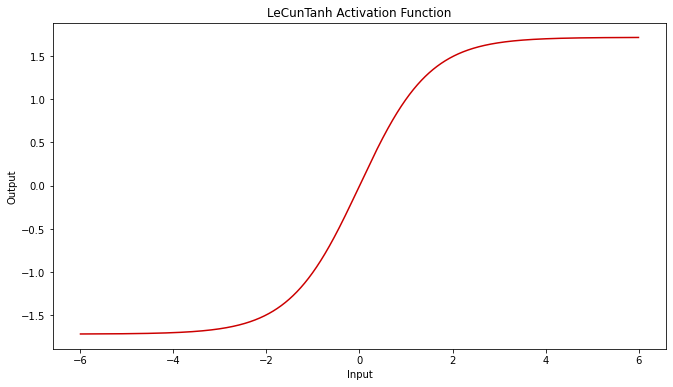

LeCunTanh:
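LeCun's scaled tanh is usually given as $f(x) = 1.7159 \tanh(2x/3)$, with constants chosen so that $f(\pm 1) \approx \pm 1$. A minimal plain-Python sketch, assuming the package uses these standard constants (the name `lecun_tanh` is illustrative):

```python
import math


def lecun_tanh(x: float) -> float:
    """Scaled tanh with f(1) close to 1 and f(-1) close to -1."""
    return 1.7159 * math.tanh(2.0 * x / 3.0)
```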

-

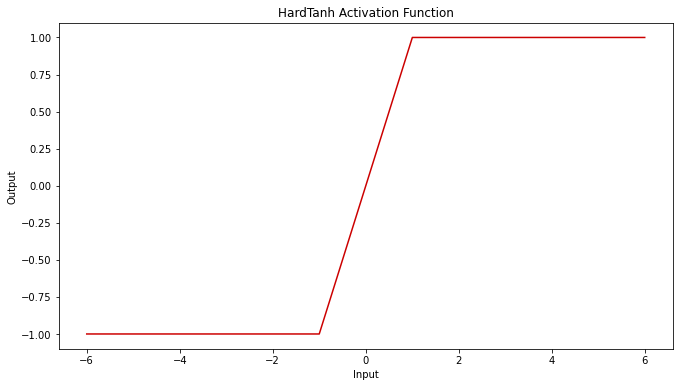

Hard Tanh:

-

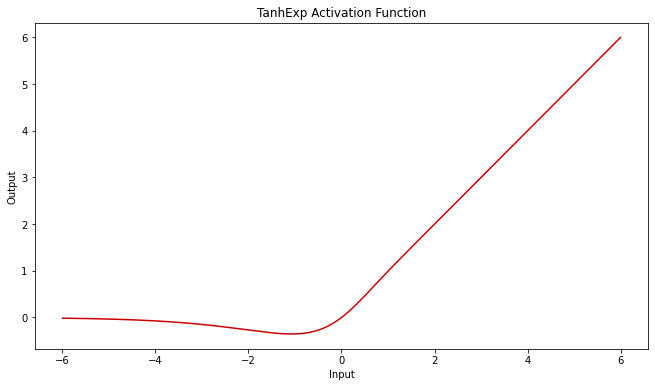

TanhExp:

-

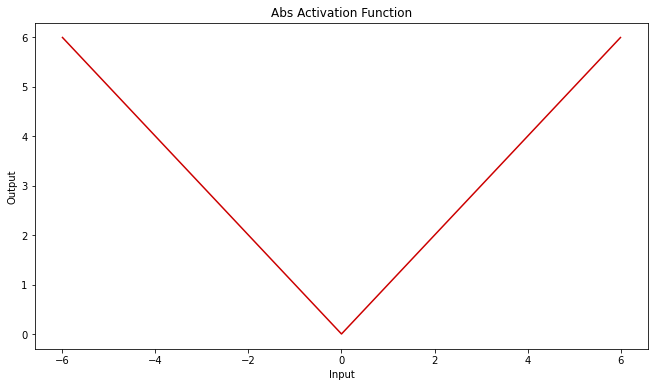

ABS:

-

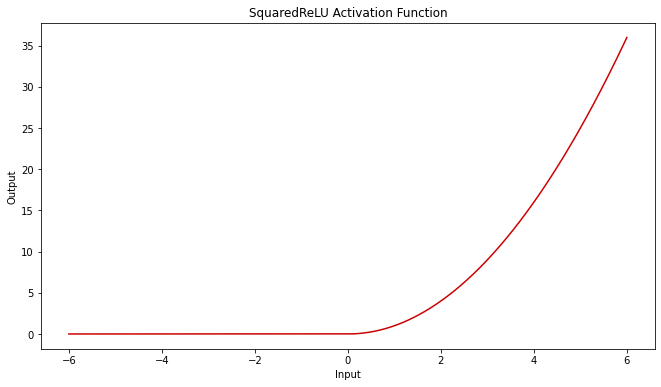

SquaredReLU:

-

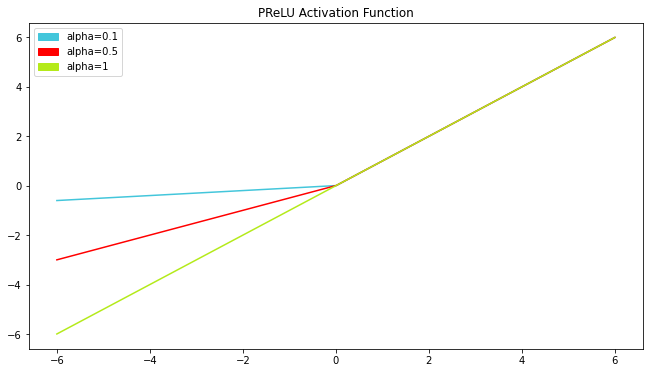

ParametricReLU (PReLU):

-

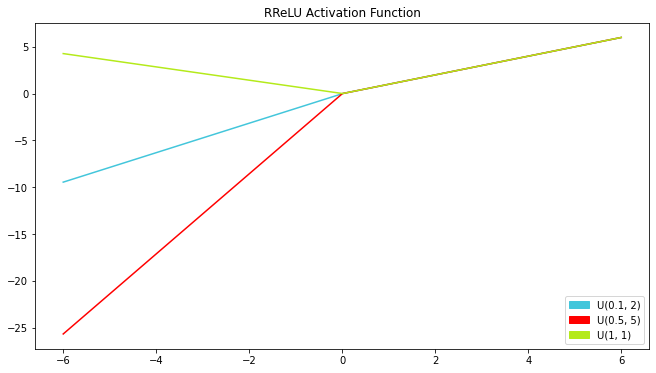

RandomizedReLU (RReLU):

-

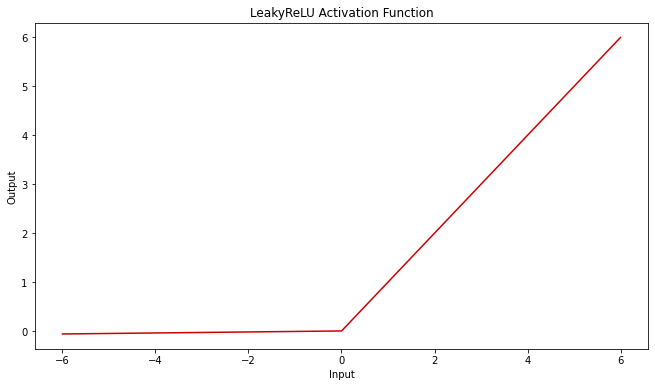

LeakyReLU:

-

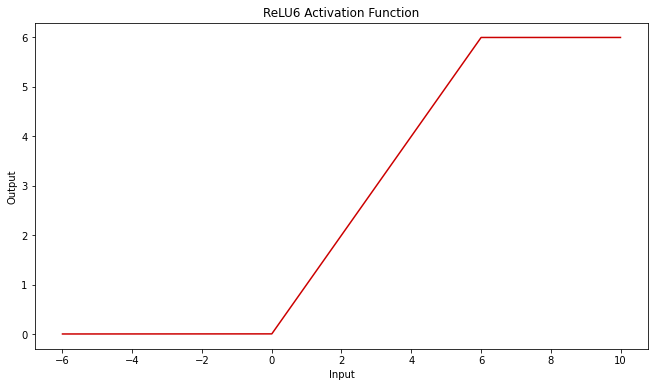

ReLU6:

-

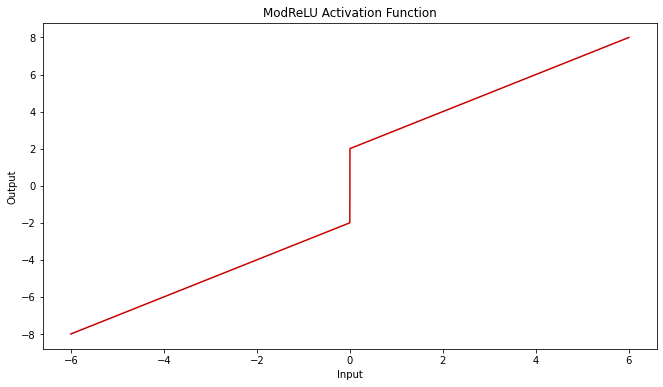

ModReLU:

-

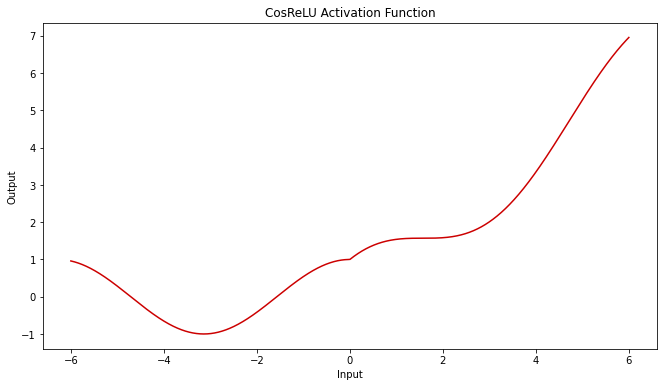

CosReLU:

-

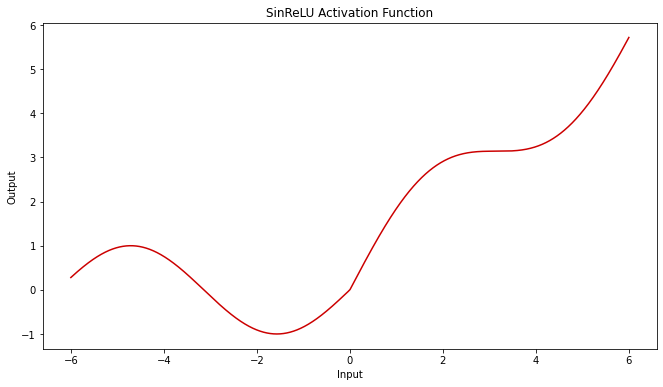

SinReLU:

-

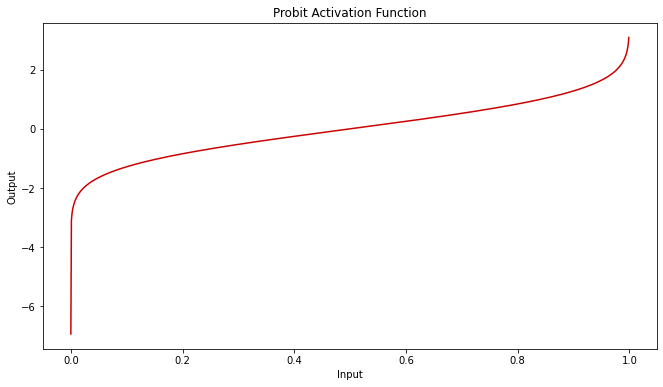

Probit:

-

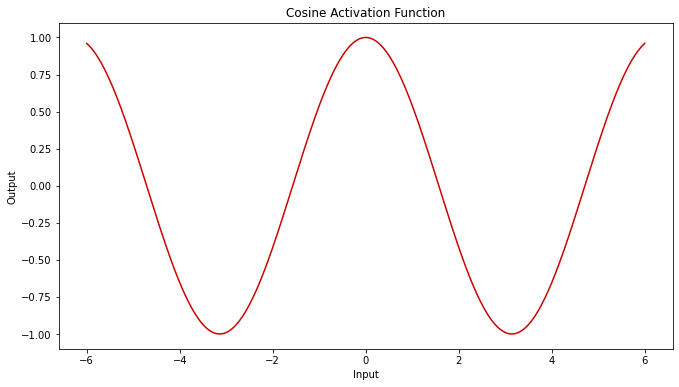

Cosine:

-

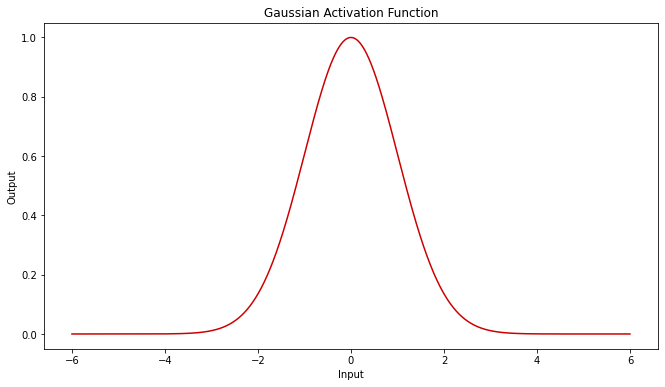

Gaussian:

-

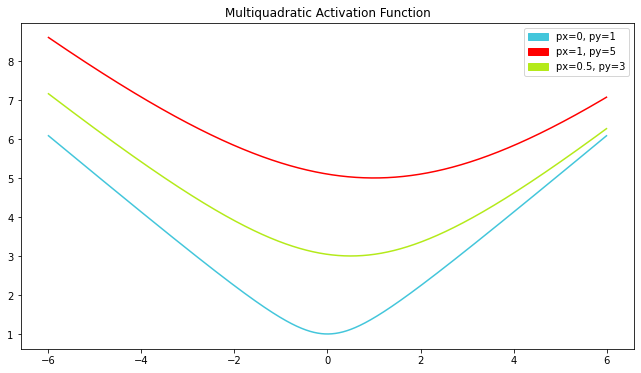

Multiquadratic:

Choose some point (x,y).

-

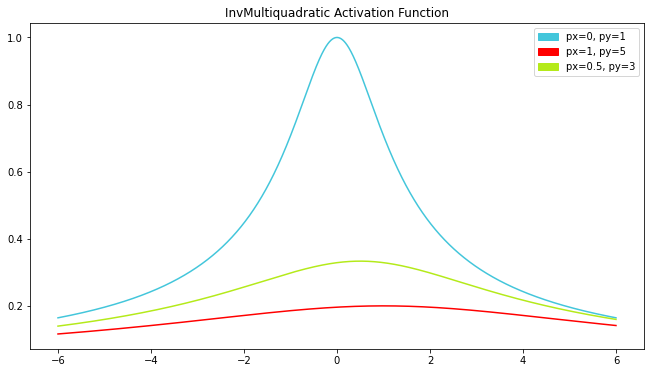

InvMultiquadratic:

-

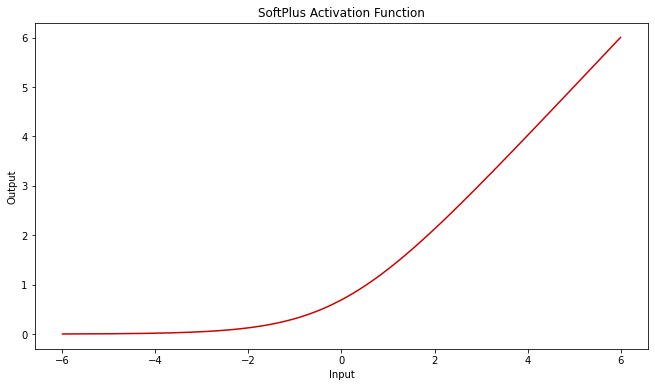

SoftPlus:

-

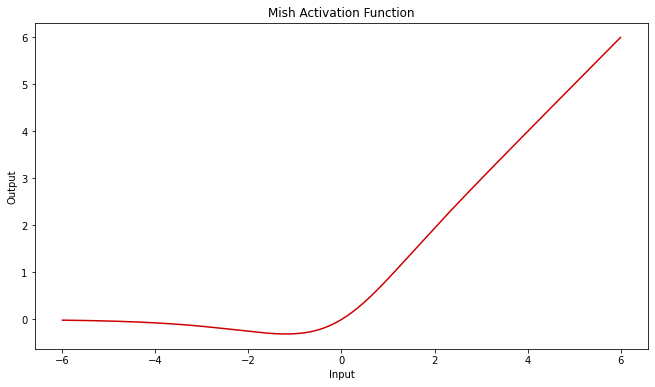

Mish:
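Mish is commonly defined as $f(x) = x \tanh(\mathrm{softplus}(x))$ with $\mathrm{softplus}(x) = \log(1 + e^{x})$. The sketch below is a scalar reference in plain Python that uses an overflow-safe softplus; it illustrates the formula and is not the package's implementation. The helper names are assumptions.

```python
import math


def softplus(x: float) -> float:
    """log(1 + e^x), rearranged so exp never receives a large argument."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    return x * math.tanh(softplus(x))
```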

-

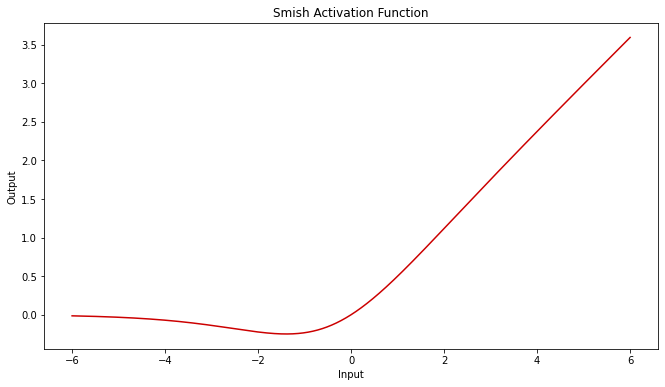

Smish:

-

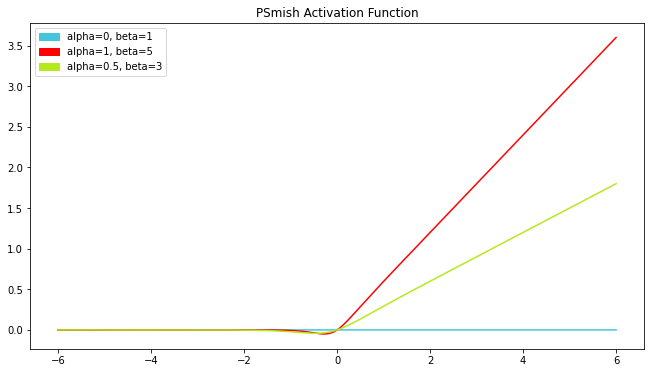

ParametricSmish (PSmish):

-

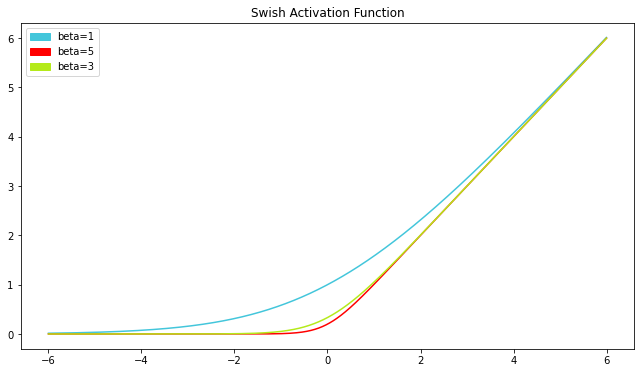

Swish:

-

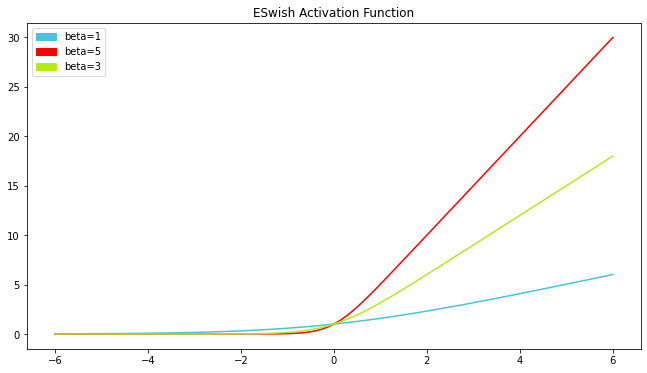

ESwish:

-

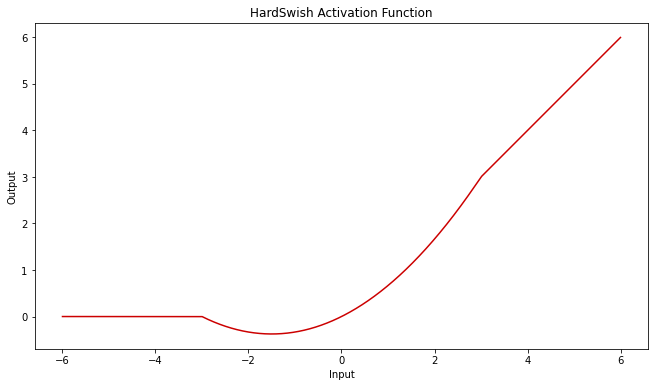

Hard Swish:
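Hard Swish replaces the sigmoid in Swish with a piecewise-linear gate and is commonly written as $f(x) = x \cdot \mathrm{ReLU6}(x + 3) / 6$. The plain-Python sketch below assumes that definition; `hard_swish` is a hypothetical name, and the package may differ in detail.

```python
def hard_swish(x: float) -> float:
    """x * ReLU6(x + 3) / 6: zero below -3, identity above 3."""
    relu6 = min(max(x + 3.0, 0.0), 6.0)
    return x * relu6 / 6.0
```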

-

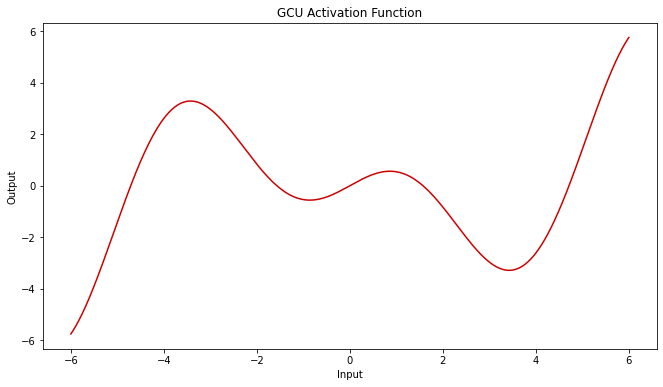

GCU:

-

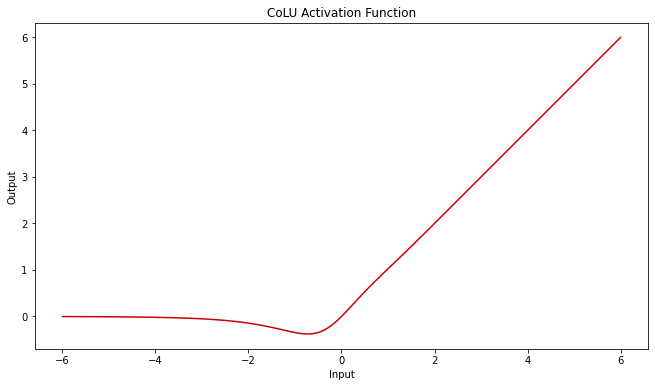

CoLU:

-

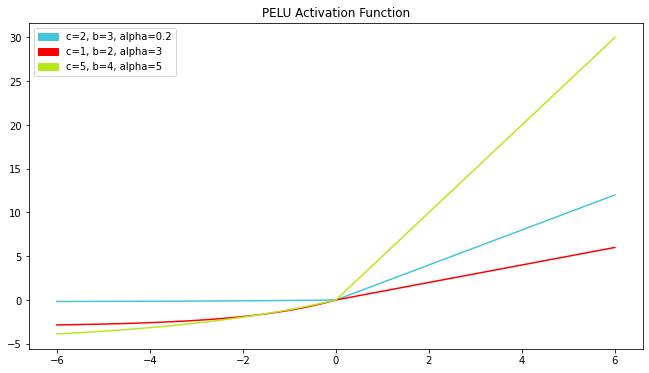

PELU:

-

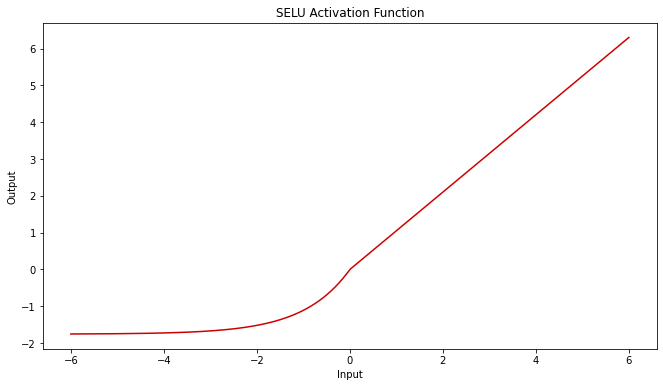

SELU:

where $\alpha \approx 1.6733$ and $\lambda \approx 1.0507$
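With the constants quoted above, SELU is usually written as $f(x) = \lambda x$ for $x > 0$ and $f(x) = \lambda \alpha (e^{x} - 1)$ otherwise. The sketch below plugs the rounded constants into that formula in plain Python. It is a reference for the arithmetic only, and the names `ALPHA`, `SCALE`, and `selu` are assumptions.

```python
import math

ALPHA = 1.6733  # rounded alpha from the text
SCALE = 1.0507  # rounded lambda from the text


def selu(x: float) -> float:
    """Scaled ELU: linear for positive inputs, saturating for negative ones."""
    if x > 0:
        return SCALE * x
    return SCALE * ALPHA * math.expm1(x)
```

For strongly negative inputs the output saturates near $-\lambda\alpha \approx -1.7581$.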

-

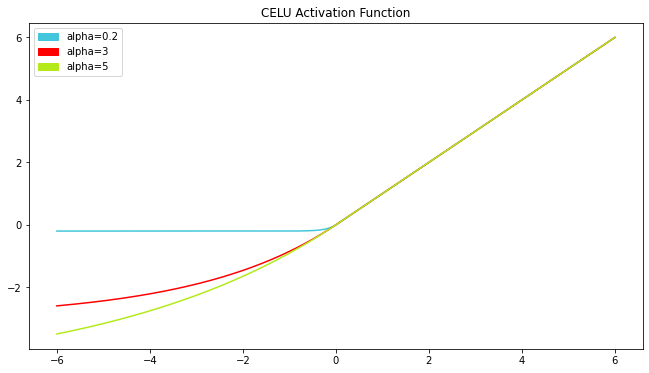

CELU:

-

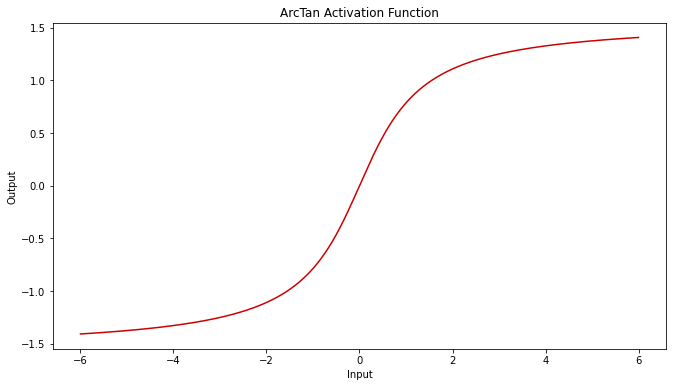

ArcTan:

-

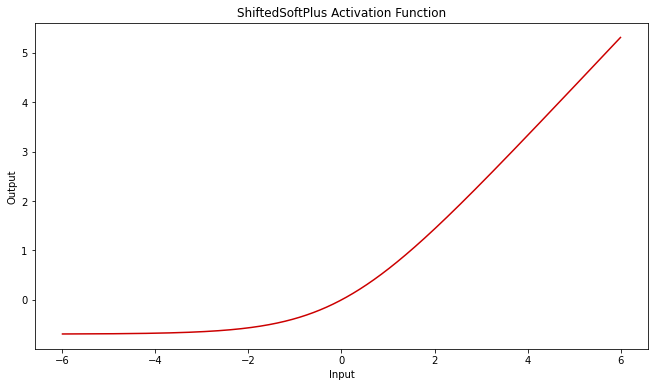

ShiftedSoftPlus:
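Shifted softplus is commonly defined as $f(x) = \log(0.5 e^{x} + 0.5)$, which equals $\mathrm{softplus}(x) - \log 2$ and therefore passes through the origin. A minimal plain-Python sketch under that assumption follows; `shifted_softplus` is an illustrative name.

```python
import math


def shifted_softplus(x: float) -> float:
    """softplus(x) - log(2), so that f(0) = 0."""
    softplus = max(x, 0.0) + math.log1p(math.exp(-abs(x)))
    return softplus - math.log(2.0)
```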

-

Softmax:
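Softmax maps a vector of scores to probabilities, $f(x)_i = e^{x_i} / \sum_j e^{x_j}$. Unlike most entries in this list it acts on a whole vector rather than on one value at a time. The plain-Python sketch below subtracts the maximum before exponentiating, a standard trick that leaves the result unchanged while avoiding overflow. It is an illustration, not the package's implementation.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    if not logits:
        raise ValueError("logits must not be empty")
    shift = max(logits)  # subtracting the max does not change the result
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]
```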

-

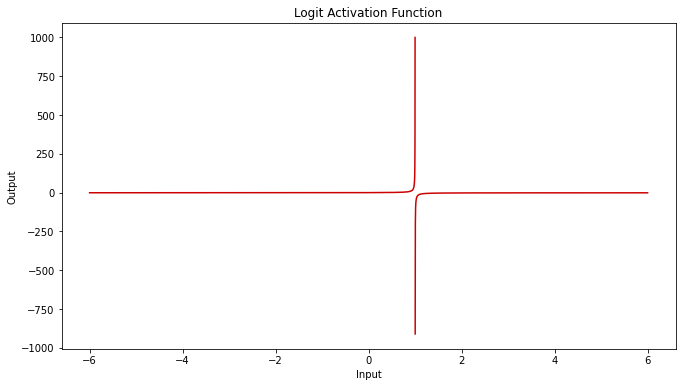

Logit:

-

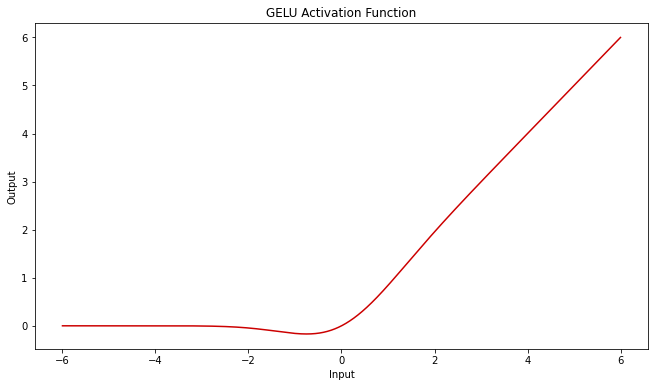

GELU:
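GELU is defined as $f(x) = x\,\Phi(x)$, where $\Phi$ is the standard normal CDF. Many implementations also offer the approximation $0.5x\big(1 + \tanh(\sqrt{2/\pi}\,(x + 0.044715x^{3}))\big)$. The sketch below shows both forms in plain Python for comparison; which one the package uses is not stated here, and the function names are assumptions.

```python
import math


def gelu(x: float) -> float:
    """Exact GELU using the error function."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Widely used tanh approximation of GELU."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))
```

The two versions agree closely over typical input ranges, so the choice mostly affects speed and bit-for-bit reproducibility.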

-

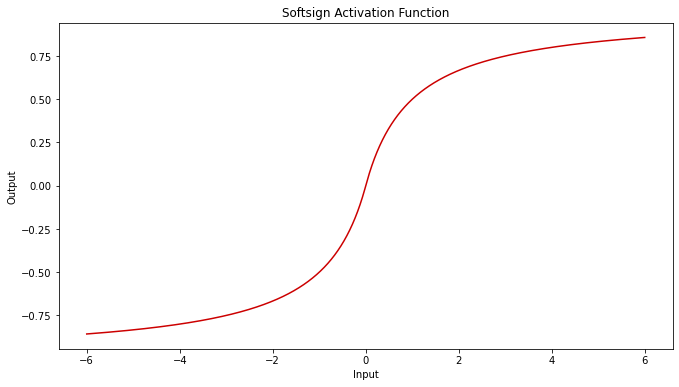

Softsign:

-

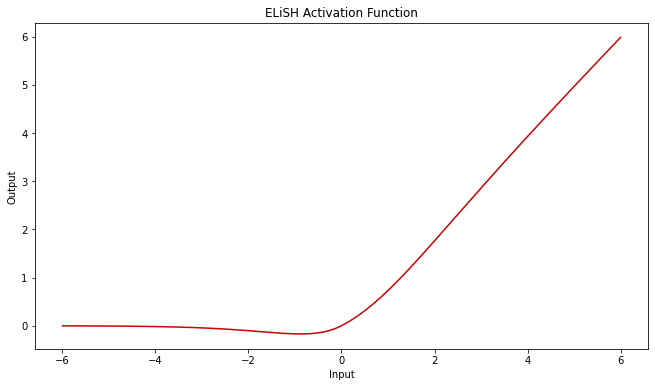

ELiSH:

-

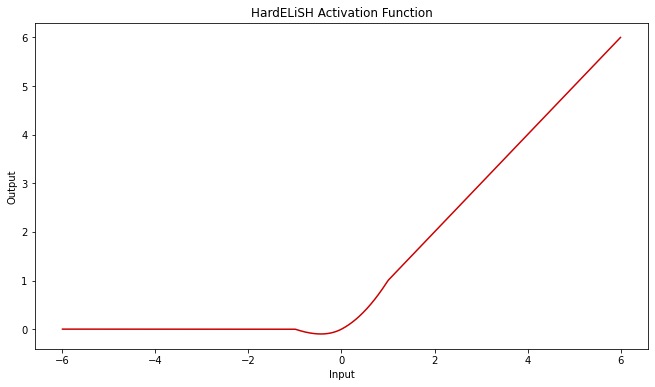

Hard ELiSH:

-

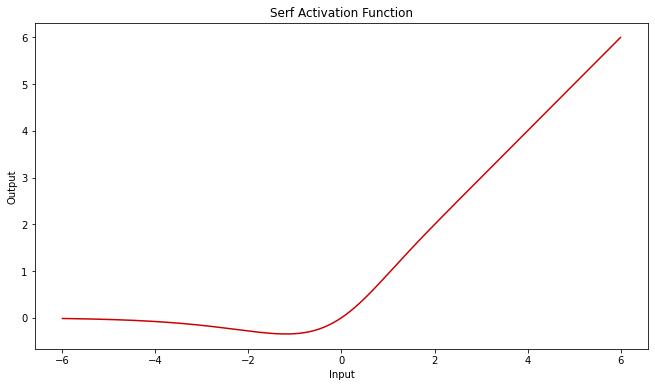

Serf:

-

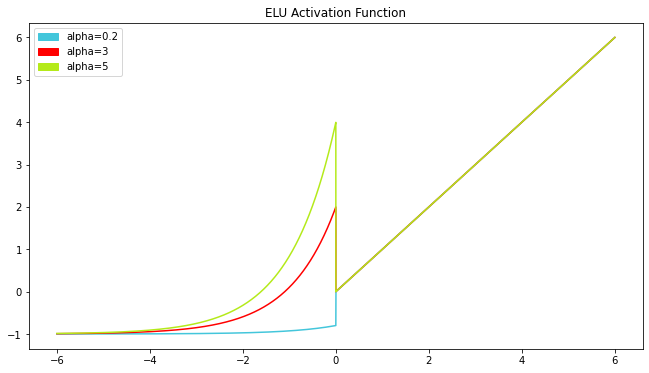

ELU:

-

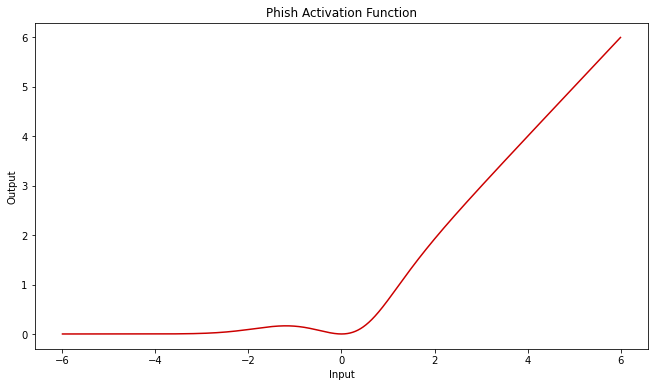

Phish:

-

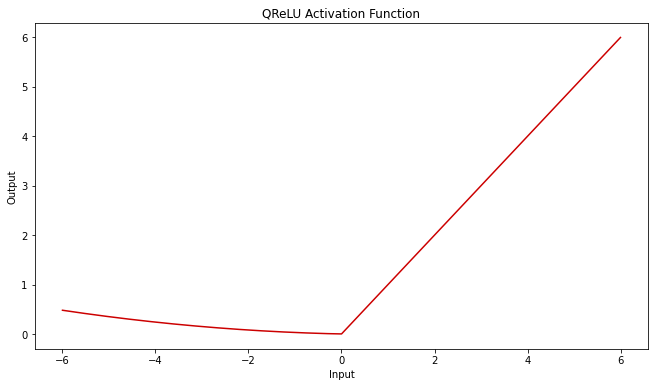

QReLU:

-

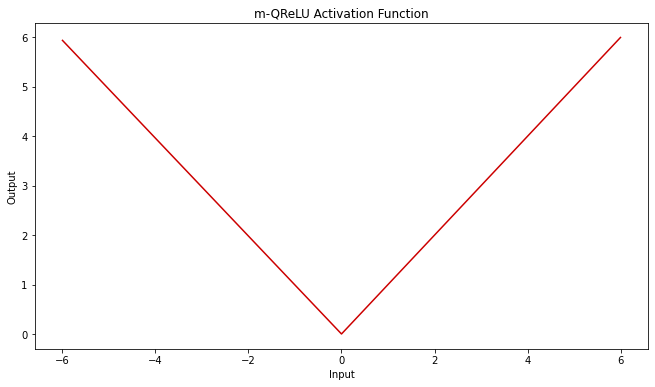

m-QReLU:

-

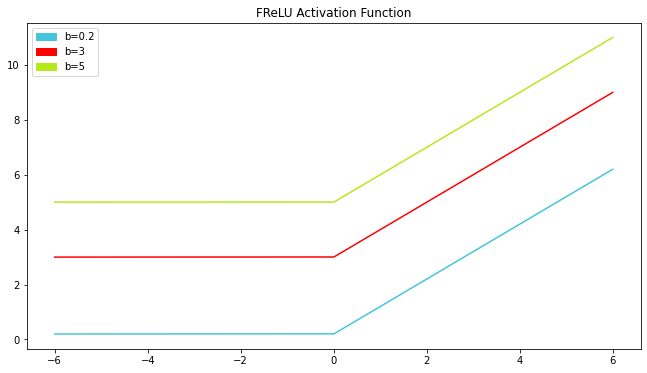

FReLU:

Cite this repository

@software{Pouya_ActTensor_2022,

author = {Pouya, Ardehkhani and Pegah, Ardehkhani},

license = {MIT},

month = {7},

title = {{ActTensor}},

url = {https://github.com/pouyaardehkhani/ActTensor},

version = {1.0.0},

year = {2022}

}
