KRATOS Multiphysics ("Kratos") is a framework for building parallel, multi-disciplinary simulation software, aiming at modularity, extensibility, and high performance. Kratos is written in C++ and has an extensive Python interface.


Multilevel Monte Carlo Application

MultilevelMonteCarloApplication provides different algorithms, belonging to the Monte Carlo (MC) family, to estimate statistics of scalar and field quantities of interest. The application is designed for running on distributed and high performance computing systems, exploiting both OpenMP and MPI parallel strategies. The application contains several interfaces with external libraries.

Getting started

This application is part of the Kratos Multiphysics Platform. Instructions on how to download, install and run the software on your local machine for development and testing purposes are available for both Linux and Windows systems.

Prerequisites

Build Kratos and make sure to have

add_app ${KRATOS_APP_DIR}/MultilevelMonteCarloApplication

in the compilation configuration, so that the MultilevelMonteCarloApplication is compiled.

Hierarchical Monte Carlo methods

- Repeatedly generate the random input and solve the associated deterministic problem.

- Convergence to the exact statistics as the number of realizations grows.

- The problem under consideration is treated as a black box.

- The convergence rate is independent of the dimension of the stochastic space.
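The four properties above can be illustrated with a minimal plain Monte Carlo sketch. The solver `solve_deterministic` is a hypothetical stand-in for a full Kratos simulation (here simply Q(w) = w², with w drawn from a standard normal); it is treated purely as a black box, and the standard error shrinks like 1/sqrt(N) regardless of how many random inputs the real problem has. This is an illustration only, not the XMC implementation.

```python
import math
import random
import statistics


def solve_deterministic(sample):
    """Hypothetical black-box solver: maps one random input to a scalar QoI.

    Stands in for a full simulation; here Q(w) = w**2, so E[Q] = 1.
    """
    return sample ** 2


def monte_carlo(num_samples, seed=0):
    """Plain Monte Carlo estimate of E[Q] and its standard error."""
    rng = random.Random(seed)
    # Repeatedly generate the random input and solve the deterministic problem
    values = [solve_deterministic(rng.gauss(0.0, 1.0)) for _ in range(num_samples)]
    mean = statistics.fmean(values)
    # Statistical error decays like 1/sqrt(N), independently of the input dimension
    std_error = statistics.stdev(values) / math.sqrt(num_samples)
    return mean, std_error


if __name__ == "__main__":
    for n in (100, 10_000):
        print(n, monte_carlo(n))
```

Increasing the number of samples by a factor of 100 should reduce the standard error by roughly a factor of 10.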

Monte Carlo

- Monte Carlo (MC) is the reference method in the stochastic analysis of multiphysics problems with uncertainties in the data parameters.

- Levels of parallelism:

  - Between samples,

  - On each sample at solver level.

- Hierarchy update:

  - Deterministic,

  - Adaptive.

Multilevel Monte Carlo

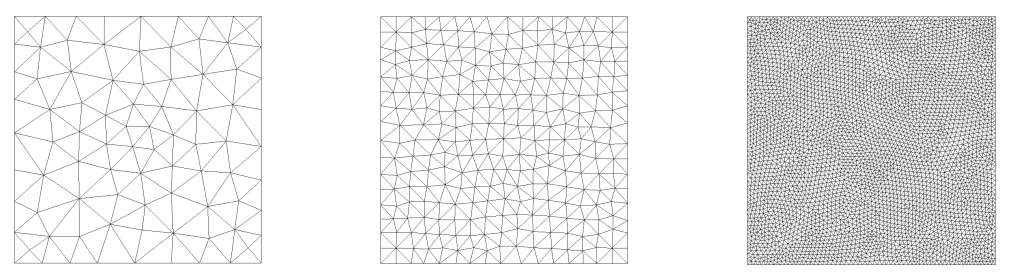

- Multilevel Monte Carlo (MLMC) requires a hierarchy of levels with increasing accuracy to solve the statistical problem. Convergence rate is faster with respect to MC if MLMC hypotheses are satisfied.

- Computation of a large number of cheap and lower accuracy realizations, while only few expensive high accuracy realizations are run.

- Low accuracy levels: capture statistical variability,

- High accuracy levels: capture discretization error.

- Example of hierarchy of computational grids, showing increasing accuracy levels (by decreasing mesh size):

- Levels of parallelism:

- Between levels,

- Between samples,

- On each sample at solver level.

- Hierarchy update:

- Deterministic,

- Adaptive.
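The MLMC idea can be sketched with the standard telescoping estimator E[Q_L] = E[Q_0] + Σ_l E[Q_l − Q_{l−1}], where each correction uses the same random input on the fine and coarse level. In this toy sketch, the hypothetical "level-l solver" is a midpoint rule with 2^l cells for Q(w) = ∫₀¹ exp(w x) dx, with w uniform on [0, 1]; many samples are spent on the cheap coarse level and few on the fine ones. It is not the XMC or Kratos implementation.

```python
import math
import random
import statistics


def qoi_at_level(w, level):
    """Hypothetical level-l solver: midpoint rule with 2**level cells for
    Q(w) = integral_0^1 exp(w x) dx. A higher level means a finer 'mesh'."""
    n = 2 ** level
    h = 1.0 / n
    return h * sum(math.exp(w * (i + 0.5) * h) for i in range(n))


def mlmc_estimate(samples_per_level, seed=0):
    """Telescoping MLMC estimator: E[Q_L] ~ sum_l mean(Q_l - Q_{l-1}), Q_{-1} = 0."""
    rng = random.Random(seed)
    estimate = 0.0
    for level, n_samples in enumerate(samples_per_level):
        corrections = []
        for _ in range(n_samples):
            w = rng.random()  # same random input on both levels (coupling)
            fine = qoi_at_level(w, level)
            coarse = qoi_at_level(w, level - 1) if level > 0 else 0.0
            corrections.append(fine - coarse)
        estimate += statistics.fmean(corrections)
    return estimate


if __name__ == "__main__":
    # Many cheap coarse samples, few expensive fine ones
    print(mlmc_estimate([4000, 1000, 250, 60]))
```

Because the coupled corrections Q_l − Q_{l−1} have small variance on fine levels, few fine samples suffice; the exact value here is Σ_{k≥1} 1/(k·k!) ≈ 1.3179.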

Asynchronous Monte Carlo

- This algorithm is equivalent to MC, but designed for running in distributed environments. It avoids idle times and keeps computational efficiency at its maximum.

- Levels of parallelism:

  - Between batches,

  - Between samples,

  - On each sample at solver level.

- Hierarchy update:

  - Deterministic.
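A minimal sketch of the batch idea, assuming each batch returns its local power sums rather than raw samples: batches run independently and are merged in whatever order they finish, so no batch waits for another. `run_batch` and `asynchronous_mc` are hypothetical names; threads are used only to show out-of-order completion (in practice the application distributes work with PyCOMPSs and MPI, and Python threads give no speed-up for CPU-bound work).

```python
import random
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_batch(batch_id, batch_size):
    """Hypothetical batch: runs batch_size independent samples and returns
    the local power sums (S1, S2) and the sample count."""
    rng = random.Random(batch_id)  # independent random stream per batch
    s1 = s2 = 0.0
    for _ in range(batch_size):
        q = rng.gauss(0.0, 1.0) ** 2  # stand-in for a black-box solve
        s1 += q
        s2 += q * q
    return s1, s2, batch_size


def asynchronous_mc(num_batches=8, batch_size=500, workers=4):
    s1 = s2 = 0.0
    n = 0
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(run_batch, b, batch_size) for b in range(num_batches)]
        # Merge each batch as soon as it completes: no barrier between batches
        for future in as_completed(futures):
            b1, b2, bn = future.result()
            s1, s2, n = s1 + b1, s2 + b2, n + bn
    mean = s1 / n
    variance = (n * s2 - s1 * s1) / (n * (n - 1))  # unbiased sample variance
    return mean, variance, n
```

Since power sums are additive, the merged statistics do not depend on the order in which batches complete.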

Asynchronous Multilevel Monte Carlo

- This algorithm is equivalent to MLMC, but designed for running in distributed environments. It avoids idle times and keeps computational efficiency at its maximum.

- Levels of parallelism:

  - Between batches,

  - Between levels,

  - Between samples,

  - On each sample at solver level.

- Hierarchy update:

  - Deterministic.

Continuation Multilevel Monte Carlo

- A set of decreasing tolerances is used and updated on the fly to adaptively estimate the hierarchy and run MLMC.

- Levels of parallelism:

  - Between levels,

  - Between samples,

  - On each sample at solver level.

- Hierarchy update:

  - Adaptive.
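A simplified sketch of the continuation idea, under stated assumptions: tolerances decrease geometrically towards the final one, and at each tolerance the number of samples per level is recomputed from (re-estimated) level variances V_l and costs C_l using the classical MLMC allocation N_l = ⌈2 ε⁻² sqrt(V_l/C_l) Σ_k sqrt(V_k C_k)⌉, which keeps the statistical error Σ V_l/N_l ≤ ε²/2. The geometric ratio and function names are hypothetical; this is not the exact CMLMC algorithm used by XMC.

```python
import math


def tolerance_sequence(final_tol, ratio=2.0, steps=4):
    """Decreasing tolerances: final_tol * ratio**(steps-1), ..., final_tol."""
    return [final_tol * ratio ** (steps - 1 - k) for k in range(steps)]


def optimal_samples(tol, variances, costs):
    """Classical MLMC sample allocation ensuring sum_l V_l / N_l <= tol**2 / 2."""
    total = sum(math.sqrt(v * c) for v, c in zip(variances, costs))
    return [math.ceil(2.0 / tol ** 2 * math.sqrt(v / c) * total)
            for v, c in zip(variances, costs)]


if __name__ == "__main__":
    # Hypothetical level variances and costs; in practice they are
    # re-estimated from the samples computed so far at each tolerance.
    variances = [1.0, 0.25, 0.0625]
    costs = [1.0, 4.0, 16.0]
    for tol in tolerance_sequence(0.01):
        print(tol, optimal_samples(tol, variances, costs))
```

Running the loose tolerances first provides cheap estimates of V_l and C_l, which then drive the allocation (and possibly the number of levels) at tighter tolerances.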

Statistical tools

Power sums

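- Power sums are updated on the fly, as new realizations become available.

- A power sum of order p, computed over n realizations $Q_1, \dots, Q_n$ of the quantity of interest, is defined as:

$$S_p = \sum_{i=1}^{n} Q_i^{p}.$$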
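An on-the-fly update of power sums S_p = Σ_i Q_i^p can be sketched as a small accumulator: each new realization adds Q^p to every stored sum, and two partial accumulators (for example from different batches) merge exactly by addition. The class name `PowerSums` is hypothetical and not the XMC API.

```python
class PowerSums:
    """Running power sums S_1..S_max_order, updated one realization at a time."""

    def __init__(self, max_order=4):
        self.n = 0
        self.sums = [0.0] * (max_order + 1)  # sums[p] holds S_p; sums[0] is unused

    def update(self, value):
        """Add one realization of the quantity of interest."""
        self.n += 1
        for p in range(1, len(self.sums)):
            self.sums[p] += value ** p

    def merge(self, other):
        """Power sums are additive, so partial accumulators combine exactly.

        Assumes both accumulators track the same maximum order.
        """
        self.n += other.n
        for p in range(1, len(self.sums)):
            self.sums[p] += other.sums[p]
```

Only n and a handful of numbers need to be stored, whatever the number of realizations, which is what makes the on-the-fly update cheap.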

h-statistics

- The h-statistic of order p is the unbiased estimator with minimal variance of the central moment of order p.

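- The h-statistic of order p depends only on the power sums up to order p and on the number of realizations n:

$$h_p = h_p\left(S_1, \dots, S_p, n\right).$$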
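As an example of this dependence, the first three h-statistics can be written directly in terms of the power sums S_1, S_2, S_3 and n, using the standard closed-form expressions (h_1 is the sample mean, h_2 the unbiased sample variance, h_3 the unbiased third central moment). The function name is hypothetical; the fourth-order statistic is omitted here for brevity.

```python
def h_statistics(s1, s2, s3, n):
    """Return (h1, h2, h3) from power sums S_1..S_3 of n realizations.

    h1: unbiased mean, h2: unbiased variance,
    h3: unbiased third central moment. Requires n >= 3.
    """
    if n < 3:
        raise ValueError("h3 needs at least 3 realizations")
    h1 = s1 / n
    h2 = (n * s2 - s1 ** 2) / (n * (n - 1))
    h3 = (n ** 2 * s3 - 3 * n * s2 * s1 + 2 * s1 ** 3) / (n * (n - 1) * (n - 2))
    return h1, h2, h3
```

For the realizations 1, 2, 3, 4 (S_1 = 10, S_2 = 30, S_3 = 100) this gives a mean of 2.5, a variance of 5/3 and a third central moment of 0, as expected for symmetric data.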

Convergence criteria

- Convergence is achieved if the estimator of interest reaches a desired tolerance with respect to the true estimator with a given confidence.

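- The failure probability condition to satisfy (for the expected value and MC) is $\mathbb{P}\left[\left|\mathbb{E}^{MC}[Q] - \mathbb{E}[Q]\right| > \varepsilon\right] \leq 1 - \phi$, where $\varepsilon$ is the tolerance and $\phi$ the confidence.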

- Other convergence criteria available:

  - Mean square error,

  - Sample variance criteria (MC only),

  - Higher order (up to the fourth) moments criteria (MC only).
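For the expected value with plain MC, the criterion "error below a tolerance ε with confidence φ" can be checked approximately through the central limit theorem: the MC mean error is treated as Gaussian with variance h_2/n, so convergence holds when z · sqrt(h_2/n) ≤ ε, with z the two-sided normal quantile for φ. This Gaussian approximation and the function names are assumptions for illustration, not the XMC criterion classes.

```python
import math
from statistics import NormalDist


def _two_sided_quantile(confidence):
    """Normal quantile z such that P(|Z| <= z) = confidence."""
    return NormalDist().inv_cdf(0.5 + confidence / 2.0)


def mc_converged(h2, n, tolerance, confidence=0.99):
    """CLT-based check of P(|E^MC[Q] - E[Q]| > tolerance) <= 1 - confidence."""
    return _two_sided_quantile(confidence) * math.sqrt(h2 / n) <= tolerance


def required_samples(h2, tolerance, confidence=0.99):
    """Smallest n satisfying the CLT-based criterion for a given variance h2."""
    z = _two_sided_quantile(confidence)
    return math.ceil(h2 * (z / tolerance) ** 2)
```

With a unit variance, a tolerance of 0.01 and 99% confidence, the required number of samples is a little over 66,000, which illustrates why variance reduction (for example MLMC) matters.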

Hierarchy

- Hierarchy strategies:

  - stochastic adaptive refinement: hierarchy of levels built by refining in space, performing solution-oriented adaptive space refinement. The coarsest mesh is shared between all realizations, and for each realization different meshes are generated, according to the random variable. Requires compiling MESHING_APPLICATION.

  - deterministic adaptive refinement: hierarchy of levels built by refining in space, performing solution-oriented adaptive space refinement. All meshes are shared between all realizations, and adaptive refinement is done at pre-processing, exploiting a user-defined random variable. Requires compiling MESHING_APPLICATION.

  - standard: the user builds the hierarchy with the strategy of their choice (such as uniform refinement).

- Metric strategies:

  - geometric error estimate: the analysis of the Hessian of the numerical solution controls the mesh refinement.

  - divergence-free error estimate: the analysis of mass conservation controls the mesh refinement (suitable only for CFD cases). Requires compiling EXAQUTE_SANDBOX_APPLICATION. In progress.
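The geometric error estimate can be illustrated in 1D, where the Hessian reduces to the second derivative: for linear elements the interpolation error is bounded by h²|u''|/8, so a target error ε gives a local element size h = sqrt(8ε/|u''|), clipped to user bounds. This is a hedged 1D sketch of the principle only; the function name and the bounds `h_min`, `h_max` are hypothetical, and the actual metric computation is done by Kratos' MeshingApplication and Mmg in multiple dimensions.

```python
import math


def hessian_mesh_size(u, x, interp_error, h_min=1e-3, h_max=0.5):
    """Target element sizes at interior nodes from the second derivative of u.

    Uses |u - I_h u| <= h**2 |u''| / 8 for linear elements, so
    h = sqrt(8 * interp_error / |u''|), clipped to [h_min, h_max].
    """
    sizes = []
    for i in range(1, len(x) - 1):
        # Second derivative by central differences on a possibly non-uniform grid
        dl = x[i] - x[i - 1]
        dr = x[i + 1] - x[i]
        d2u = 2.0 * ((u[i + 1] - u[i]) / dr - (u[i] - u[i - 1]) / dl) / (dl + dr)
        curvature = abs(d2u)
        h = h_max if curvature == 0.0 else math.sqrt(8.0 * interp_error / curvature)
        sizes.append(min(h_max, max(h_min, h)))
    return sizes


if __name__ == "__main__":
    xs = [i / 100 for i in range(101)]
    us = [math.tanh(20.0 * (xi - 0.5)) for xi in xs]  # sharp front at x = 0.5
    print(min(hessian_mesh_size(us, xs, 1e-3)))
```

Regions with strong curvature (here, around the front) receive small elements, while nearly linear regions are coarsened up to `h_max`.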

External Libraries

MultilevelMonteCarloApplication makes use of third-party libraries. Information about these libraries can be found on their respective pages, listed below.

XMC

XMC [3] is a Python library, with BSD 4 license, designed for hierarchical Monte Carlo methods. The library implements the above-mentioned algorithms, statistical tools and convergence criteria, and integrates naturally with Kratos, which is XMC's default solver. By default, an internal version of the library is used. To use an external version of the library instead, set the environment variable XMC_BACKEND=external.

PyCOMPSs

PyCOMPSs is the Python library required to use the task-based programming framework COMPSs in a Python environment.

By default, PyCOMPSs is not required to run the application.

To run using this library, the environment variable EXAQUTE_BACKEND=pycompss must be set.

The current version can run several thousand samples at once by exploiting PyCOMPSs in distributed systems, maximizing parallelism and computational efficiency. Optimal scalability up to 128 working nodes (6144 CPUs) has been demonstrated with both OpenMP and MPI parallelism.

Instructions for the installation can be found in the Kratos wiki.

To run with runcompss, the environment variable EXAQUTE_BACKEND=pycompss must be set to use the distributed computing capabilities.

Additionally, running with runcompss requires adding the path of the XMC library, that is /path/to/Kratos/applications/MultilevelMonteCarloApplication/external_libraries/XMC, to the PYTHONPATH. You can add the library to the PYTHONPATH either in the .bashrc file, or directly when running the code using the runcompss option --pythonpath. We refer to the Kratos wiki for details.

Mmg and ParMmg

Mmg is an open source software for simplicial remeshing. It provides 3 applications and 4 libraries. Instructions for installing Mmg can be found in the Kratos wiki. ParMmg is the MPI parallel version of the remeshing library Mmg. Instructions for installing ParMmg can be found in the Kratos wiki.

Scalability

Examples

Many examples can be found in the Kratos Multiphysics Examples repository.

License

The MultilevelMonteCarloApplication is OPEN SOURCE. The main code and program structure are available and aimed to grow with the needs of any user willing to expand it. The BSD (Berkeley Software Distribution) licence allows the existing code to be used and distributed without restriction, while new parts of the code can be developed on an open or closed basis, at the developers' choice.

Main References

[1] Tosi, R., Amela, R., Badia, R. M. & Rossi, R. (2021). A Parallel Dynamic Asynchronous Framework for Uncertainty Quantification by Hierarchical Monte Carlo Algorithms. Journal of Scientific Computing. https://doi.org/10.1007/s10915-021-01598-6

[2] Tosi, R., Núñez, M., Pons-Prats, J., Principe, J. & Rossi, R. (2022). On the use of ensemble averaging techniques to accelerate the Uncertainty Quantification of CFD predictions in wind engineering. Journal of Wind Engineering and Industrial Aerodynamics. https://doi.org/10.1016/j.jweia.2022.105105

[3] Amela, R., Ayoul-Guilmard, Q., Badia, R. M., Ganesh, S., Nobile, F., Rossi, R., & Tosi, R. (2019). ExaQUte XMC. https://doi.org/10.5281/zenodo.3235833

[4] Ejarque, J., Böhm, S., Tosi, R., Núñez, M., & Badia, R. M. (2021). D4.5 Framework development and release. ExaQUte consortium.

Contact

- Riccardo Rossi - Group Leader - rrossi@cimne.upc.edu

- Riccardo Tosi - Developer - rtosi@cimne.upc.edu

- Marc Núñez - Developer - mnunez@cimne.upc.edu

- Ramon Amela - Developer - ramon.amela@bsc.es
