dask-databricks

Cluster tools for running Dask on Databricks multi-node clusters.

Quickstart

To launch a Dask cluster on Databricks, create an init script with the following contents and configure your multi-node cluster to use it.

#!/bin/bash

# Install Dask + Dask Databricks

/databricks/python/bin/pip install --upgrade "dask[complete]" git+https://github.com/jacobtomlinson/dask-databricks.git@main

# Start Dask cluster components

dask databricks run

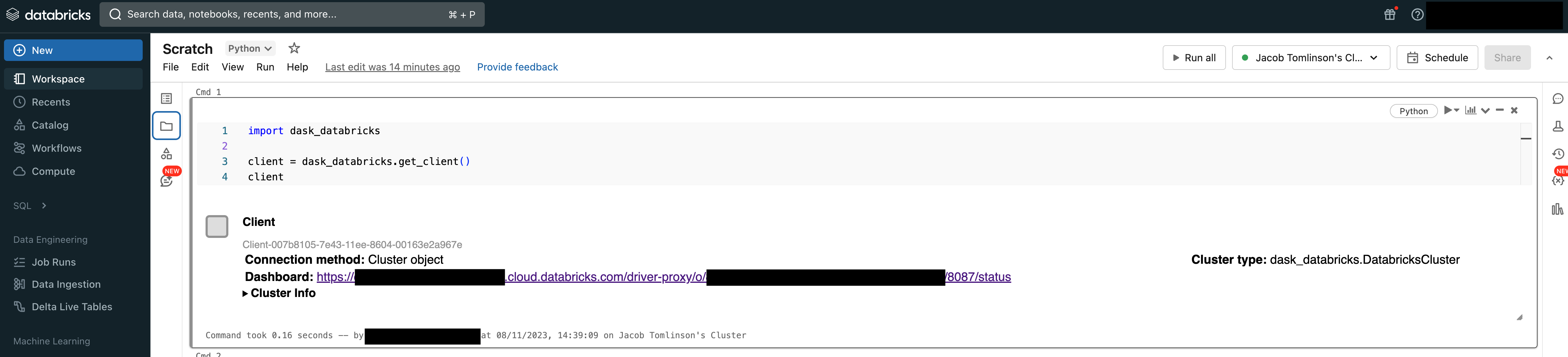

Then from your Databricks Notebook you can quickly connect a Dask Client to the scheduler running on the Spark Driver Node.

import dask_databricks

client = dask_databricks.get_client()

Now you can submit work from your notebook to the multi-node Dask cluster.

def inc(x):
    return x + 1

x = client.submit(inc, 10)

x.result()

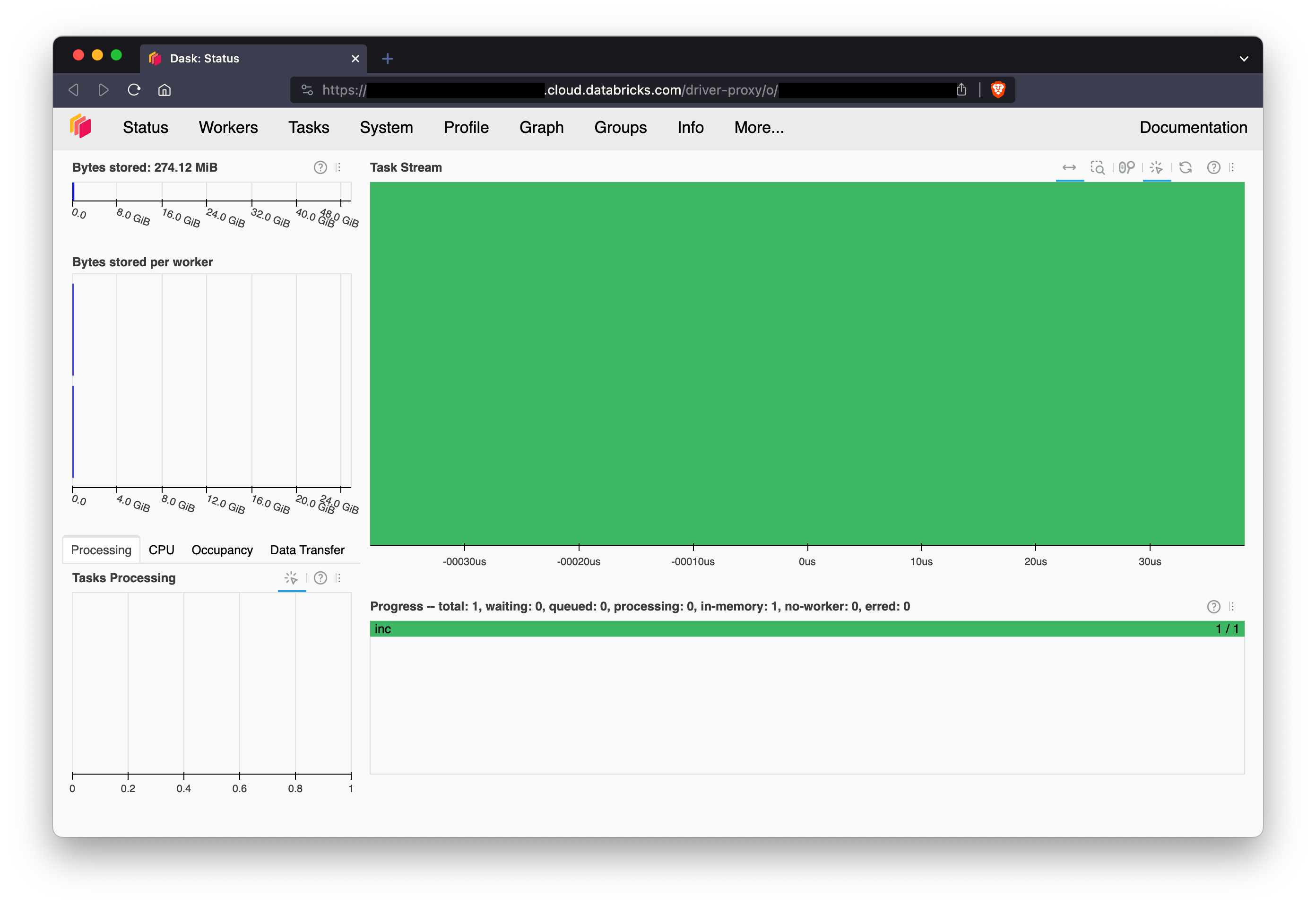
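
For more than one task, the same pattern extends to a batch of inputs. The sketch below assumes the client object exposes the standard `dask.distributed` `Client.map` and `Client.gather` methods; `increment_all` is a hypothetical helper name used for illustration and is not part of dask-databricks.

```python
def inc(x):
    return x + 1


def increment_all(client, values):
    """Submit one inc task per value and return the results in input order.

    `client` is assumed to behave like a dask.distributed Client,
    for example the object returned by dask_databricks.get_client().
    """
    futures = client.map(inc, values)  # one future per input element
    return client.gather(futures)      # blocks until every task has finished
```

In a notebook this would be called as `increment_all(client, range(5))`, with `client` obtained as shown earlier.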

Dashboard

You can access the Dask dashboard via the Databricks driver-node proxy. The link is shown in the repr of the Client or DatabricksCluster object and is also available as client.dashboard_link.

>>> print(client.dashboard_link)

https://dbc-dp-xxxx.cloud.databricks.com/driver-proxy/o/xxxx/xx-xxx-xxxx/8087/status
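
If you need the individual pieces of that link (for example, to log which port the dashboard is proxied on), it can be split with the standard library. This is a minimal sketch: the path layout and the field names `org_id` and `cluster_id` are assumptions read off the example URL above, not a documented format, and `parse_dashboard_link` is a hypothetical helper.

```python
from urllib.parse import urlparse


def parse_dashboard_link(link):
    """Split a driver-proxy dashboard URL into its components.

    Assumed layout (inferred from the example URL, not documented):
    https://<host>/driver-proxy/o/<org_id>/<cluster_id>/<port>/status
    """
    parts = urlparse(link)
    segments = [s for s in parts.path.split("/") if s]
    if len(segments) < 5 or segments[0] != "driver-proxy" or segments[1] != "o":
        raise ValueError(f"unexpected dashboard link layout: {link!r}")
    return {
        "host": parts.netloc,
        "org_id": segments[2],       # assumed meaning of this segment
        "cluster_id": segments[3],   # assumed meaning of this segment
        "port": int(segments[4]),
    }
```

Calling `parse_dashboard_link(client.dashboard_link)` would then return a plain dictionary; a link that does not match the assumed layout raises `ValueError` instead of returning partial data.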
