Keras Image Models

Introduction

Keras Image Models (kimm) is a collection of image models, blocks and layers written in Keras 3. The goal is to offer SOTA models with pretrained weights in a user-friendly manner.

kimm is:

- 🚀 A model zoo where almost all models come with pre-trained weights on ImageNet.

  Note: The accuracy of the converted models is listed in results-imagenet.csv (timm) and at https://keras.io/api/applications/ (keras). The numerical differences of the converted models can be verified in tools/convert_*.py.

- ✨ Exposing a common API identical to the official keras.applications.*.

      model = kimm.models.RegNetY002(
          input_tensor: keras.KerasTensor = None,
          input_shape: typing.Optional[typing.Sequence[int]] = None,
          include_preprocessing: bool = True,
          include_top: bool = True,
          pooling: typing.Optional[str] = None,
          dropout_rate: float = 0.0,
          classes: int = 1000,
          classifier_activation: str = "softmax",
          weights: typing.Optional[str] = "imagenet",
          name: str = "RegNetY002",
      )

- 🔥 Integrated with feature extraction capability.

      from keras import random

      import kimm

      model = kimm.models.ConvNeXtAtto(feature_extractor=True)
      x = random.uniform([1, 224, 224, 3])
      y = model(x, training=False)
      # y becomes a dict
      for k, v in y.items():
          print(k, v.shape)

- 🧰 Providing APIs to export models to .tflite and .onnx.

      # in tensorflow backend
      from keras import backend

      import kimm

      backend.set_image_data_format("channels_last")
      model = kimm.models.MobileNet050V3Small()
      kimm.export.export_tflite(model, [224, 224, 3], "model.tflite")

      # in torch backend
      from keras import backend

      import kimm

      backend.set_image_data_format("channels_first")
      model = kimm.models.MobileNet050V3Small()
      kimm.export.export_onnx(model, [3, 224, 224], "model.onnx")
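The two export snippets pass the input shape in a different order because the image data format differs: [224, 224, 3] for channels_last and [3, 224, 224] for channels_first. The following is a minimal sketch of that relationship in plain Python. The helper name `input_shape_for` is hypothetical and is not part of kimm or Keras.

```python
def input_shape_for(data_format, height=224, width=224, channels=3):
    """Return the per-sample input shape (no batch axis) for a data format.

    Hypothetical helper for illustration only; not a kimm or Keras API.
    """
    if data_format == "channels_last":
        return [height, width, channels]
    if data_format == "channels_first":
        return [channels, height, width]
    raise ValueError(f"Unknown image data format: {data_format!r}")


print(input_shape_for("channels_last"))   # [224, 224, 3]
print(input_shape_for("channels_first"))  # [3, 224, 224]
```

Deriving the shape from the data format in one place avoids passing a shape that disagrees with the format set through backend.set_image_data_format.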

Installation

pip install keras kimm
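Keras 3 runs on top of a separately installed backend (TensorFlow, JAX, or PyTorch) and reads the KERAS_BACKEND environment variable when it is first imported. A minimal sketch of selecting the backend from Python, assuming PyTorch is the backend installed in your environment:

```python
import os

# Assumption: PyTorch is installed. Use "tensorflow" or "jax" instead if
# that is the backend available in your environment.
os.environ["KERAS_BACKEND"] = "torch"

# Keras reads this variable once, at import time, so it has to be set
# before the first `import keras` or `import kimm` in the process.
print(os.environ["KERAS_BACKEND"])
```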

Quickstart

Image Classification Using the Model Pretrained on ImageNet

    import keras
    from keras import ops
    from keras import utils
    from keras.applications.imagenet_utils import decode_predictions

    import kimm

    # Use `kimm.list_models` to get the list of available models
    print(kimm.list_models())

    # Specify the name and other arguments to filter the result
    print(kimm.list_models("vision_transformer", weights="imagenet"))  # fuzzy search

    # Initialize the model with pretrained weights
    model = kimm.models.VisionTransformerTiny16()
    image_size = (model._default_size, model._default_size)

    # Load an image as the model input
    image_path = keras.utils.get_file(
        "african_elephant.jpg", "https://i.imgur.com/Bvro0YD.png"
    )
    image = utils.load_img(image_path, target_size=image_size)
    image = utils.img_to_array(image)
    x = ops.convert_to_tensor(image)
    x = ops.expand_dims(x, axis=0)

    # Predict
    preds = model.predict(x)
    print("Predicted:", decode_predictions(preds, top=3)[0])

['ConvMixer1024D20', 'ConvMixer1536D20', 'ConvMixer736D32', 'ConvNeXtAtto', ...]

['VisionTransformerBase16', 'VisionTransformerBase32', 'VisionTransformerSmall16', ...]

1/1 ━━━━━━━━━━━━━━━━━━━━ 1s 1s/step

Predicted: [('n02504458', 'African_elephant', 0.6895825), ('n01871265', 'tusker', 0.17934209), ('n02504013', 'Indian_elephant', 0.12927249)]
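The last line of the output lists the three highest-scoring classes. The sketch below shows the idea of top-k selection over a probability vector in plain Python. It is not how decode_predictions is implemented. The function name `top_k` is hypothetical, the three elephant scores are copied from the output above, and the "other" entry is made-up padding.

```python
import heapq


def top_k(probabilities, labels, k=3):
    """Return the k (label, probability) pairs with the highest probability."""
    best = heapq.nlargest(
        k, range(len(probabilities)), key=probabilities.__getitem__
    )
    return [(labels[i], probabilities[i]) for i in best]


# Scores for the three elephant classes are taken from the output above;
# "other" and its score are hypothetical padding.
labels = ["Indian_elephant", "other", "African_elephant", "tusker"]
probabilities = [0.12927249, 0.00180292, 0.6895825, 0.17934209]

for label, p in top_k(probabilities, labels):
    print(f"{label}: {p:.4f}")
```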

An end-to-end example: fine-tuning an image classification model on a cats vs. dogs dataset

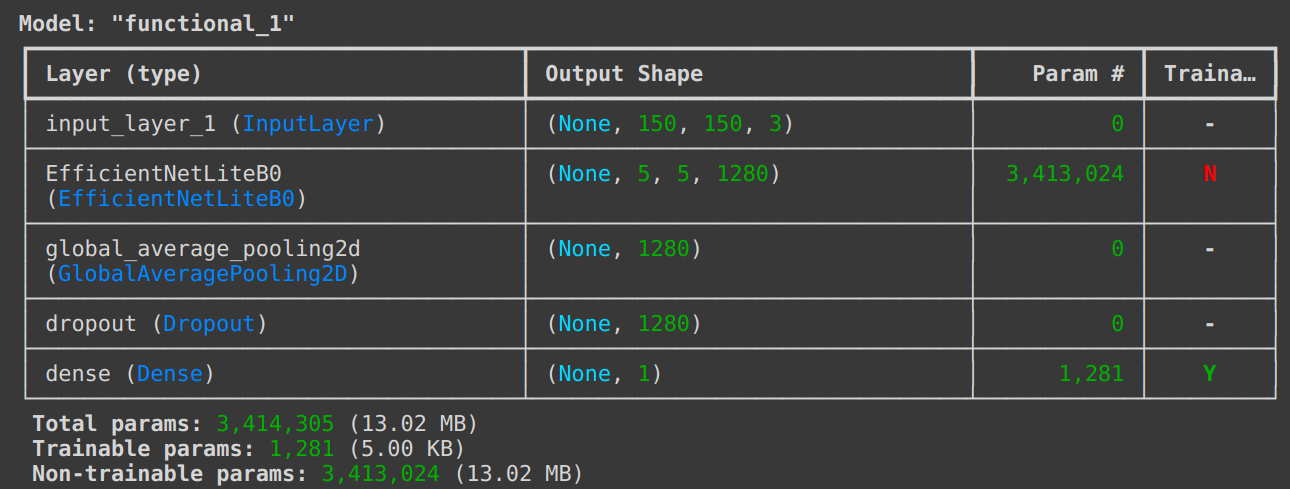

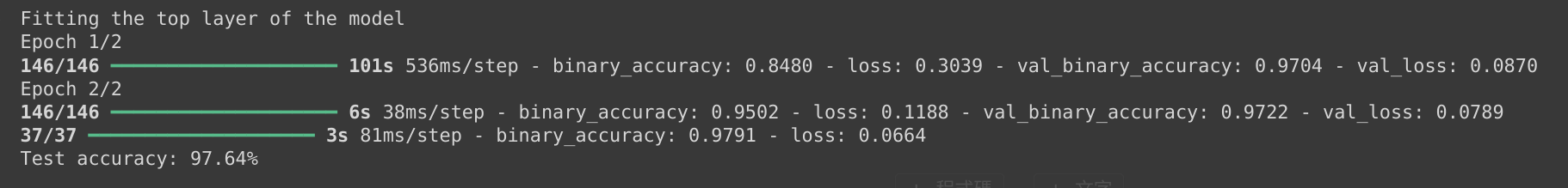

Using kimm.models.EfficientNetLiteB0:

Reference: Transfer learning & fine-tuning (keras.io)

Grad-CAM

Using kimm.models.MobileViTS:

Reference: Grad-CAM class activation visualization (keras.io)

Model Zoo

| Model | Paper | Weights are ported from | API |
|---|---|---|---|
| ConvMixer | ICLR 2022 Submission | timm | kimm.models.ConvMixer* |
| ConvNeXt | CVPR 2022 | timm | kimm.models.ConvNeXt* |
| DenseNet | CVPR 2017 | timm | kimm.models.DenseNet* |
| EfficientNet | ICML 2019 | timm | kimm.models.EfficientNet* |
| EfficientNetLite | ICML 2019 | timm | kimm.models.EfficientNetLite* |
| EfficientNetV2 | ICML 2021 | timm | kimm.models.EfficientNetV2* |
| GhostNet | CVPR 2020 | timm | kimm.models.GhostNet* |
| GhostNetV2 | NeurIPS 2022 | timm | kimm.models.GhostNetV2* |
| InceptionV3 | CVPR 2016 | timm | kimm.models.InceptionV3 |
| LCNet | arXiv 2021 | timm | kimm.models.LCNet* |
| MobileNetV2 | CVPR 2018 | timm | kimm.models.MobileNetV2* |
| MobileNetV3 | ICCV 2019 | timm | kimm.models.MobileNetV3* |
| MobileViT | ICLR 2022 | timm | kimm.models.MobileViT* |
| RegNet | CVPR 2020 | timm | kimm.models.RegNet* |
| ResNet | CVPR 2015 | timm | kimm.models.ResNet* |
| TinyNet | NeurIPS 2020 | timm | kimm.models.TinyNet* |
| VGG | ICLR 2015 | timm | kimm.models.VGG* |
| ViT | ICLR 2021 | timm | kimm.models.VisionTransformer* |
| Xception | CVPR 2017 | keras | kimm.models.Xception |

The export scripts can be found in tools/convert_*.py.
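The API column of the table uses wildcard patterns such as kimm.models.ConvMixer*. As an illustration of how such a pattern maps to concrete class names, the sketch below applies shell-style matching from the standard library to the names shown in the kimm.list_models() output in the Quickstart. The helper `match_api` is hypothetical, and this is not how kimm resolves or filters model names.

```python
from fnmatch import fnmatchcase

# Names copied from the `kimm.list_models()` output shown in the Quickstart.
names = [
    "ConvMixer1024D20",
    "ConvMixer1536D20",
    "ConvMixer736D32",
    "ConvNeXtAtto",
    "VisionTransformerBase16",
    "VisionTransformerBase32",
    "VisionTransformerSmall16",
]


def match_api(pattern, names):
    """Return the names matching a table entry such as 'kimm.models.ConvMixer*'.

    Hypothetical helper for illustration only; not a kimm API.
    """
    # Keep only the class-name part after the last dot, e.g. "ConvMixer*".
    class_pattern = pattern.rsplit(".", 1)[-1]
    return [name for name in names if fnmatchcase(name, class_pattern)]


print(match_api("kimm.models.ConvMixer*", names))
print(match_api("kimm.models.VisionTransformer*", names))
```

Read as globs, some rows overlap: a pattern like EfficientNet* would also match names that start with EfficientNetLite or EfficientNetV2.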

License

Please refer to timm as this project is built upon it.

kimm Code

The code here is licensed under Apache 2.0.

Acknowledgements

Thanks for these awesome projects that were used in kimm

Citing

BibTeX

    @misc{rw2019timm,
      author = {Ross Wightman},
      title = {PyTorch Image Models},
      year = {2019},
      publisher = {GitHub},
      journal = {GitHub repository},
      doi = {10.5281/zenodo.4414861},
      howpublished = {\url{https://github.com/rwightman/pytorch-image-models}}
    }

    @misc{hy2024kimm,
      author = {Hongyu Chiu},
      title = {Keras Image Models},
      year = {2024},
      publisher = {GitHub},
      journal = {GitHub repository},
      howpublished = {\url{https://github.com/james77777778/kimm}}
    }
