The most pythonic LLM application building experience


Prompt Engineering focused on:

| Focus | | Benefit |
|---|---|---|
| Simplicity through idiomatic syntax | → | Faster and more reliable releases |
| Semi-opinionated methods | → | Reduced complexity that speeds up development |
| Reliability through validation | → | More robust applications with fewer bugs |
| High-quality, up-to-date documentation | → | Trust and reliability |

Documentation: https://docs.mirascope.io

Source Code: https://github.com/Mirascope/mirascope

Mirascope is a library purpose-built for Prompt Engineering on top of Pydantic 2.0:

- Prompts can live as self-contained classes in their own directory:

# prompts/book_recommendation.py
from mirascope import Prompt


class BookRecommendationPrompt(Prompt):
    """
    I've recently read the following books: {titles_in_quotes}.
    What should I read next?
    """

    book_titles: list[str]

    @property
    def titles_in_quotes(self) -> str:
        """Returns a comma separated list of book titles each in quotes."""
        return ", ".join([f'"{title}"' for title in self.book_titles])

- Use the prompt anywhere without worrying about internals such as formatting:

# script.py
from prompts.book_recommendation import BookRecommendationPrompt

prompt = BookRecommendationPrompt(
    book_titles=["The Name of the Wind", "The Lord of the Rings"]
)

print(str(prompt))
#> I've recently read the following books: "The Name of the Wind", "The Lord of the Rings".
#> What should I read next?

print(prompt.messages)
#> [('user', 'I\'ve recently read the following books: "The Name of the Wind", "The Lord of the Rings".\nWhat should I read next?')]
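To make the mechanics above less magical, here is a minimal stdlib-only sketch of how a docstring-as-template class could behave. It is not mirascope's implementation: `MiniPrompt` and `_AttributeLookup` are hypothetical names, there is no Pydantic validation, and it assumes the template is simply the dedented docstring. It should produce the same text as the script.py example above.

```python
import inspect


class _AttributeLookup:
    """Hypothetical helper: maps template placeholder names to attributes."""

    def __init__(self, obj: object) -> None:
        self._obj = obj

    def __getitem__(self, key: str) -> object:
        return getattr(self._obj, key)


class MiniPrompt:
    """Hypothetical, stdlib-only stand-in for mirascope's Prompt."""

    def __init__(self, **fields: object) -> None:
        for key, value in fields.items():
            setattr(self, key, value)

    @classmethod
    def template(cls) -> str:
        # The subclass docstring, dedented and stripped of blank edges, is the template.
        return inspect.cleandoc(cls.__doc__ or "")

    def __str__(self) -> str:
        # format_map only looks up the placeholders that appear in the template.
        return self.template().format_map(_AttributeLookup(self))

    @property
    def messages(self) -> list[tuple[str, str]]:
        # Without role markers, the whole prompt is a single user message.
        return [("user", str(self))]


class BookRecommendationPrompt(MiniPrompt):
    """
    I've recently read the following books: {titles_in_quotes}.
    What should I read next?
    """

    @property
    def titles_in_quotes(self) -> str:
        """Returns a comma separated list of book titles each in quotes."""
        return ", ".join(f'"{title}"' for title in self.book_titles)


prompt = BookRecommendationPrompt(
    book_titles=["The Name of the Wind", "The Lord of the Rings"]
)
print(prompt)
print(prompt.messages)
```

Using `format_map` with a lookup object (rather than building a dict of every attribute up front) means properties are only evaluated when the template actually references them.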

Why use Mirascope?

- Intuitive: Editor support that you expect (e.g. autocompletion, inline errors).

- Peace of Mind: Pydantic together with our Prompt CLI helps eliminate prompt-related bugs.

- Durable: Seamlessly customize and extend functionality. Never maintain a fork.

- Integration: Easily integrate with JSON Schema and other tools such as FastAPI.

- Convenience: Clean, elegant, and delightful tooling that you don't need to maintain.

- Open: Dedication to building open-source tools you can use with your choice of LLM.

Requirements

Pydantic is the only strict requirement, which will be included automatically during installation.

The Prompt CLI and LLM Convenience Wrappers have additional requirements, which you can opt in to include if you're using those features.

Installation

Install Mirascope and start building with LLMs in minutes.

$ pip install mirascope

This will install the mirascope package along with pydantic.

To include extra dependencies, run:

$ pip install mirascope[cli]     # Prompt CLI
$ pip install mirascope[openai]  # LLM Convenience Wrappers
$ pip install mirascope[all]     # All Extras

For those using zsh, you'll need to escape brackets:

$ pip install mirascope\[all\]

🚨 Warning: Strong Opinion 🚨

Prompt Engineering is engineering. Beyond basic illustrative examples, prompting quickly becomes complex. Separating prompts from the engineering workflow will only limit what you can build with LLMs. We firmly believe that prompts are far more than "just f-strings" and therefore require developer tools purpose-built to make writing these more complex prompts as easy as possible.

Examples

The Prompt class is an extension of Pydantic's BaseModel with some additional built-in convenience tooling for writing and formatting your prompts:

from mirascope import Prompt


class GreetingsPrompt(Prompt):
    """
    Hello! It's nice to meet you. My name is {name}. How are you today?
    """

    name: str


prompt = GreetingsPrompt(name="William Bakst")

print(GreetingsPrompt.template())
#> Hello! It's nice to meet you. My name is {name}. How are you today?

print(prompt)
#> Hello! It's nice to meet you. My name is William Bakst. How are you today?

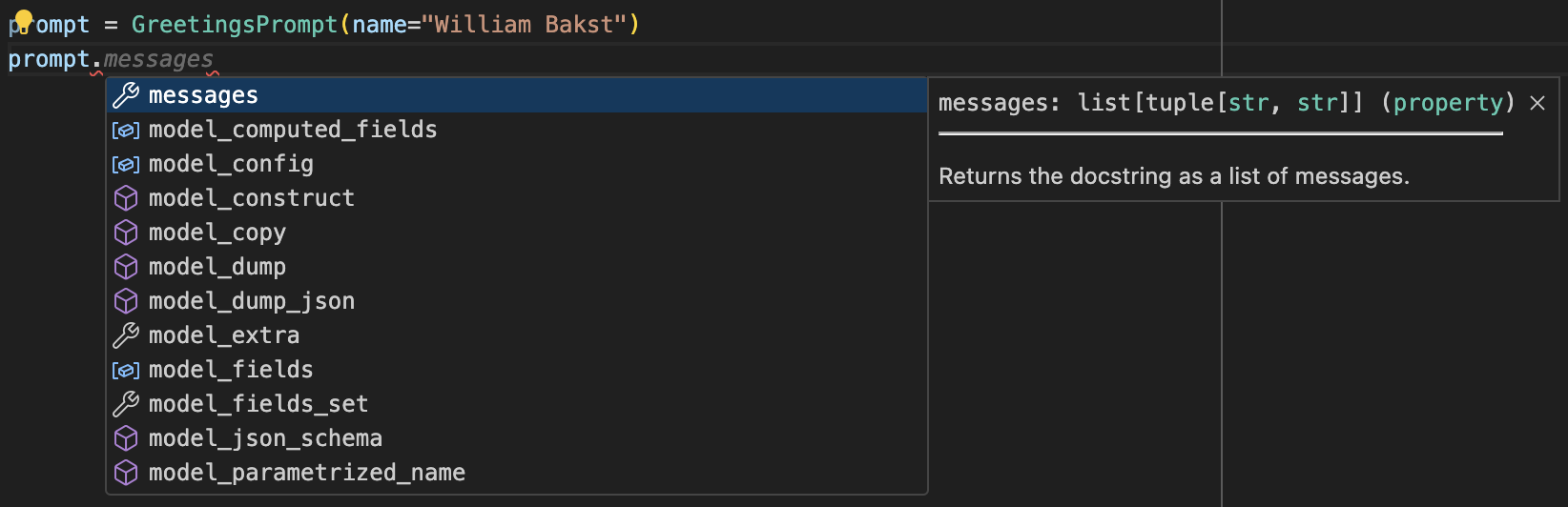

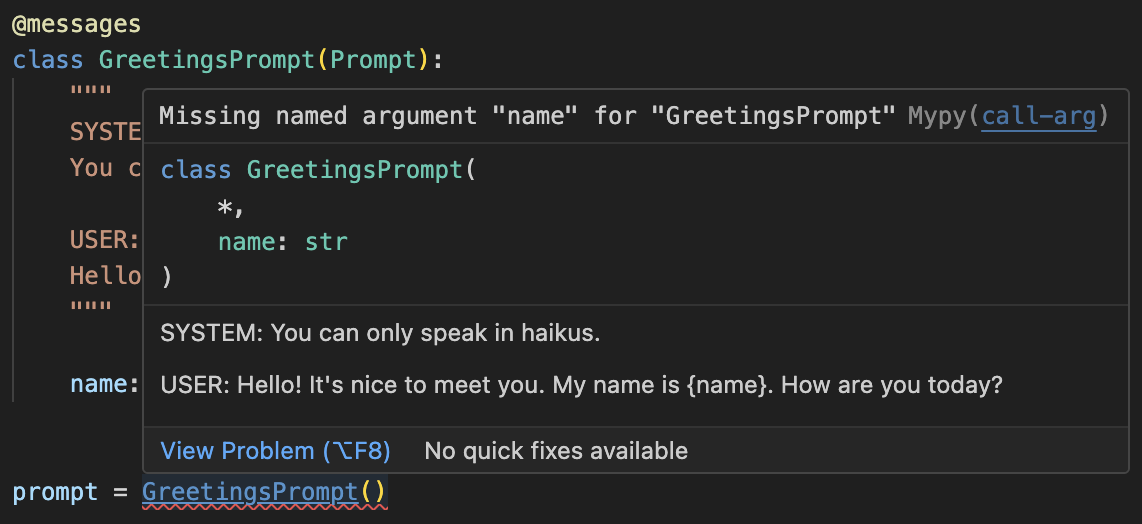

Example of autocomplete and inline errors:

- Autocomplete:

- Inline Errors:

You can access the docstring prompt template through the GreetingsPrompt.template() class method, which automatically removes additional special characters such as newlines. This lets you write longer prompts that still adhere to the style of your codebase:

class GreetingsPrompt(Prompt):
    """
    Salutations! It is a lovely day. Wouldn't you agree? I find that lovely days
    such as these brighten up my mood quite a bit. I'm rambling...

    My name is {name}. It's great to meet you.
    """

    name: str


prompt = GreetingsPrompt(name="William Bakst")

print(prompt)
#> Salutations! It is a lovely day. Wouldn't you agree? I find that lovely days such as these brighten up my mood quite a bit. I'm rambling...
#> My name is William Bakst. It's great to meet you.
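One plausible cleanup rule that is consistent with the output above: dedent the docstring, join the wrapped lines within each paragraph with spaces, and put each paragraph on its own line. This is an assumption about the behavior, not the library's code; `clean_template` is a hypothetical helper built on the standard library's `inspect.cleandoc`.

```python
import inspect


def clean_template(docstring: str) -> str:
    """Hypothetical cleanup: unwrap lines inside a paragraph, keep paragraph breaks."""
    # Remove the common indentation plus leading/trailing blank lines.
    text = inspect.cleandoc(docstring)
    # Blank lines separate paragraphs.
    paragraphs = [p for p in text.split("\n\n") if p.strip()]
    # Join each paragraph's wrapped lines with single spaces,
    # then put each paragraph on its own line.
    return "\n".join(
        " ".join(line.strip() for line in paragraph.splitlines() if line.strip())
        for paragraph in paragraphs
    )


DOCSTRING = """
    Salutations! It is a lovely day. Wouldn't you agree? I find that lovely days
    such as these brighten up my mood quite a bit. I'm rambling...

    My name is {name}. It's great to meet you.
    """

result = clean_template(DOCSTRING).format(name="William Bakst")
print(result)
```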

The str method only formats the properties that appear in the template. This enables writing more complex properties that rely on one or more provided fields:

class GreetingsPrompt(Prompt):
    """
    Hi! My name is {formatted_name}. {name_specific_remark}
    What's your name? Is your name also {name_specific_question}?
    """

    name: str

    @property
    def formatted_name(self) -> str:
        """Returns `name` with pizzazz."""
        return f"⭐{self.name}⭐"

    @property
    def name_specific_question(self) -> str:
        """Returns a question based on `name`."""
        # Compare case-insensitively so that "Bob" counts as a palindrome.
        if self.name.lower() == self.name.lower()[::-1]:
            return "a palindrome"
        else:
            return "not a palindrome"

    @property
    def name_specific_remark(self) -> str:
        """Returns a remark based on `name`."""
        return f"Can you believe my name is {self.name_specific_question}?"


prompt = GreetingsPrompt(name="Bob")

print(prompt)
#> Hi! My name is ⭐Bob⭐. Can you believe my name is a palindrome?
#> What's your name? Is your name also a palindrome?

Notice that writing properties this way ties prompt-specific logic directly to the prompt. From the perspective of someone using the GreetingsPrompt class, that logic happens under the hood: constructing the prompt only requires name.
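A stdlib-only sketch of the "only format what is templated" idea described above: `string.Formatter().parse` lists the placeholder names in a template, so only those attributes are ever read. `render` and `Greeting` are hypothetical illustrations, not part of mirascope, and the sketch handles only plain names (no `{a.b}` or `{a[0]}` fields).

```python
import string


def render(template: str, obj: object) -> str:
    """Format `template`, reading only the attributes it names from `obj`."""
    # parse() yields (literal_text, field_name, format_spec, conversion) tuples;
    # field_name is None for trailing literal text.
    names = {field for _, field, _, _ in string.Formatter().parse(template) if field}
    return template.format(**{name: getattr(obj, name) for name in names})


class Greeting:
    """Hypothetical prompt-like class used only for this illustration."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.evaluated: list[str] = []  # records which properties were read

    @property
    def formatted_name(self) -> str:
        self.evaluated.append("formatted_name")
        return f"⭐{self.name}⭐"

    @property
    def unused(self) -> str:
        # Not referenced by the template below, so render() never reads it.
        self.evaluated.append("unused")
        raise RuntimeError("this property should not be evaluated")


greeting = Greeting("Bob")
text = render("Hi! My name is {formatted_name}.", greeting)
print(text)
print(greeting.evaluated)
```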

For writing prompts with multiple messages with different roles, you can use the messages decorator to extend the functionality of the messages property:

from mirascope import Prompt, messages


@messages
class GreetingsPrompt(Prompt):
    """
    SYSTEM:
    You can only speak in haikus.

    USER:
    Hello! It's nice to meet you. My name is {name}. How are you today?
    """

    name: str


prompt = GreetingsPrompt(name="William Bakst")

print(GreetingsPrompt.template())
#> SYSTEM: You can only speak in haikus.
#> USER: Hello! It's nice to meet you. My name is {name}. How are you today?

print(prompt)
#> SYSTEM: You can only speak in haikus.
#> USER: Hello! It's nice to meet you. My name is William Bakst. How are you today?

print(prompt.messages)
#> [('system', 'You can only speak in haikus.'), ('user', "Hello! It's nice to meet you. My name is William Bakst. How are you today?")]

Without the decorator, the base Prompt class still has the messages property, but it returns a list containing a single user message.
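As a rough sketch of how role markers could be turned into the `(role, content)` tuples shown above, assume markers are the uppercase words `SYSTEM:`, `USER:`, or `ASSISTANT:` at the start of a line (`ASSISTANT` is an assumption; the source only shows `SYSTEM` and `USER`). `parse_messages` is a hypothetical helper, not the decorator's actual code. Like the base class, it falls back to a single user message when no markers are present.

```python
import re

# Assumed marker syntax: an uppercase role name and a colon at the start of a line.
ROLE_MARKER = re.compile(r"^(SYSTEM|USER|ASSISTANT):", re.MULTILINE)


def parse_messages(text: str) -> list[tuple[str, str]]:
    """Split formatted prompt text into (role, content) tuples."""
    # With one capture group, re.split returns
    # [text_before_first_marker, role1, body1, role2, body2, ...].
    parts = ROLE_MARKER.split(text)
    # Text before the first marker is ignored in this sketch.
    messages = [
        (role.lower(), body.strip())
        for role, body in zip(parts[1::2], parts[2::2])
    ]
    if not messages and text.strip():
        # No markers at all: treat everything as a single user message.
        messages = [("user", text.strip())]
    return messages


formatted = (
    "SYSTEM: You can only speak in haikus.\n"
    "USER: Hello! It's nice to meet you. My name is William Bakst. How are you today?"
)
print(parse_messages(formatted))
```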

Remember: this is Python

There's nothing stopping you from doing things however you'd like. For example, reclaim the docstring:

from mirascope import Prompt

TEMPLATE = """
This is now my prompt template for {topic}
"""


class NormalDocstringPrompt(Prompt):
    """This is now just a normal docstring."""

    topic: str

    @classmethod
    def template(cls) -> str:
        """Returns this prompt's template."""
        return TEMPLATE


prompt = NormalDocstringPrompt(topic="prompts")

print(prompt)
#> This is now my prompt template for prompts

Since `Prompt`'s `str` method uses `template()`, the above works as expected.
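Why overriding `template` is enough can be shown with plain Python: if a base class's `__str__` always goes through `self.template()`, method resolution picks the subclass's override. `BasePrompt` and `DocstringPrompt` below are hypothetical stand-ins, not mirascope's `Prompt`.

```python
TEMPLATE = """
This is now my prompt template for {topic}
"""


class BasePrompt:
    """Hypothetical base class whose __str__ always goes through template()."""

    def __init__(self, **fields: str) -> None:
        self.__dict__.update(fields)

    @classmethod
    def template(cls) -> str:
        # Default behaviour: the class docstring is the template.
        return cls.__doc__ or ""

    def __str__(self) -> str:
        # self.template() dispatches to the most-derived override.
        return self.template().strip().format(**vars(self))


class DocstringPrompt(BasePrompt):
    """Tell me about {topic}."""


class NormalDocstringPrompt(BasePrompt):
    """This is now just a normal docstring."""

    @classmethod
    def template(cls) -> str:
        return TEMPLATE


print(DocstringPrompt(topic="prompts"))
print(NormalDocstringPrompt(topic="prompts"))
```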

Because the Prompt class is built on top of BaseModel, prompts easily integrate with tools like FastAPI:

FastAPI Example

import os

from fastapi import FastAPI
from mirascope import OpenAIChat

from prompts import GreetingsPrompt

app = FastAPI()


@app.post("/greetings")
def root(prompt: GreetingsPrompt) -> str:
    """Returns an AI generated greeting."""
    model = OpenAIChat(api_key=os.environ["OPENAI_API_KEY"])
    return str(model.create(prompt))
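Because the endpoint takes a single Pydantic model as its body, FastAPI expects the model's fields as a top-level JSON object. Below is a stdlib-only sketch of building such a request. The URL assumes the app is served locally on uvicorn's default host and port, and the module name `main` in the comment is hypothetical.

```python
import json
import urllib.request

# Hypothetical local address (uvicorn's default host and port).
URL = "http://127.0.0.1:8000/greetings"

# The request body is just the GreetingsPrompt fields as JSON.
payload = {"name": "William Bakst"}

request = urllib.request.Request(
    URL,
    data=json.dumps(payload).encode("utf-8"),
    headers={"Content-Type": "application/json"},
    method="POST",
)

# Uncomment to send once the app is running (e.g. `uvicorn main:app`):
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```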

You can also use the Prompt class with whichever LLM you want to use:

Mistral Example

import os

from mistralai.client import MistralClient
from mistralai.models.chat_completion import ChatMessage

from prompts import GreetingsPrompt

client = MistralClient(api_key=os.environ["MISTRAL_API_KEY"])
prompt = GreetingsPrompt(name="William Bakst")
messages = [
    ChatMessage(role=role, content=content)
    for role, content in prompt.messages
]

# No streaming
chat_response = client.chat(
    model="mistral-tiny",
    messages=messages,
)

OpenAI Example

import os

from openai import OpenAI

from prompts import GreetingsPrompt

client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
prompt = GreetingsPrompt(name="William Bakst")
messages = [
    {"role": role, "content": content}
    for role, content in prompt.messages
]

completion = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=messages,
)

Dive Deeper

- Check out all of the possibilities of what you can do with Pydantic Prompts.

- Take a look at our Mirascope CLI for a semi-opinionated prompt management system.

- For convenience, we provide some wrappers around the OpenAI Python SDK for common tasks such as creation, streaming, tools, and extraction. We've found that the concepts covered in LLM Convenience Wrappers make building LLM-powered apps delightful.

- The API Reference contains full details on all classes, methods, functions, etc.

- You can take a look at code examples in the repo that demonstrate how to use the library effectively.

Contributing

Mirascope welcomes contributions from the community! See the contribution guide for more information on the development workflow. For bugs and feature requests, visit our GitHub Issues and check out our templates.

How To Help

Any and all help is greatly appreciated! Check out our page on how you can help.

Roadmap (What's on our mind)

- Better DX for Mirascope CLI (e.g. autocomplete)

- Functions as OpenAI tools

- Better chat history

- Testing for prompts

- Logging prompts and their responses

- Evaluating prompt quality

- RAG

- Agents

Versioning

Mirascope uses Semantic Versioning.

License

This project is licensed under the terms of the MIT License.


Hashes for mirascope-0.2.2-py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | 00f2d59107a695e22ef132ad6890d3ee7f62b2fc226166b831bc1a634fb2de7d |
| MD5 | 905cb0dda9d53cd2f69c3d65b2f9f895 |
| BLAKE2b-256 | aa724a11d9bcb5617a02546e29f391f708bb0a346afc81ebc73caeab7fcba2bd |