SpKit: Signal Processing toolkit | Nikesh Bajaj


Signal Processing toolkit

Links: Homepage | Documentation | GitHub | PyPI project | Installation: pip install spkit

For up-to-date documentation, check GitHub or the Documentation.


New Updates

Version: 0.0.9.3

The following functionality was added in version 0.0.9.3:

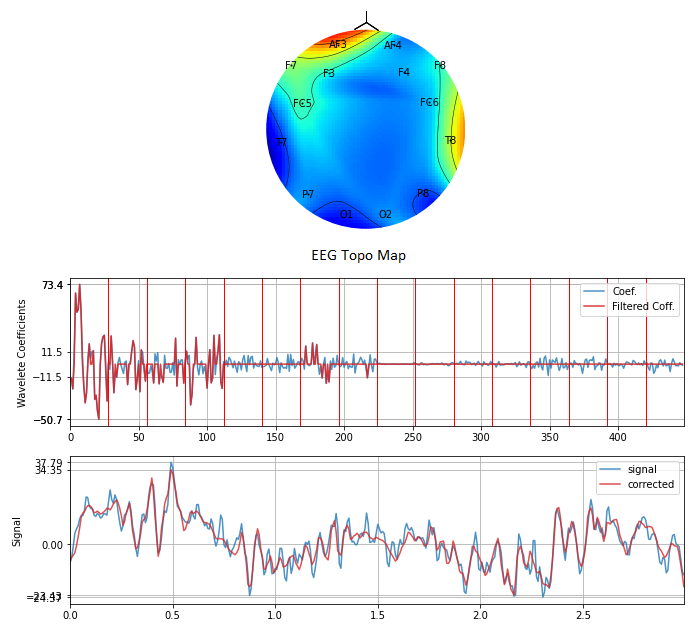

- ATAR algorithm for EEG artifact removal: Automatic and Tunable Artifact Removal algorithm for EEG, from the article

- ICA-based artifact removal algorithm

- Basic filtering, wavelet filtering, EEG signal processing techniques

- Spectral, sample, approximate and SVD entropy functions
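As a rough illustration of one of these measures, spectral entropy is commonly defined as the Shannon entropy of the normalized power spectrum. The sketch below assumes that definition, a one-sided spectrum (bins 0 to N/2) and log base 2; the function `spectral_entropy` is hypothetical, and spkit's own spectral entropy function may normalize or select frequencies differently.

```python
import cmath
import math


# Hypothetical sketch of spectral entropy, not spkit's API
def spectral_entropy(x, normalize=False):
    """Shannon entropy (bits) of the normalized one-sided power spectrum of x."""
    n = len(x)
    # Direct DFT for bins 0..n//2 (O(n^2), fine for a small illustration)
    power = []
    for k in range(n // 2 + 1):
        X_k = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        power.append(abs(X_k) ** 2)
    total = sum(power)
    probs = [p / total for p in power]
    # Shannon entropy, skipping empty bins (0 * log 0 is taken as 0)
    h = -sum(p * math.log2(p) for p in probs if p > 0)
    if normalize:
        # Divide by the maximum possible entropy (all bins equally likely)
        h /= math.log2(len(probs))
    return h


N = 64
one_tone = [math.cos(2 * math.pi * 4 * t / N) for t in range(N)]
two_tones = [math.cos(2 * math.pi * 4 * t / N) + math.cos(2 * math.pi * 8 * t / N)
             for t in range(N)]
print(spectral_entropy(one_tone))   # close to 0 bits: a single spectral peak
print(spectral_entropy(two_tones))  # close to 1 bit: two equal peaks
```

A pure tone concentrates its power in one bin and gives low spectral entropy, while broadband signals spread power over many bins and give high values.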

Version: 0.0.9.2

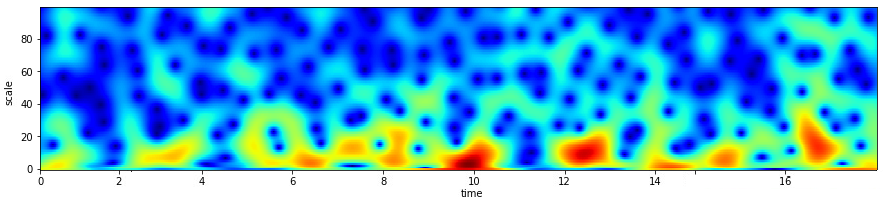

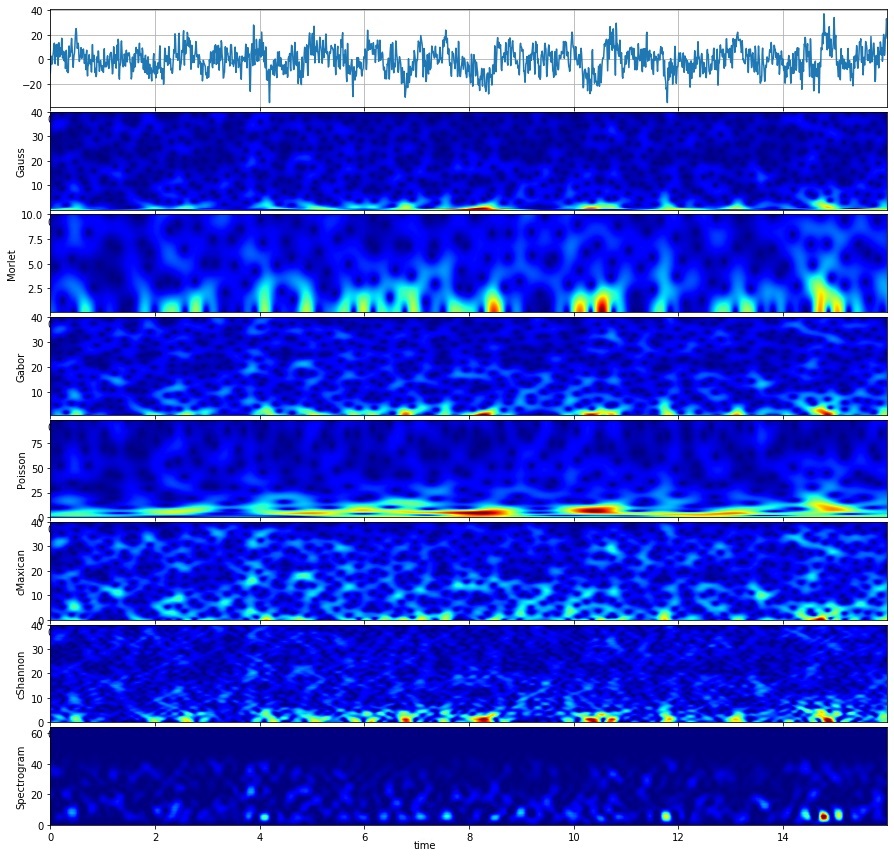

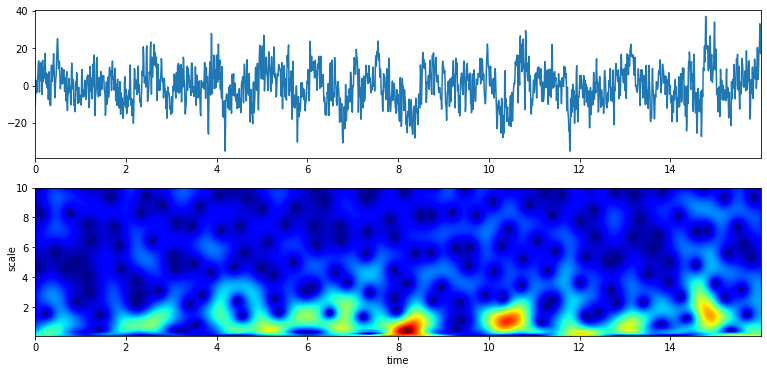

- Added Scalogram with CWT functions

Version: 0.0.9.1

- Fixed the import error with Python 2.7

- Multiclass logistic regression

- Updated examples for version 0.0.9 | View Notebooks | Run all the examples with

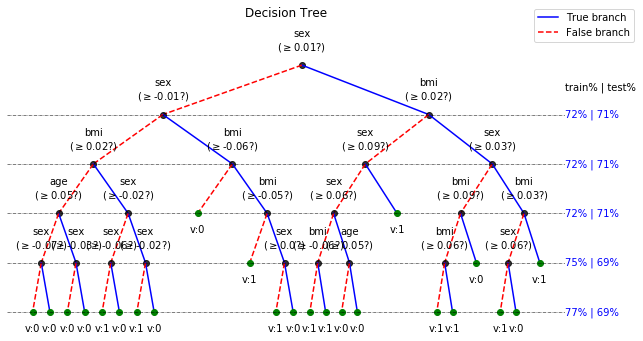

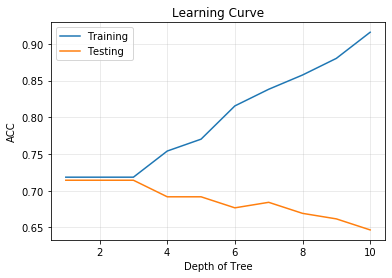

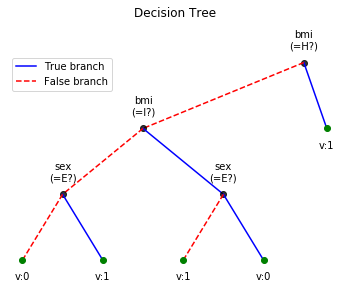

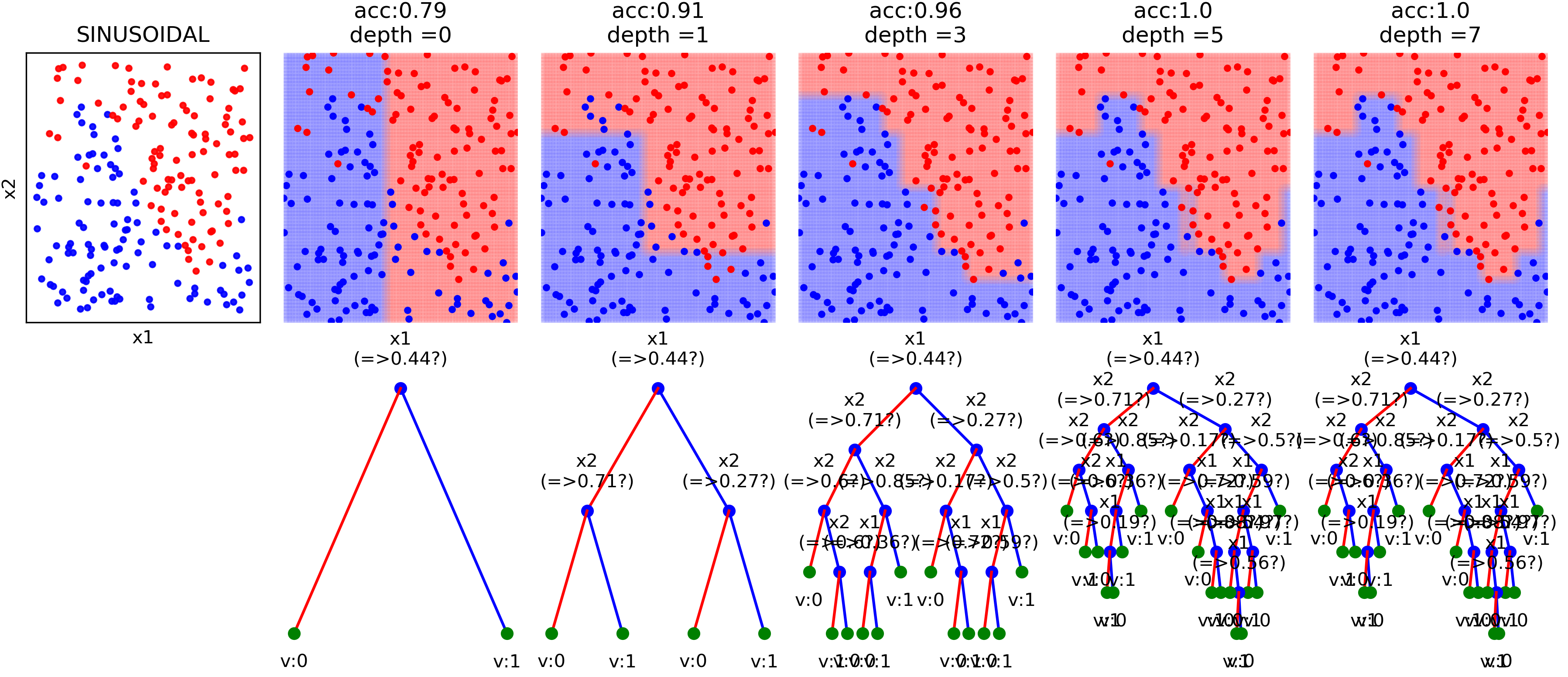

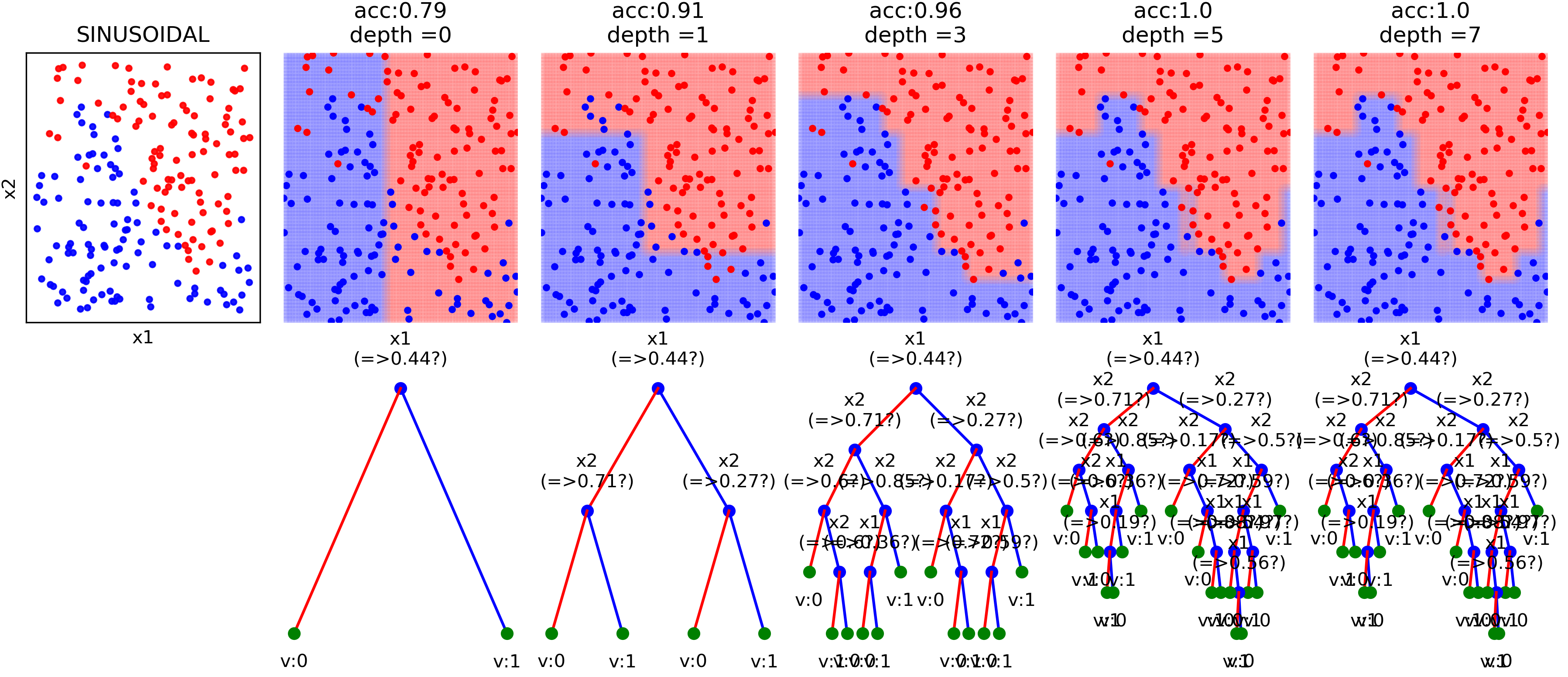

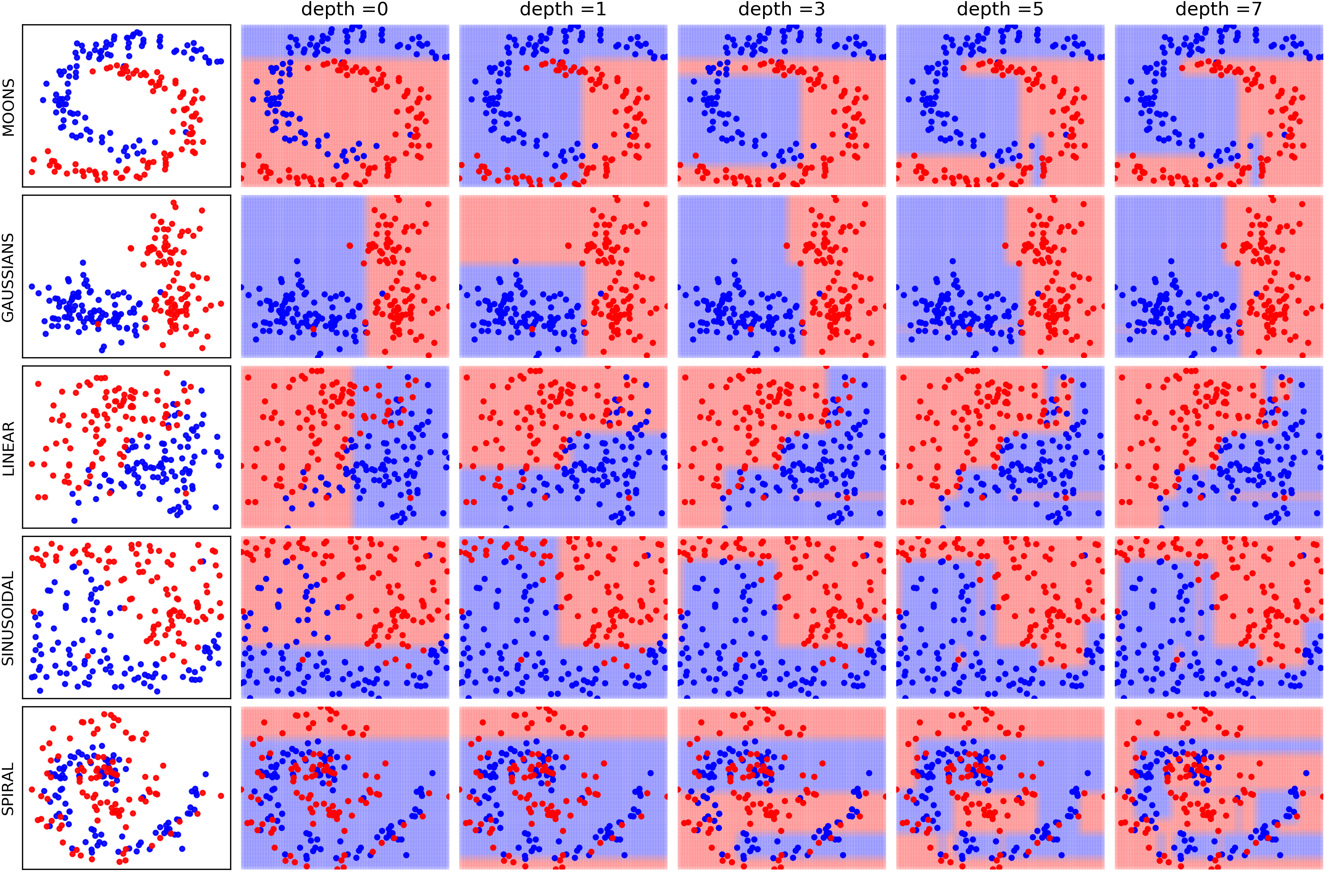

New Updates: Decision Tree | View Notebooks

Version: 0.0.7

- Analysing the performance of a trained tree at different depths, with one-time training only

- Optimizing the depth of the tree

- Shrinking the trained tree to the optimal depth

- Plotting the learning curve

- Classification: computing the probability and counts of each label at a leaf for a given example

- Regression: computing the standard deviation and number of training samples at a leaf for a given example

- Version 0.0.6: works with categorical features without converting them into binary vectors

- Version 0.0.5: toy examples to understand the effect of increasing max_depth of a decision tree
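The leaf statistics mentioned above can be illustrated with a minimal sketch. The helpers `classification_leaf` and `regression_leaf` are hypothetical, not spkit's API; they assume the leaf keeps the labels or targets of the training samples that reached it, and the regression helper uses the population standard deviation, which may not be the estimator spkit uses.

```python
import math
from collections import Counter


# Hypothetical helpers, not spkit's API
def classification_leaf(labels):
    """Label counts and probabilities for the training samples that reached a leaf."""
    counts = Counter(labels)
    n = len(labels)
    probs = {label: c / n for label, c in counts.items()}
    return dict(counts), probs


def regression_leaf(targets):
    """Mean prediction, standard deviation and sample count at a leaf."""
    n = len(targets)
    mean = sum(targets) / n
    std = math.sqrt(sum((y - mean) ** 2 for y in targets) / n)  # population std
    return mean, std, n


counts, probs = classification_leaf(['a', 'a', 'b', 'a'])
print(counts, probs)  # {'a': 3, 'b': 1} {'a': 0.75, 'b': 0.25}
mean, std, n = regression_leaf([1.0, 2.0, 3.0, 4.0])
print(mean, std, n)
```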

Installation

Requirements: numpy, matplotlib, scipy.stats, scikit-learn

with pip

pip install spkit

update with pip

pip install spkit --upgrade

Build from source

Download the repository or clone it with git, cd into the directory, and build from source with

python setup.py install

Functions list

Signal Processing Techniques

Information Theory functions

For real-valued signals

- Entropy : Shannon entropy, Rényi entropy of order α, Collision entropy, Spectral Entropy, Approximate Entropy, Sample Entropy

- Joint entropy

- Conditional entropy

- Mutual Information

- Cross entropy

- Kullback–Leibler divergence

- Computation of optimal bin size for histogram using FD-rule

- Plot histogram with optimal bin size
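A stdlib-only sketch of three ideas from this list: the Freedman-Diaconis (FD) rule, which sets the histogram bin width to 2 * IQR * n^(-1/3); a histogram built with that width; and the Rényi entropy of order α, where α = 1 reduces to Shannon entropy and α = 2 to collision entropy. All function names are hypothetical and this is not spkit's implementation; its entropy functions may differ in log base, binning and edge handling.

```python
import math
import random
import statistics


# Hypothetical sketch, not spkit's API
def fd_bin_width(x):
    """Freedman-Diaconis rule: h = 2 * IQR * n^(-1/3)."""
    q1, _, q3 = statistics.quantiles(x, n=4, method='inclusive')
    return 2 * (q3 - q1) * len(x) ** (-1 / 3)


def histogram_probs(x, width):
    """Bin x into equal-width bins and return the non-empty bin probabilities."""
    lo = min(x)
    n_bins = max(1, math.ceil((max(x) - lo) / width))
    counts = [0] * n_bins
    for v in x:
        idx = min(int((v - lo) / width), n_bins - 1)  # put max(x) in the last bin
        counts[idx] += 1
    return [c / len(x) for c in counts if c > 0]


def renyi_entropy(p, alpha=1):
    """Rényi entropy in bits; alpha=1 gives Shannon, alpha=2 collision entropy."""
    if alpha == 1:
        return -sum(pi * math.log2(pi) for pi in p)
    return math.log2(sum(pi ** alpha for pi in p)) / (1 - alpha)


random.seed(0)
x = [random.random() for _ in range(10000)]
p = histogram_probs(x, fd_bin_width(x))
print(len(p), renyi_entropy(p, 1), renyi_entropy(p, 2))
```

For a fixed distribution, Rényi entropy does not increase with α, so the collision entropy printed last is at most the Shannon entropy.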

Matrix Decomposition

- SVD

- ICA using InfoMax, Extended-InfoMax, FastICA & Picard

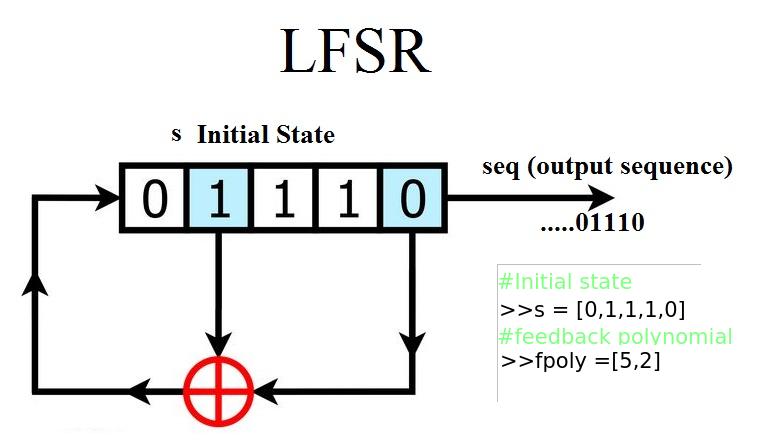

Linear Feedback Shift Register

- pylfsr

Continuous Wavelet Transform

- Gauss wavelet

- Morlet wavelet

- Gabor wavelet

- Poisson wavelet

- Mexican hat wavelet

- Shannon wavelet
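For reference, two of these wavelets in common textbook form: the complex Morlet wavelet (here without the small correction term, so its mean is only approximately zero) and the Mexican hat, plus the scaled and shifted daughter wavelet ψ((t - b)/a)/√a that a CWT correlates with the signal. spkit's `ScalogramCWT` parameterizes its wavelets through arguments such as `f0` and `Q`, so its definitions and normalization may differ; the functions below are hypothetical and only check unit energy numerically.

```python
import cmath
import math


# Hypothetical textbook definitions, not spkit's API
def morlet(t, w0=5.0):
    """Complex Morlet wavelet (without the small admissibility correction term)."""
    return math.pi ** -0.25 * cmath.exp(1j * w0 * t) * math.exp(-t * t / 2)


def mexican_hat(t):
    """Mexican hat (Ricker) wavelet: normalized negative second derivative of a Gaussian."""
    return 2 / (math.sqrt(3) * math.pi ** 0.25) * (1 - t * t) * math.exp(-t * t / 2)


def scaled(psi, t, a, b):
    """Daughter wavelet psi((t - b) / a) / sqrt(a) at scale a and shift b."""
    return psi((t - b) / a) / math.sqrt(a)


# Riemann sums on [-10, 10] approximate the integrals
dt = 0.01
grid = [k * dt for k in range(-1000, 1001)]
energy_morlet = sum(abs(morlet(t)) ** 2 for t in grid) * dt
energy_mexh = sum(mexican_hat(t) ** 2 for t in grid) * dt
mean_mexh = sum(mexican_hat(t) for t in grid) * dt
# Scaling by 1/sqrt(a) keeps the daughter wavelet's energy equal to 1
energy_scaled = sum(scaled(mexican_hat, t, 1.5, 0.0) ** 2 for t in grid) * dt
print(energy_morlet, energy_mexh, mean_mexh, energy_scaled)
```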

Discrete Wavelet Transform

- Wavelet filtering

- Wavelet Packet Analysis and Filtering
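Wavelet filtering generally follows three steps: decompose the signal, shrink or remove detail coefficients, and reconstruct. The sketch below assumes a single-level orthonormal Haar transform with soft thresholding; spkit's wavelet filtering functions may use other wavelets, more decomposition levels and other threshold rules, and all names here are hypothetical.

```python
import math


# Hypothetical sketch, not spkit's API
def haar_dwt(x):
    """One level of the orthonormal Haar DWT (len(x) must be even)."""
    s = 1 / math.sqrt(2)
    approx = [(x[i] + x[i + 1]) * s for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) * s for i in range(0, len(x), 2)]
    return approx, detail


def haar_idwt(approx, detail):
    """Inverse of haar_dwt."""
    s = 1 / math.sqrt(2)
    x = []
    for a, d in zip(approx, detail):
        x.extend([(a + d) * s, (a - d) * s])
    return x


def soft_threshold(c, thr):
    """Shrink a coefficient towards zero by thr; values with |c| <= thr become 0."""
    return math.copysign(max(abs(c) - thr, 0.0), c)


def wavelet_filter(x, thr):
    """Decompose, soft-threshold the detail coefficients, reconstruct."""
    approx, detail = haar_dwt(x)
    detail = [soft_threshold(d, thr) for d in detail]
    return haar_idwt(approx, detail)


x = [1.0, 1.2, 0.9, 1.1, 5.0, 5.1, 4.9, 5.2]
print(wavelet_filter(x, thr=0.0))  # reconstructs x up to rounding
print(wavelet_filter(x, thr=0.5))  # small pairwise differences removed
```

With a zero threshold the transform is perfectly invertible; raising the threshold removes small, fast fluctuations while keeping the large step between the two halves of the signal.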

Basic Filtering

- Removing DC / smoothing for multi-channel signals

- Bandpass/Lowpass/Highpass/Bandreject filtering for multi-channel signals
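A minimal sketch of DC removal and smoothing applied channel by channel, assuming samples are stored as rows and channels as columns (the same layout as the EEG array in the ICA example). The function names are hypothetical, not spkit's API. Band-pass, low-pass, high-pass and band-reject filtering are usually done with a designed IIR or FIR filter (for example SciPy's `scipy.signal.butter` with `scipy.signal.filtfilt`), which this sketch does not cover.

```python
# Hypothetical sketch, not spkit's API
def remove_dc(X):
    """Subtract each channel's mean. X is a list of samples, each a list of channel values."""
    n_ch = len(X[0])
    means = [sum(row[c] for row in X) / len(X) for c in range(n_ch)]
    return [[row[c] - means[c] for c in range(n_ch)] for row in X]


def moving_average(X, k=3):
    """Centered moving average of odd length k per channel (window shrinks at the edges)."""
    half = k // 2
    n = len(X)
    out = []
    for i in range(n):
        window = X[max(0, i - half):min(n, i + half + 1)]
        out.append([sum(row[c] for row in window) / len(window) for c in range(len(X[0]))])
    return out


# Two channels with different DC offsets, shape (n_samples, n_channels)
X = [[10 + (-1) ** i, -5 + 0.5 * (-1) ** i] for i in range(8)]
Y = remove_dc(X)
print([sum(row[c] for row in Y) / len(Y) for c in range(2)])  # [0.0, 0.0]
print(moving_average(Y, k=3))
```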

Biomedical Signal Processing

Artifact Removal Algorithm

- ATAR algorithm: Automatic and Tunable Artifact Removal algorithm for EEG, from the article

- ICA-based algorithm

Machine Learning models - with visualizations

- Logistic Regression

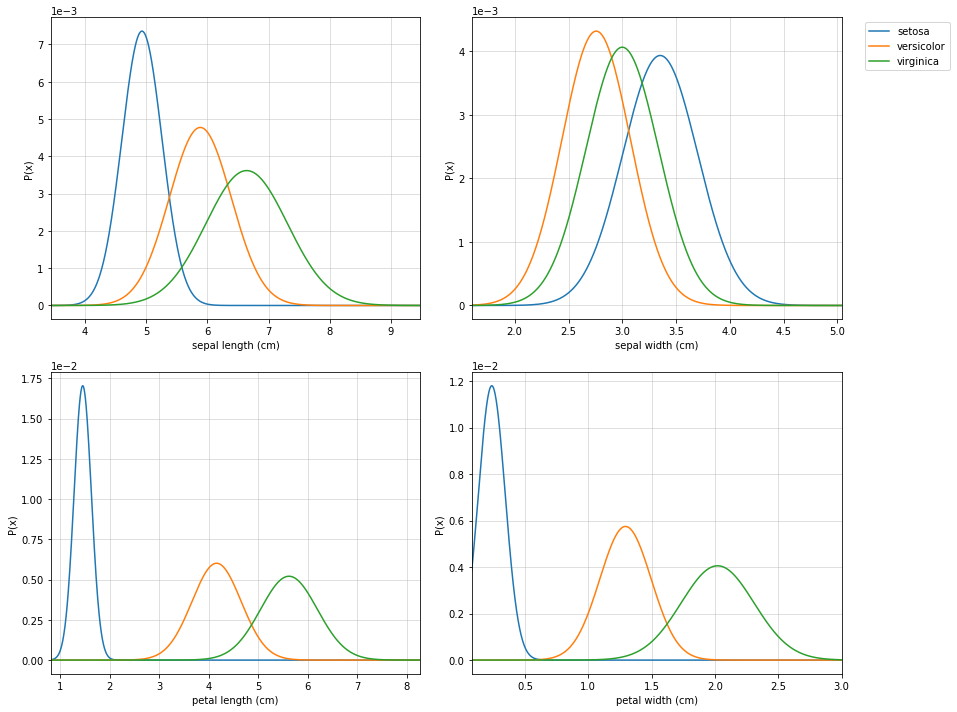

- Naive Bayes

- Decision Trees

- DeepNet (to be updated)
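To illustrate how one of these models works, here is a Gaussian Naive Bayes classifier, a common variant that models each feature within each class as an independent Gaussian and predicts the class with the largest log posterior. It is a hypothetical sketch, not spkit's Naive Bayes implementation, which may treat features and variance estimates differently.

```python
import math
from collections import defaultdict


# Hypothetical sketch of Gaussian Naive Bayes, not spkit's API
def fit_gaussian_nb(X, y):
    """Per-class prior, feature means and variances (features assumed independent)."""
    groups = defaultdict(list)
    for xi, yi in zip(X, y):
        groups[yi].append(xi)
    model = {}
    for label, rows in groups.items():
        n, d = len(rows), len(rows[0])
        means = [sum(r[j] for r in rows) / n for j in range(d)]
        # A small constant keeps every variance strictly positive
        var = [sum((r[j] - means[j]) ** 2 for r in rows) / n + 1e-9 for j in range(d)]
        model[label] = (n / len(X), means, var)
    return model


def predict_gaussian_nb(model, x):
    """Return the label with the largest log posterior (up to a shared constant)."""
    def log_post(params):
        prior, means, var = params
        ll = sum(-0.5 * math.log(2 * math.pi * v) - (xj - m) ** 2 / (2 * v)
                 for xj, m, v in zip(x, means, var))
        return math.log(prior) + ll
    return max(model, key=lambda label: log_post(model[label]))


X = [[1.0, 2.0], [1.2, 1.8], [0.8, 2.2], [5.0, 6.0], [5.2, 5.8], [4.8, 6.2]]
y = [0, 0, 0, 1, 1, 1]
model = fit_gaussian_nb(X, y)
print(predict_gaussian_nb(model, [1.1, 2.1]), predict_gaussian_nb(model, [5.1, 5.9]))  # 0 1
```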

Examples

Scalogram CWT

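```python
import numpy as np
import matplotlib.pyplot as plt
import spkit as sp
from spkit.cwt import ScalogramCWT
from spkit.cwt import compare_cwt_example

# Single-channel EEG sample and its sampling rate
x, fs = sp.load_data.eegSample_1ch()
t = np.arange(len(x))/fs
print(x.shape, t.shape)

# Built-in example that plots CWT results for comparison
compare_cwt_example(x, t, fs=fs)

# Parameter grids for the Gauss wavelet (ranges of f0 and Q)
f0 = np.linspace(0.1, 10, 100)
Q = np.linspace(0.1, 5, 100)
XW, S = ScalogramCWT(x, t, fs=fs, wType='Gauss', PlotPSD=True, f0=f0, Q=Q)
```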

Information Theory

View in notebook

```python
import numpy as np
import matplotlib.pyplot as plt
import spkit as sp

x = np.random.rand(10000)   # uniformly distributed samples
y = np.random.randn(10000)  # normally distributed samples

# Shannon entropy
H_x = sp.entropy(x, alpha=1)
H_y = sp.entropy(y, alpha=1)

# Rényi entropy of order alpha=2
Hr_x = sp.entropy(x, alpha=2)
Hr_y = sp.entropy(y, alpha=2)

H_xy = sp.entropy_joint(x, y)        # joint entropy H(x,y)
H_x1y = sp.entropy_cond(x, y)        # conditional entropy H(x|y)
H_y1x = sp.entropy_cond(y, x)        # conditional entropy H(y|x)
I_xy = sp.mutual_Info(x, y)          # mutual information I(x,y)
H_xy_cross = sp.entropy_cross(x, y)  # cross entropy
D_xy = sp.entropy_kld(x, y)          # Kullback–Leibler divergence

print('Shannon entropy')
print('Entropy of x: H(x) = ', H_x)
print('Entropy of y: H(y) = ', H_y)
print('-')
print('Rényi entropy')
print('Entropy of x: H(x) = ', Hr_x)
print('Entropy of y: H(y) = ', Hr_y)
print('-')
print('Mutual Information I(x,y) = ', I_xy)
print('Joint Entropy H(x,y) = ', H_xy)
print('Conditional Entropy: H(x|y) = ', H_x1y)
print('Conditional Entropy: H(y|x) = ', H_y1x)
print('-')
print('Cross Entropy: H(x,y) = ', H_xy_cross)
print('Kullback–Leibler divergence: Dkl(x,y) = ', D_xy)

# Histograms of x and y with the optimal bin size
plt.figure(figsize=(12, 5))
plt.subplot(121)
sp.HistPlot(x, show=False)
plt.subplot(122)
sp.HistPlot(y, show=False)
plt.show()
```
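The quantities printed above are linked by standard identities: I(X;Y) = H(X) + H(Y) - H(X,Y) and H(X|Y) = H(X,Y) - H(Y). The sketch below checks them with a plug-in estimator on discrete symbols; it is not how spkit estimates these quantities from real-valued signals, and the helper names are hypothetical.

```python
import math
from collections import Counter


# Hypothetical helpers, not spkit's API
def H(samples):
    """Plug-in Shannon entropy (bits) of a sequence of hashable symbols."""
    n = len(samples)
    return -sum(c / n * math.log2(c / n) for c in Counter(samples).values())


def mutual_info(x, y):
    # I(X;Y) = H(X) + H(Y) - H(X,Y), with pairs (x_i, y_i) as joint symbols
    return H(x) + H(y) - H(list(zip(x, y)))


def cond_entropy(x, y):
    # H(X|Y) = H(X,Y) - H(Y)
    return H(list(zip(x, y))) - H(y)


# Every combination of {0,1} x {0,1} occurs equally often, so x and y are independent
x = [0, 0, 1, 1]
y = [0, 1, 0, 1]
print(mutual_info(x, y), cond_entropy(x, y))  # 0.0 1.0
print(mutual_info(x, x))                      # 1.0, since I(X;X) = H(X)
```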

Independent Component Analysis

View in notebook

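```python
import numpy as np
from spkit import ICA
from spkit.data import load_data

# Multi-channel EEG sample, shape (n_samples, n_channels), and channel names
X, ch_names = load_data.eegSample()

# 2-second segment (10 s to 12 s at 128 Hz) and its time axis
x = X[128*10:128*12, :]
t = np.arange(x.shape[0])/128.0

# ICA is fitted on an (n_channels, n_samples) array, hence the transpose.
# Sources estimated by each method: s1 fastica, s2 infomax, s3 picard, s4 extended-infomax
sources = {}
for method in ['fastica', 'infomax', 'picard', 'extended-infomax']:
    ica = ICA(n_components=14, method=method)
    ica.fit(x.T)
    sources[method] = ica.transform(x.T)

s1, s2, s3, s4 = (sources[m] for m in ['fastica', 'infomax', 'picard', 'extended-infomax'])
```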

Machine Learning

Logistic Regression - View in notebook

Naive Bayes - View in notebook

Decision Trees - View in notebook

Plotting the tree while training

Linear Feedback Shift Register

```python
from spkit.pylfsr import LFSR

# Example 1: 5-bit LFSR with feedback polynomial x^5 + x^2 + 1 (default LFSR())
L = LFSR()
L.info()             # show the LFSR's details
L.next()             # run one cycle
L.runKCycle(10)      # run 10 cycles
L.runFullCycle()     # run one full period
L.info()
tempseq = L.runKCycle(10000)  # generate 10000 bits from the current state
```
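An LFSR with feedback polynomial x^5 + x^2 + 1 produces bits satisfying the recurrence a[k+5] = a[k+2] XOR a[k]. Because this polynomial is primitive, any nonzero 5-bit start state gives the maximal period 2^5 - 1 = 31, and each period contains 16 ones and 15 zeros. The sketch below assumes that recurrence form; pylfsr's tap indexing and output order may differ, so its exact bit sequence need not match, and the helper names are hypothetical.

```python
# Hypothetical sketch of the LFSR recurrence, not pylfsr's API
def lfsr_bits(init, n):
    """First n bits of the sequence a[k+5] = a[k+2] XOR a[k] (polynomial x^5 + x^2 + 1)."""
    a = list(init)
    while len(a) < n:
        k = len(a) - 5
        a.append(a[k + 2] ^ a[k])
    return a[:n]


def period(init):
    """Number of steps until the 5-bit state returns to init."""
    a = list(init)
    k = 0
    while True:
        a.append(a[k + 2] ^ a[k])
        k += 1
        if a[k:k + 5] == list(init):
            return k


seq = lfsr_bits([1, 1, 1, 1, 1], 31)
print(period([1, 1, 1, 1, 1]))  # 31: maximal length, 2^5 - 1
print(sum(seq))                 # 16 ones (and 15 zeros) in one period
```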

Cite As

```bibtex
@software{nikesh_bajaj_2021_4710694,
  author    = {Nikesh Bajaj},
  title     = {Nikeshbajaj/spkit: 0.0.9.2},
  month     = apr,
  year      = 2021,
  publisher = {Zenodo},
  version   = {0.0.9.2},
  doi       = {10.5281/zenodo.4710694},
  url       = {https://doi.org/10.5281/zenodo.4710694}
}
```

Contacts:

- Nikesh Bajaj

- http://nikeshbajaj.in

- n.bajaj[AT]qmul.ac.uk, n.bajaj[AT]imperial[dot]ac[dot]uk

PhD Student: Queen Mary University of London


Source distribution: spkit-0.0.9.3.tar.gz (1.4 MB)