tune-easy: A hyperparameter tuning tool, extremely easy to use.

This package supports estimators that follow the scikit-learn API, such as SVM and LightGBM.
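Estimators that follow the scikit-learn API are, roughly, objects that store hyperparameters in `__init__`, expose them through `get_params()`/`set_params()`, learn from data in `fit()` (which returns `self`), and predict with `predict()`. The toy class below is a stdlib-only sketch of that contract for illustration. `MeanThresholdClassifier` is hypothetical and is not part of tune-easy or scikit-learn; a real estimator would normally subclass `sklearn.base.BaseEstimator`, which derives `get_params()`/`set_params()` from the `__init__` signature.

```python
class MeanThresholdClassifier:
    """Hypothetical toy binary classifier following scikit-learn's estimator conventions."""

    def __init__(self, feature=0):
        # Hyperparameters are stored unchanged in __init__; nothing is learned here
        self.feature = feature

    def get_params(self, deep=True):
        # Tuning tools read hyperparameters through get_params()
        return {"feature": self.feature}

    def set_params(self, **params):
        # ...and write candidate values back through set_params()
        for name, value in params.items():
            setattr(self, name, value)
        return self

    def fit(self, X, y):
        # Learn a threshold halfway between the two class means of one feature
        classes = sorted(set(y))
        if len(classes) != 2:
            raise ValueError("expected exactly two classes")
        means = []
        for label in classes:
            values = [row[self.feature] for row, target in zip(X, y) if target == label]
            means.append(sum(values) / len(values))
        self.threshold_ = (means[0] + means[1]) / 2
        # Remember which class lies above the threshold
        if means[0] <= means[1]:
            self.low_class_, self.high_class_ = classes
        else:
            self.high_class_, self.low_class_ = classes
        return self  # fit() returns self so calls can be chained

    def predict(self, X):
        return [self.high_class_ if row[self.feature] > self.threshold_ else self.low_class_
                for row in X]

    def score(self, X, y):
        # Accuracy, the default score for scikit-learn classifiers
        predictions = self.predict(X)
        return sum(p == t for p, t in zip(predictions, y)) / len(y)


X = [[0.1], [0.2], [0.9], [1.0]]
y = ["a", "a", "b", "b"]
clf = MeanThresholdClassifier().set_params(feature=0).fit(X, y)
print(clf.predict([[0.15], [0.95]]))  # ['a', 'b']
```

Because every hyperparameter goes through `get_params()`/`set_params()`, a tuner can try new values on any such object without knowing its internals.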

Usage

Example of All-in-one Tuning

from tune_easy import AllInOneTuning

import seaborn as sns

# Load Dataset

iris = sns.load_dataset("iris")

iris = iris[iris['species'] != 'setosa'] # Select 2 classes

TARGET_VARIABLE = 'species' # Target variable

USE_EXPLANATORY = ['petal_width', 'petal_length', 'sepal_width', 'sepal_length'] # Explanatory variables

y = iris[TARGET_VARIABLE].values

X = iris[USE_EXPLANATORY].values

###### Run All-in-one Tuning ######

all_tuner = AllInOneTuning()

all_tuner.all_in_one_tuning(X, y, x_colnames=USE_EXPLANATORY, cv=2)

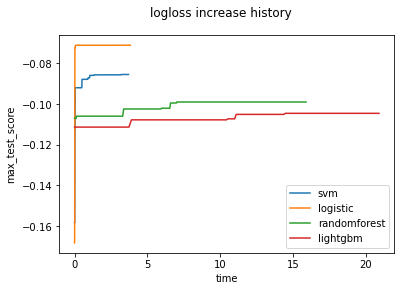

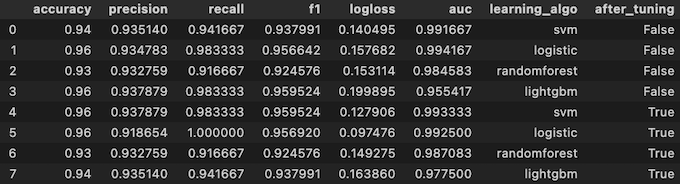

all_tuner.df_scores
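`cv=2` presumably requests 2-fold cross-validation, following scikit-learn's convention that an integer `cv` is the number of folds. The stdlib-only sketch below shows how plain, unshuffled k-fold splitting assigns sample indices; `kfold_indices` is a hypothetical helper, not a tune-easy function. Note that the iris subset above is sorted by species, so unshuffled, unstratified 2-fold splitting would put a single species in each fold. scikit-learn's helpers use stratified folds for classifiers when `cv` is an integer; whether tune-easy does the same is not stated here.

```python
def kfold_indices(n_samples, n_splits=2):
    """Yield (train_indices, test_indices) for unshuffled k-fold cross-validation."""
    if not 2 <= n_splits <= n_samples:
        raise ValueError("n_splits must be between 2 and n_samples")
    # Fold sizes differ by at most one; the first n_samples % n_splits folds get the extra sample
    base, extra = divmod(n_samples, n_splits)
    start = 0
    for fold in range(n_splits):
        size = base + (1 if fold < extra else 0)
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size


for train, test in kfold_indices(5, n_splits=2):
    print("train:", train, "test:", test)
# train: [3, 4] test: [0, 1, 2]
# train: [0, 1, 2] test: [3, 4]
```

Each sample lands in exactly one test fold, and a cross-validated score is typically the mean of the per-fold scores.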

For usage of the other classes, see the API Reference and Examples.

Example of Detailed Tuning

from tune_easy import LGBMClassifierTuning

import seaborn as sns

# Load dataset

iris = sns.load_dataset("iris")

iris = iris[iris['species'] != 'setosa'] # Select 2 classes

TARGET_VARIABLE = 'species' # Target variable

USE_EXPLANATORY = ['petal_width', 'petal_length', 'sepal_width', 'sepal_length'] # Explanatory variables

y = iris[TARGET_VARIABLE].values

X = iris[USE_EXPLANATORY].values

###### Run Detailed Tuning ######

tuning = LGBMClassifierTuning(X, y, USE_EXPLANATORY) # Initialize tuning instance

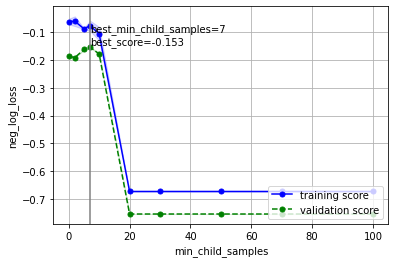

tuning.plot_first_validation_curve(cv=2) # Plot first validation curve

tuning.optuna_tuning(cv=2) # Optimization using Optuna library
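`optuna_tuning()` delegates the search to the Optuna library, whose default sampler is TPE (Tree-structured Parzen Estimator). The sketch below swaps in plain random search, which is simpler but shares the same loop: propose hyperparameters, score them, keep the best. `random_search`, the search space, and the toy objective are hypothetical; in practice the objective would be a cross-validated score of the estimator.

```python
import math
import random


def random_search(objective, space, n_trials=50, seed=0):
    """Sample hyperparameters at random and keep the highest-scoring set.

    space maps a name to (low, high, log); log=True samples uniformly in log space.
    """
    rng = random.Random(seed)
    history = []  # (params, score) for every trial, in order
    best_params, best_score = None, -math.inf
    for _ in range(n_trials):
        params = {}
        for name, (low, high, log) in space.items():
            if log:
                params[name] = math.exp(rng.uniform(math.log(low), math.log(high)))
            else:
                params[name] = rng.uniform(low, high)
        score = objective(params)
        history.append((params, score))
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score, history


# Hypothetical objective that peaks at learning_rate=0.1, subsample=0.8
def toy_objective(params):
    return (-(math.log10(params["learning_rate"]) + 1) ** 2
            - 10 * (params["subsample"] - 0.8) ** 2)


space = {"learning_rate": (1e-3, 1.0, True), "subsample": (0.5, 1.0, False)}
best_params, best_score, history = random_search(toy_objective, space, n_trials=100)
print(best_params, best_score)
```

Sampling `learning_rate` on a log scale spreads trials evenly across orders of magnitude, which is the usual choice for rate-like hyperparameters.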

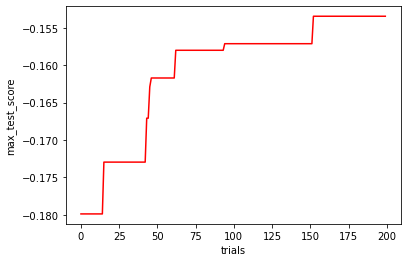

tuning.plot_search_history() # Plot score increase history
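A score history plot of this kind typically draws each trial's score together with the best score found so far. Assuming that is what `plot_search_history()` shows (its exact contents are not documented here), the running best is a cumulative maximum, which `itertools.accumulate` computes directly. The trial scores below are made-up numbers.

```python
from itertools import accumulate

# Made-up validation scores for six consecutive trials (higher is better)
trial_scores = [0.80, 0.85, 0.83, 0.90, 0.88, 0.91]

# Best score seen up to and including each trial
best_so_far = list(accumulate(trial_scores, max))
print(best_so_far)  # [0.8, 0.85, 0.85, 0.9, 0.9, 0.91]
```

A curve that flattens early suggests that extra trials are unlikely to improve the score much.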

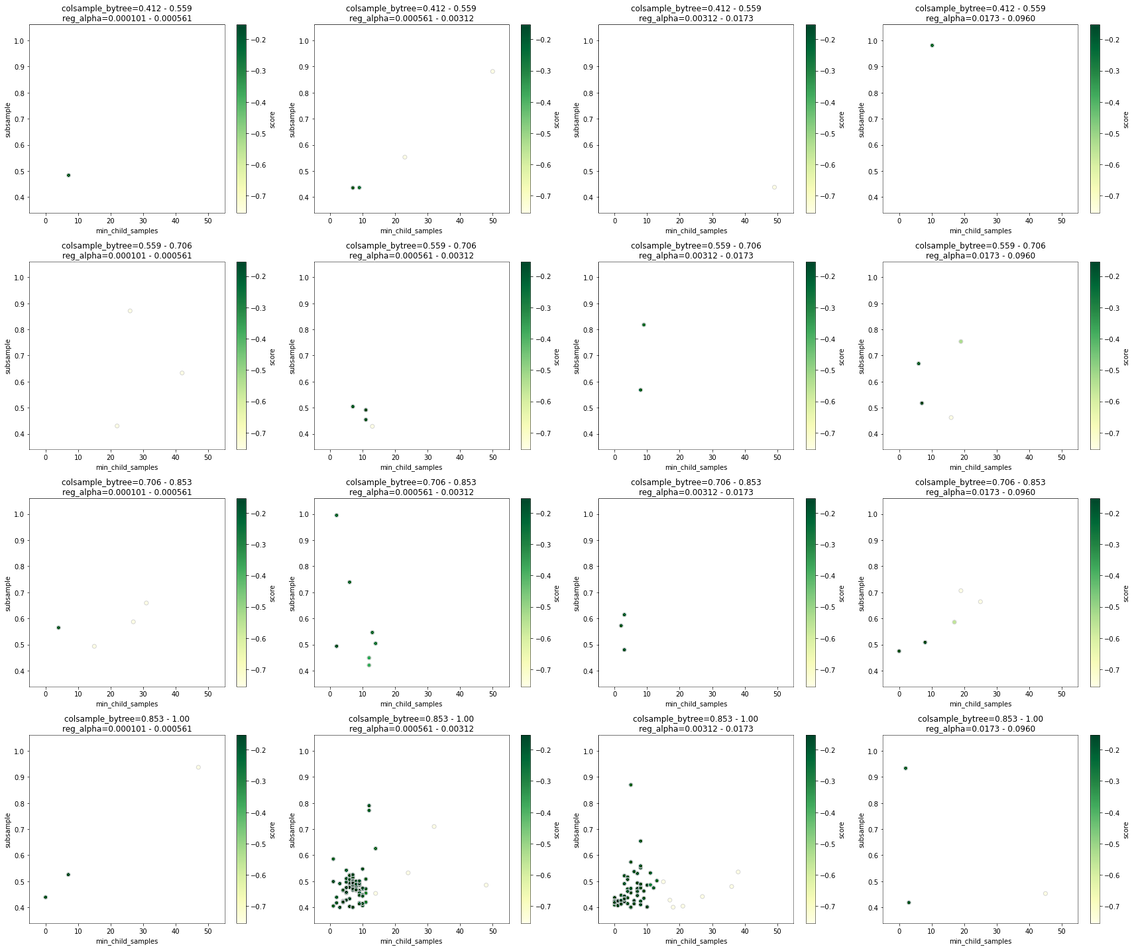

tuning.plot_search_map() # Visualize relationship between parameters and validation score

tuning.plot_best_learning_curve() # Plot learning curve

tuning.plot_best_validation_curve() # Plot validation curve
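A validation curve shows the cross-validated score as one hyperparameter varies while the others stay fixed, whereas a learning curve shows the score as the training set grows. The stdlib-only sketch below computes a validation curve for a hypothetical 1-D k-nearest-neighbors classifier, sweeping the number of neighbors `k` and scoring with leave-one-out cross-validation. The model, data, and helper names are illustrative and are not what tune-easy plots; scikit-learn provides this computation as `sklearn.model_selection.validation_curve`.

```python
def knn_predict(train_X, train_y, x, k):
    """Majority vote among the k training points closest to x (1-D features)."""
    nearest = sorted(range(len(train_X)), key=lambda i: abs(train_X[i] - x))[:k]
    votes = {}
    for i in nearest:
        votes[train_y[i]] = votes.get(train_y[i], 0) + 1
    # Ties go to the alphabetically first label
    return max(sorted(votes), key=votes.get)


def validation_curve_loo(X, y, k_values):
    """Leave-one-out accuracy for each candidate k: the data behind a validation curve."""
    curve = []
    for k in k_values:
        correct = 0
        for i in range(len(X)):
            # Hold out sample i, train on the rest
            train_X, train_y = X[:i] + X[i + 1:], y[:i] + y[i + 1:]
            correct += knn_predict(train_X, train_y, X[i], k) == y[i]
        curve.append((k, correct / len(X)))
    return curve


X = [1.0, 1.2, 1.4, 3.0, 3.1, 3.3]
y = ["a", "a", "a", "b", "b", "b"]
print(validation_curve_loo(X, y, [1, 3, 5]))  # [(1, 1.0), (3, 1.0), (5, 0.0)]
```

With `k=5`, each held-out point is outvoted by the three points of the other class, so the score collapses: the underfitting end of the curve.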

For usage of the other classes, see the API Reference and Examples.

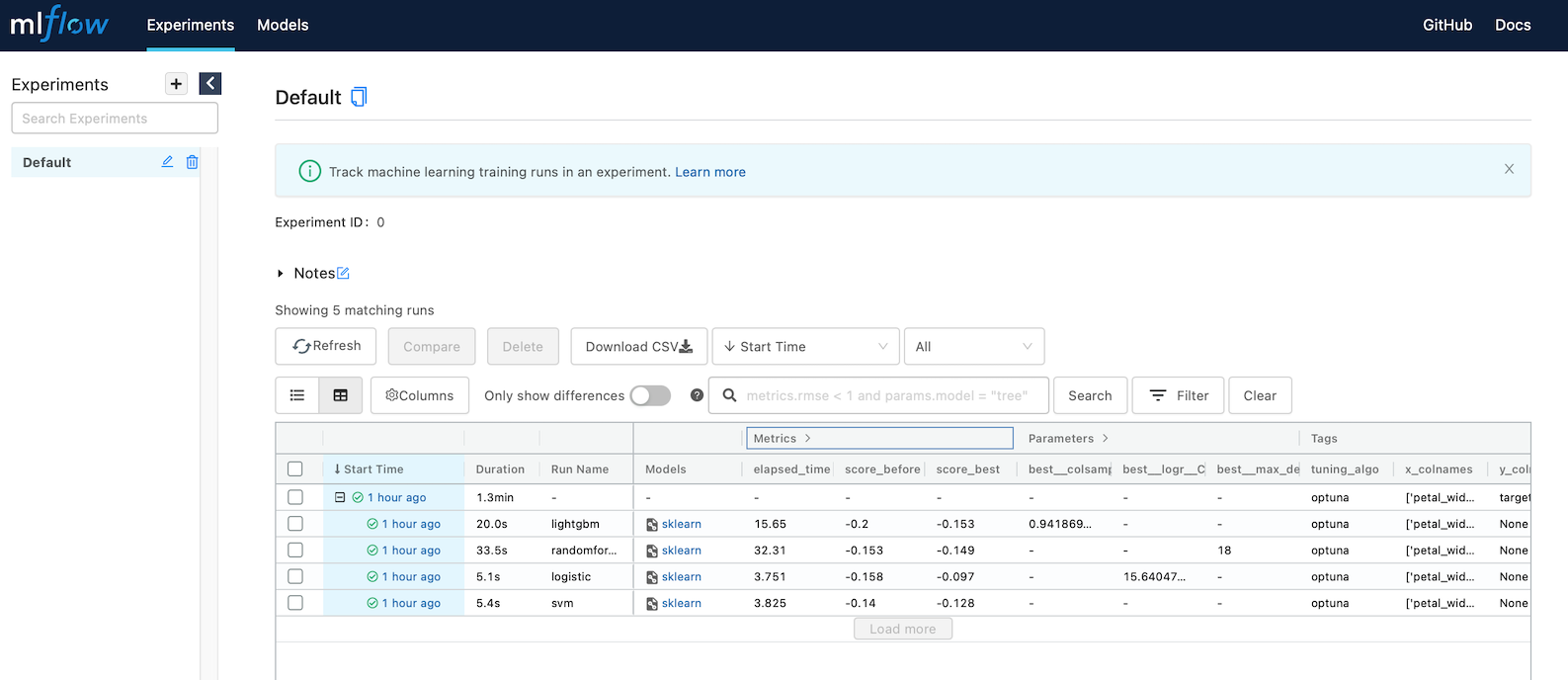

Example of MLflow logging

from tune_easy import AllInOneTuning

import seaborn as sns

# Load dataset

iris = sns.load_dataset("iris")

iris = iris[iris['species'] != 'setosa'] # Select 2 classes

TARGET_VARIABLE = 'species' # Target variable

USE_EXPLANATORY = ['petal_width', 'petal_length', 'sepal_width', 'sepal_length'] # Explanatory variables

y = iris[TARGET_VARIABLE].values

X = iris[USE_EXPLANATORY].values

###### Run All-in-one Tuning with MLflow logging ######

all_tuner = AllInOneTuning()

all_tuner.all_in_one_tuning(X, y, x_colnames=USE_EXPLANATORY, cv=2, mlflow_logging=True) # Set MLflow logging argument

For usage of the other classes, see the API Reference and Examples.

Requirements

tune-easy 0.2.1 requires:

- Python >=3.6
- scikit-learn >=0.24.2
- NumPy >=1.20.3
- pandas >=1.2.4
- Matplotlib >=3.3.4
- seaborn >=0.11.0
- Optuna >=2.7.0
- BayesianOptimization >=1.2.0
- MLflow >=1.17.0
- LightGBM >=3.3.2
- XGBoost >=1.4.2
- seaborn-analyzer >=0.2.11
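To check an existing environment against the minimum versions listed above, a short script can query installed distributions with `importlib.metadata` (standard library, but Python 3.8+, so newer than the Python 3.6 floor). The comparison below only looks at leading numeric components and ignores pre-release tags; the `packaging` library's `Version` class is the robust choice. The PyPI distribution names used as keys (for example `bayesian-optimization` for BayesianOptimization) are assumptions about how each requirement is published.

```python
from importlib.metadata import PackageNotFoundError, version

# Minimum versions from the Requirements list; keys are assumed PyPI distribution names
MINIMUMS = {
    "scikit-learn": "0.24.2",
    "numpy": "1.20.3",
    "pandas": "1.2.4",
    "matplotlib": "3.3.4",
    "seaborn": "0.11.0",
    "optuna": "2.7.0",
    "bayesian-optimization": "1.2.0",
    "mlflow": "1.17.0",
    "lightgbm": "3.3.2",
    "xgboost": "1.4.2",
    "seaborn-analyzer": "0.2.11",
}


def numeric_prefix(ver):
    """Keep the leading digits of each dot-separated part, stopping at the first non-digit."""
    parts = []
    for piece in ver.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
        if len(digits) < len(piece):
            break
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Compare numeric prefixes after padding with zeros, so '1.2' equals '1.2.0'."""
    a, b = numeric_prefix(installed), numeric_prefix(minimum)
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def check_requirements(minimums=MINIMUMS):
    report = {}
    for name, minimum in minimums.items():
        try:
            installed = version(name)
        except PackageNotFoundError:
            report[name] = "missing"
            continue
        ok = meets_minimum(installed, minimum)
        report[name] = "ok" if ok else f"too old ({installed} < {minimum})"
    return report


if __name__ == "__main__":
    for name, status in check_requirements().items():
        print(f"{name}: {status}")
```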

Installing tune-easy

Use pip to install the binary wheel from PyPI:

$ pip install tune-easy

Support

Bugs may be reported at https://github.com/c60evaporator/tune-easy/issues

Contact

If you have any questions or comments about param-tuning-utility, please feel free to contact me by email (c60evaporator@gmail.com) or on Twitter (https://twitter.com/c60evaporator). This project is hosted at https://github.com/c60evaporator/param-tuning-utility.

Hashes for tune_easy-0.2.1-py3-none-any.whl

| Algorithm | Hash digest |
|---|---|
| SHA256 | 616c44d4022f0be828aae9c38018e09e042a685ea9db3ec6abacb4b2d49f7a4e |
| MD5 | 47a6f68b06fac043732fb621727670f9 |
| BLAKE2b-256 | 94cf1ce8ee3504566bac3afb140d7fd8960acaa4887e3acd531982d920e1c098 |