pymars 0.10.0

pip install pymars

Released:

MARS: a tensor-based unified framework for large-scale data computation.

Unverified details

These details have not been verified by PyPI

Project links

Classifiers

- Operating System

- Programming Language

- Topic

Project description

Mars is a tensor-based unified framework for large-scale data computation that scales NumPy, pandas, scikit-learn, and many other libraries.

Installation

Mars can be installed with pip:

pip install pymars

Installation for Developers

To contribute code to Mars, follow the instructions below to install Mars for development:

git clone https://github.com/mars-project/mars.git
cd mars
pip install -e ".[dev]"

More details about installing Mars can be found in the installation section of the Mars documentation.

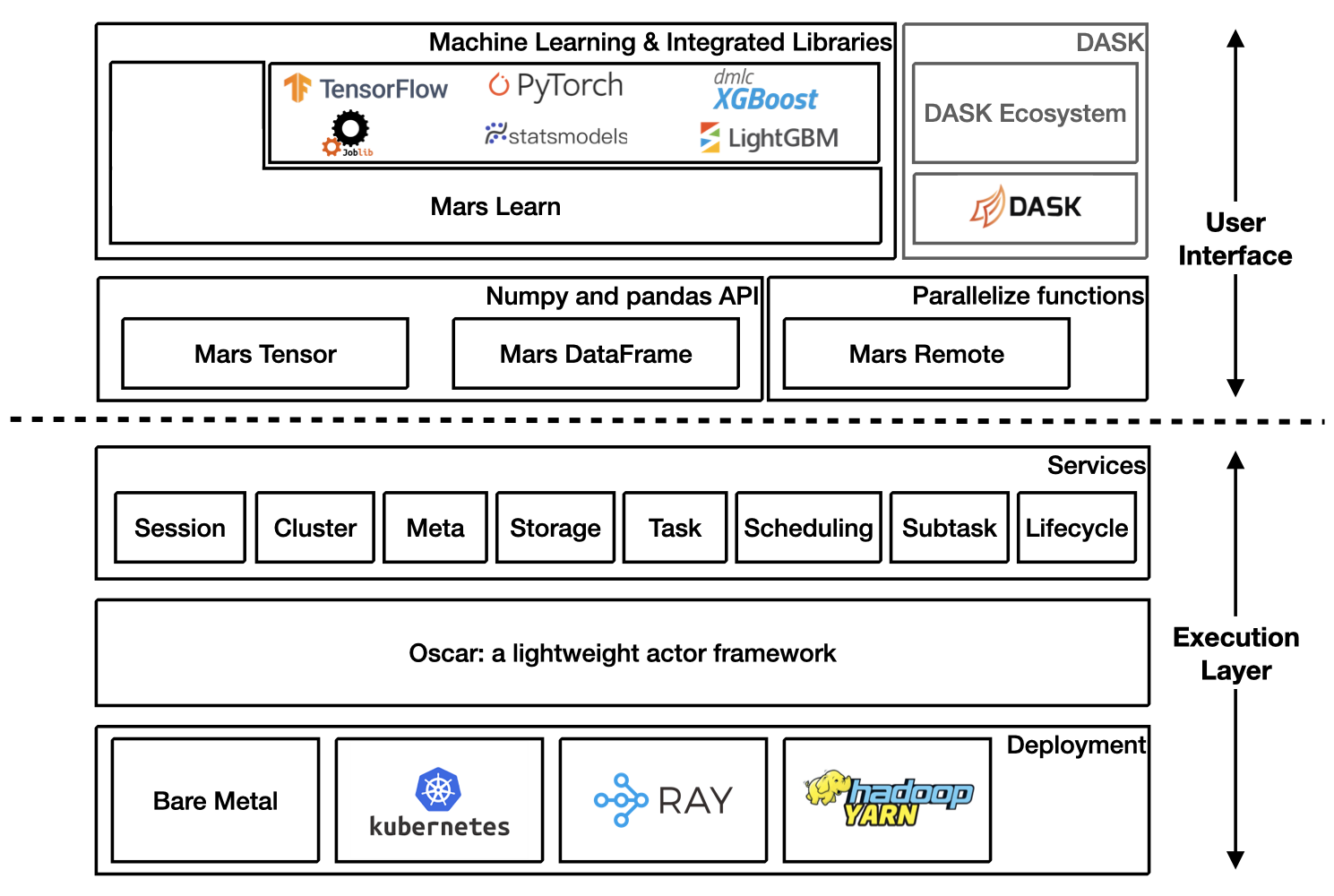

Architecture Overview

Getting Started

Start a new runtime locally:

>>> import mars
>>> mars.new_session()

Or connect to a Mars cluster that is already initialized:

>>> import mars
>>> mars.new_session('http://<web_ip>:<ui_port>')

Mars Tensor

Mars tensor provides a familiar, NumPy-like interface.

Mars can leverage multiple cores, even on a laptop, and can be even faster in a distributed setting.

Mars DataFrame

Mars DataFrame provides a familiar, pandas-like interface.

Mars Learn

Mars learn provides a familiar, scikit-learn-like interface.

Mars learn also integrates with many other libraries.

Mars remote

Mars remote allows users to execute functions in parallel.

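As a rough illustration of the difference between plain function calls and Mars remote, the sketch below estimates pi by Monte Carlo sampling in both styles. The helper names (`calc_chunk`, `calc_pi`, `pi_vanilla`, `pi_mars_remote`) are hypothetical. The Mars variant assumes `mars.remote.spawn` and `.execute().fetch()` behave as in the Mars remote API, and it imports Mars lazily so that the plain version runs without pymars installed.

```python
import random


def calc_chunk(n, seed):
    """Count how many of n random points in the unit square fall inside the unit circle."""
    rng = random.Random(seed)  # seeded so each chunk is reproducible
    inside = 0
    for _ in range(n):
        x, y = rng.random(), rng.random()
        if x * x + y * y < 1:
            inside += 1
    return inside


def calc_pi(counts, total):
    """Combine per-chunk counts into an estimate of pi."""
    return 4 * sum(counts) / total


def pi_vanilla(total, chunk):
    """Plain Python: the chunks are evaluated one after another."""
    n_chunks = total // chunk
    counts = [calc_chunk(chunk, i) for i in range(n_chunks)]
    return calc_pi(counts, n_chunks * chunk)


def pi_mars_remote(total, chunk):
    """Mars remote: each chunk becomes a spawned task (needs pymars and a session)."""
    import mars.remote as mr  # imported lazily; assumption: pymars is installed

    n_chunks = total // chunk
    counts = [mr.spawn(calc_chunk, args=(chunk, i)) for i in range(n_chunks)]
    pi = mr.spawn(calc_pi, args=(counts, n_chunks * chunk))
    return pi.execute().fetch()
```

Calling `pi_vanilla(40_000, 10_000)` runs four chunks sequentially, while `pi_mars_remote` with the same arguments would hand the same functions to Mars so the chunks can run in parallel.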

DASK on Mars

Refer to DASK on Mars for more information.

Eager Mode

Mars supports an eager mode, which makes it friendlier for development and easier to debug.
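To make the distinction concrete, here is a toy sketch of deferred versus eager evaluation. It is a conceptual illustration only, not how Mars is implemented: the `Deferred` class and its `eager` switch are hypothetical names invented for this example.

```python
class Deferred:
    """Toy stand-in for a lazily evaluated value (not a Mars class)."""

    eager = False  # hypothetical global switch for this toy example

    def __init__(self, func, *args):
        self._func = func
        self._args = args
        self._result = None
        self._done = False
        if Deferred.eager:
            # In eager mode the work happens as soon as the object is created.
            self.execute()

    @property
    def executed(self):
        return self._done

    def execute(self):
        """Run the computation once, resolving any deferred inputs first."""
        if not self._done:
            args = [a.execute() if isinstance(a, Deferred) else a for a in self._args]
            self._result = self._func(*args)
            self._done = True
        return self._result


lazy = Deferred(sum, [1, 2, 3])
print(lazy.executed)   # False: nothing has run yet
print(lazy.execute())  # 6

Deferred.eager = True
now = Deferred(max, 4, 9)
print(now.executed)    # True: evaluated at creation
Deferred.eager = False
```

In the deferred case an error in `func` only surfaces when `execute()` is called, whereas in the eager case it surfaces on the line that created the object, which is what makes eager evaluation convenient for debugging.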

Users can enable eager mode through options, set at the beginning of the program or console session:

>>> from mars.config import options
>>> options.eager_mode = True

Or use a context:

>>> from mars.config import option_context
>>> with option_context() as options:
...     options.eager_mode = True
...     # the eager mode is on only for the with statement
...     ...

When eager mode is on, tensors, DataFrames, and other Mars objects are executed immediately by the default session as soon as they are created.

>>> import mars.tensor as mt
>>> import mars.dataframe as md
>>> from mars.config import options
>>> options.eager_mode = True
>>> t = mt.arange(6).reshape((2, 3))
>>> t
array([[0, 1, 2],
       [3, 4, 5]])
>>> df = md.DataFrame(t)
>>> df.sum()
0    3
1    5
2    7
dtype: int64

Mars on Ray

Mars also integrates deeply with Ray: it runs efficiently on Ray and interoperates with the large ecosystem of machine learning and distributed systems built on top of Ray core.

Start a new Mars on Ray runtime locally:

import mars
mars.new_session(backend='ray')
# Perform compute

Interact with Ray Dataset:

import mars.tensor as mt
import mars.dataframe as md
df = md.DataFrame(
    mt.random.rand(1000_0000, 4),
    columns=list('abcd'))
# Convert mars dataframe to ray dataset
ds = md.to_ray_dataset(df)
print(ds.schema(), ds.count())
ds.filter(lambda row: row["a"] > 0.5).show(5)
# Convert ray dataset to mars dataframe
df2 = md.read_ray_dataset(ds)
print(df2.head(5).execute())

Refer to Mars on Ray for more information.

Easy to scale in and scale out

Mars can scale in to a single machine and scale out to a cluster with thousands of machines. It is fairly simple to migrate from a single machine to a cluster to process more data or gain better performance.

Bare Metal Deployment

Mars scales out to a cluster by starting the different components of the Mars distributed runtime on different machines in the cluster.
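The supervisor and worker commands documented in this section take only a few flags (`-h`, `-p`, `-w`, `-s`). As a hedged sketch of how a launch script might assemble them, the helpers below only build argument lists and start nothing. The function names and the example addresses are hypothetical, and the `host:port` form of the supervisor endpoint is an assumption.

```python
import shlex


def supervisor_command(host, port, web_port):
    """Argument list for starting the supervisor on the given host and ports."""
    return ["mars-supervisor", "-h", host, "-p", str(port), "-w", str(web_port)]


def worker_command(host, port, supervisor_endpoint):
    """Argument list for starting a worker that reports to a supervisor.

    supervisor_endpoint is assumed to look like "<host>:<port>".
    """
    return ["mars-worker", "-h", host, "-p", str(port), "-s", supervisor_endpoint]


# Hypothetical cluster layout: one supervisor and two workers.
commands = [supervisor_command("10.0.0.1", 7001, 7005)]
for worker_host in ("10.0.0.2", "10.0.0.3"):
    commands.append(worker_command(worker_host, 7002, "10.0.0.1:7001"))

for argv in commands:
    print(shlex.join(argv))  # one shell-safe command line per component
```

Keeping each command as an argument list rather than a single string makes it straightforward to pass to `subprocess.run` or an SSH wrapper without extra quoting.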

One node is selected as the supervisor, which integrates a web service; the other nodes act as workers. The supervisor can be started with the following command:

mars-supervisor -h <host_name> -p <supervisor_port> -w <web_port>

Workers can be started with the following command:

mars-worker -h <host_name> -p <worker_port> -s <supervisor_endpoint>

After all Mars processes are started, users can run

>>> from mars import new_session
>>> sess = new_session('http://<web_ip>:<ui_port>')
>>> # perform computation

Kubernetes Deployment

Refer to Run on Kubernetes for more information.

Yarn Deployment

Refer to Run on Yarn for more information.

Getting involved

Read the development guide.

Join our Slack workgroup: Slack.

Join the mailing list: send an email to mars-dev@googlegroups.com.

Please report bugs by submitting a GitHub issue.

Submit contributions using pull requests.

Thank you in advance for your contributions!


Download files

Download the file for your platform. If you're not sure which to choose, learn more about installing packages.

Source Distribution

Built Distributions

- CPython 3.10, manylinux: glibc 2.17+ x86-64
- CPython 3.10, manylinux: glibc 2.17+ ARM64
- CPython 3.10, macOS 10.9+ x86-64
- CPython 3.10, macOS 10.9+ universal2 (ARM64, x86-64)
- CPython 3.9, manylinux: glibc 2.17+ ARM64
- CPython 3.9, manylinux: glibc 2.5+ x86-64
- CPython 3.9, macOS 10.9+ x86-64
- CPython 3.9, macOS 10.9+ universal2 (ARM64, x86-64)
- CPython 3.8, manylinux: glibc 2.17+ ARM64
- CPython 3.8, manylinux: glibc 2.5+ x86-64
- CPython 3.8, macOS 10.9+ x86-64
- CPython 3.8, macOS 10.9+ universal2 (ARM64, x86-64)
- CPython 3.7m, manylinux: glibc 2.17+ ARM64
- CPython 3.7m, manylinux: glibc 2.5+ x86-64
- CPython 3.7m, macOS 10.9+ x86-64

File details

Details for the file pymars-0.10.0.tar.gz.

File metadata

- Download URL: pymars-0.10.0.tar.gz

- Upload date:

- Size: 1.8 MB

- Tags: Source

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.6

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 8d77de3b49dd170d8a9bb2c5ca6ac5812a73673eed7559efdbb2259fd3958022 |
| MD5 | c543079d2662a877f13feea373178dff |
| BLAKE2b-256 | 574cfaa88cb69b48bf5859a7660cafdf14f8d2875cc6084c1d7d30068790d4a2 |
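A downloaded file can be checked against the digests listed for it. The following is a minimal sketch using only the standard library; the helper names `sha256_of` and `matches_published` are hypothetical, and the constant is the SHA256 value listed above for pymars-0.10.0.tar.gz.

```python
import hashlib

# SHA256 digest listed above for pymars-0.10.0.tar.gz
PUBLISHED_SHA256 = "8d77de3b49dd170d8a9bb2c5ca6ac5812a73673eed7559efdbb2259fd3958022"


def sha256_of(path, chunk_size=1 << 20):
    """Return the hex SHA256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for block in iter(lambda: fh.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def matches_published(path, expected_hex):
    """True if the file's SHA256 digest equals the expected hex string."""
    return sha256_of(path) == expected_hex.strip().lower()
```

For example, `matches_published("pymars-0.10.0.tar.gz", PUBLISHED_SHA256)` would be expected to return `True` for an intact copy of the source distribution saved under that name in the current directory.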

File details

Details for the file pymars-0.10.0-cp310-cp310-win_amd64.whl.

File metadata

- Download URL: pymars-0.10.0-cp310-cp310-win_amd64.whl

- Upload date:

- Size: 3.7 MB

- Tags: CPython 3.10, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.13

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | f0d68b2b3ffa9402c8411c18144b3067cfe0dd190a885f519816b712a8085c9e |
| MD5 | 0d4fc2e71152b4b4e803634762c6b504 |
| BLAKE2b-256 | 8aad6a8668a2616b33844feb41b148c8e04dfb0061b258d56b60535b90f1061e |

File details

Details for the file pymars-0.10.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl.

File metadata

- Download URL: pymars-0.10.0-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

- Upload date:

- Size: 9.6 MB

- Tags: CPython 3.10, manylinux: glibc 2.17+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.6

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 73ddd897ff68de732d42b630ead0329229754bcf396b931b3e9c3f76678062c0 |
| MD5 | 922163b8abfb9130d84f213937c342f9 |
| BLAKE2b-256 | 54d85077b699b594f75ac7c0499dbf370c9347dba6714da106d6111750921d9a |

File details

Details for the file pymars-0.10.0-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl.

File metadata

- Download URL: pymars-0.10.0-cp310-cp310-manylinux_2_17_aarch64.manylinux2014_aarch64.whl

- Upload date:

- Size: 9.6 MB

- Tags: CPython 3.10, manylinux: glibc 2.17+ ARM64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.6

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 3fa21b672653fe187e137e5b257103772daedc0467d1c17be5e30bf1f550be27 |
| MD5 | c0f5e73f95757e07dfeae1069215e2d4 |
| BLAKE2b-256 | b2931d01d0c6eccd4b48abc6ab8d05c019097219ffc791a1b2f46a0589e0e4b6 |

File details

Details for the file pymars-0.10.0-cp310-cp310-macosx_10_9_x86_64.whl.

File metadata

- Download URL: pymars-0.10.0-cp310-cp310-macosx_10_9_x86_64.whl

- Upload date:

- Size: 3.9 MB

- Tags: CPython 3.10, macOS 10.9+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.15.0 pkginfo/1.8.3 requests/2.27.1 setuptools/41.2.0 requests-toolbelt/0.10.1 tqdm/4.64.1 CPython/2.7.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 4a7e96c8df22213614455e655cea47d1223f1967a6acb5eb2f53e54b1929e2eb |
| MD5 | c5a73ef8da67ddb2281bc7b2dccbc1e0 |
| BLAKE2b-256 | fd1135c86d7925ac97aa0e0ba94d05a3b43e32527f6e823be54a9dc65e930530 |

File details

Details for the file pymars-0.10.0-cp310-cp310-macosx_10_9_universal2.whl.

File metadata

- Download URL: pymars-0.10.0-cp310-cp310-macosx_10_9_universal2.whl

- Upload date:

- Size: 5.0 MB

- Tags: CPython 3.10, macOS 10.9+ universal2 (ARM64, x86-64)

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.15.0 pkginfo/1.8.3 requests/2.27.1 setuptools/41.2.0 requests-toolbelt/0.10.1 tqdm/4.64.1 CPython/2.7.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 017f8fffbc33a1323d20e1c975a479789bfe08970e532d7d2ab49f83d90c1542 |
| MD5 | 72876cb4557671a5ed9e1586dce5e462 |
| BLAKE2b-256 | de9a75ae487ac3a4b9188d71df9fc7abc27ebc4a0c596b2c354fe21008506a15 |

File details

Details for the file pymars-0.10.0-cp39-cp39-win_amd64.whl.

File metadata

- Download URL: pymars-0.10.0-cp39-cp39-win_amd64.whl

- Upload date:

- Size: 3.7 MB

- Tags: CPython 3.9, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.13

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 7a97524f19b6f864f76818a1d2930fd86b56d949a2be736206d9ad94563063f7 |
| MD5 | 784ed11a6d6b12f97688f3e0f1f2585e |
| BLAKE2b-256 | 7eece3f5a4006b0d8b4b7c2a0998e7d62e4286e7269fc0db78e23a78b4d97361 |

File details

Details for the file pymars-0.10.0-cp39-cp39-win32.whl.

File metadata

- Download URL: pymars-0.10.0-cp39-cp39-win32.whl

- Upload date:

- Size: 3.5 MB

- Tags: CPython 3.9, Windows x86

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.13

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 61068c9297d6ecca717b4eda29a22ac2f98b2c1d010b5f8e3f0e45a0dd41e8af |
| MD5 | c16bbb6af44613daaeec1c40bf217716 |
| BLAKE2b-256 | 50941392b9082bc880938a3121e956033a91dfe9643d3db001b3f0763ceab544 |

File details

Details for the file pymars-0.10.0-cp39-cp39-manylinux_2_17_aarch64.manylinux2014_aarch64.whl.

File metadata

- Download URL: pymars-0.10.0-cp39-cp39-manylinux_2_17_aarch64.manylinux2014_aarch64.whl

- Upload date:

- Size: 9.7 MB

- Tags: CPython 3.9, manylinux: glibc 2.17+ ARM64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.6

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 4f95439dd3efbdab6861c7ccc384f2707258fb96eb65c22514613c4ae536732f |
| MD5 | 6edaf2b6802419ddb1131bc81a450642 |
| BLAKE2b-256 | 2b9876a51535e5a5b591f7e6d1bfbbcf7103d319c1654b5c416fef32d5dfaac4 |

File details

Details for the file pymars-0.10.0-cp39-cp39-manylinux_2_5_x86_64.manylinux1_x86_64.whl.

File metadata

- Download URL: pymars-0.10.0-cp39-cp39-manylinux_2_5_x86_64.manylinux1_x86_64.whl

- Upload date:

- Size: 7.7 MB

- Tags: CPython 3.9, manylinux: glibc 2.5+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.6

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 8f87a682de82eb0f073e865aadf685238d857c0413e276c3689fca081847ff30 |
| MD5 | 16b780cfbdfe57e5dbecb6590d4a222a |
| BLAKE2b-256 | c455d0e364288ca113ca65bd99ed50298543dd5e238bcb97b843857eb1325edc |

File details

Details for the file pymars-0.10.0-cp39-cp39-macosx_10_9_x86_64.whl.

File metadata

- Download URL: pymars-0.10.0-cp39-cp39-macosx_10_9_x86_64.whl

- Upload date:

- Size: 3.9 MB

- Tags: CPython 3.9, macOS 10.9+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.15.0 pkginfo/1.8.3 requests/2.27.1 setuptools/41.2.0 requests-toolbelt/0.10.1 tqdm/4.64.1 CPython/2.7.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | c786871aa5e827e436186b80f616a75e4e98b058b2fe50be224ba4e5506d94c8 |
| MD5 | 6f01270da0f2beebf82bbb42de74131a |
| BLAKE2b-256 | 11a9f4ac6f764cffacffabd9b53a53666b7d991ceae9beb081a5f02ce3fbe0ec |

File details

Details for the file pymars-0.10.0-cp39-cp39-macosx_10_9_universal2.whl.

File metadata

- Download URL: pymars-0.10.0-cp39-cp39-macosx_10_9_universal2.whl

- Upload date:

- Size: 5.0 MB

- Tags: CPython 3.9, macOS 10.9+ universal2 (ARM64, x86-64)

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.15.0 pkginfo/1.8.3 requests/2.27.1 setuptools/41.2.0 requests-toolbelt/0.10.1 tqdm/4.64.1 CPython/2.7.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 1c871284f36217566ebdb7fa47bc64deafb5a2fbcfdb98a868b06922ca214024 |
| MD5 | 944ca94514cc4fb98656ce14173ed64d |
| BLAKE2b-256 | 8a1c4e01c0481a0de4f81c665c5eebada52748dbd597fdf431e54ec548c6bddf |

File details

Details for the file pymars-0.10.0-cp38-cp38-win_amd64.whl.

File metadata

- Download URL: pymars-0.10.0-cp38-cp38-win_amd64.whl

- Upload date:

- Size: 3.7 MB

- Tags: CPython 3.8, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.13

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 6f9d7f1ae43e95f04018dcbf5901877105325715c40125715b00379f6b7aac55 |
| MD5 | edda091f675baebe3b9ca8f3849549cc |
| BLAKE2b-256 | 31a082fc77ea1b613910301f2f45a019d8868dc2251fa6730d67ead5132e4946 |

File details

Details for the file pymars-0.10.0-cp38-cp38-win32.whl.

File metadata

- Download URL: pymars-0.10.0-cp38-cp38-win32.whl

- Upload date:

- Size: 3.5 MB

- Tags: CPython 3.8, Windows x86

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.13

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | d7bba5df19403eac9cb6570ddda4527bd9a19086dbcd811708b3cd876ab37421 |
| MD5 | 070c08cc0e37dd00a7e887fc42240617 |
| BLAKE2b-256 | 3725ab82e12e7e726868eb43556ef351fb6da77d19e8707f0496c73dbdfff2c5 |

File details

Details for the file pymars-0.10.0-cp38-cp38-manylinux_2_17_aarch64.manylinux2014_aarch64.whl.

File metadata

- Download URL: pymars-0.10.0-cp38-cp38-manylinux_2_17_aarch64.manylinux2014_aarch64.whl

- Upload date:

- Size: 9.8 MB

- Tags: CPython 3.8, manylinux: glibc 2.17+ ARM64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.6

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 00457464432670a76e0691242f58ee6d7287bcb9051556565d222534a41860e1 |
| MD5 | 55750f0d7dca3cfe6a1ba4e46c04e663 |
| BLAKE2b-256 | dd52052cf2fda21eac83c8706786d934b7b25166506ba6f4880fa0db89e8f281 |

File details

Details for the file pymars-0.10.0-cp38-cp38-manylinux_2_5_x86_64.manylinux1_x86_64.whl.

File metadata

- Download URL: pymars-0.10.0-cp38-cp38-manylinux_2_5_x86_64.manylinux1_x86_64.whl

- Upload date:

- Size: 8.0 MB

- Tags: CPython 3.8, manylinux: glibc 2.5+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.6

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 13fb46ae5c9a4321a8031fcf9488178cf64835e7f53ce7529caa1abba5ef9395 |
| MD5 | 1204341b2dad4e11d13f4c1911d298be |
| BLAKE2b-256 | 68e7a67babc1903de1097d53bd90509da5a592ded714b9e76193503e2855f551 |

File details

Details for the file pymars-0.10.0-cp38-cp38-macosx_10_9_x86_64.whl.

File metadata

- Download URL: pymars-0.10.0-cp38-cp38-macosx_10_9_x86_64.whl

- Upload date:

- Size: 3.9 MB

- Tags: CPython 3.8, macOS 10.9+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.15.0 pkginfo/1.8.3 requests/2.27.1 setuptools/41.2.0 requests-toolbelt/0.10.1 tqdm/4.64.1 CPython/2.7.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | c1493f7042247fbf2b08f87d56e8d665f7048f104380018ee7e9f6885295fec0 |
| MD5 | 349b343a76d1f598e0bb4ff1763335a8 |
| BLAKE2b-256 | 5b52ee1e3dceb66f50940b6b11379da7ab25a09bd7f75cba6fab8af18da2ce30 |

File details

Details for the file pymars-0.10.0-cp38-cp38-macosx_10_9_universal2.whl.

File metadata

- Download URL: pymars-0.10.0-cp38-cp38-macosx_10_9_universal2.whl

- Upload date:

- Size: 4.6 MB

- Tags: CPython 3.8, macOS 10.9+ universal2 (ARM64, x86-64)

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.15.0 pkginfo/1.8.3 requests/2.27.1 setuptools/41.2.0 requests-toolbelt/0.10.1 tqdm/4.64.1 CPython/2.7.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 76eab5d8009b53fec9178b6c7cb59ffad33900ef89e6eaf3efac8187a0b651a5 |
| MD5 | 4da3d8e5f81c5342cb8b68a6eeed59ed |
| BLAKE2b-256 | a8dc61e73b963b50ac27fa403df6bea3ff738a7cfc673ea292f4c39f4dddaefe |

File details

Details for the file pymars-0.10.0-cp37-cp37m-win_amd64.whl.

File metadata

- Download URL: pymars-0.10.0-cp37-cp37m-win_amd64.whl

- Upload date:

- Size: 3.7 MB

- Tags: CPython 3.7m, Windows x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.13

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 5254d314a46e30028a2dbf4a1de789a60b94678d5d99e8f9f9fade99ec74e700 |
| MD5 | 7c30bc51a9f655849d0f65f092493aa6 |
| BLAKE2b-256 | 91365a117d3bf7d167e98de7f3a8ae072b3aef9f6975ce5da1ebcd9311e6116c |

File details

Details for the file pymars-0.10.0-cp37-cp37m-win32.whl.

File metadata

- Download URL: pymars-0.10.0-cp37-cp37m-win32.whl

- Upload date:

- Size: 3.2 MB

- Tags: CPython 3.7m, Windows x86

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.9.13

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 94eca2a9cabd8a3c00253bf0ad06815b466ac7230bcac88b985dc1242d3163af |
| MD5 | efb52d598ae4813c8a7dfaa931e12a33 |
| BLAKE2b-256 | 035caf336940066030461a865416390a63289b7faf635404e4b3ef368684f3f0 |

File details

Details for the file pymars-0.10.0-cp37-cp37m-manylinux_2_17_aarch64.manylinux2014_aarch64.whl.

File metadata

- Download URL: pymars-0.10.0-cp37-cp37m-manylinux_2_17_aarch64.manylinux2014_aarch64.whl

- Upload date:

- Size: 9.0 MB

- Tags: CPython 3.7m, manylinux: glibc 2.17+ ARM64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.6

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 9e2714d19f58160165e0d6b767e07f3195e375d3543037587712f34a4cc64b17 |
| MD5 | a7fca46290fe15077d4e8c9b6b7c06b4 |
| BLAKE2b-256 | 579dc0762e783b98ac499525891cdf753495e8e5f21458008e849dd168764890 |

File details

Details for the file pymars-0.10.0-cp37-cp37m-manylinux_2_5_x86_64.manylinux1_x86_64.whl.

File metadata

- Download URL: pymars-0.10.0-cp37-cp37m-manylinux_2_5_x86_64.manylinux1_x86_64.whl

- Upload date:

- Size: 7.8 MB

- Tags: CPython 3.7m, manylinux: glibc 2.5+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/4.0.2 CPython/3.10.6

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 7f338fe04998b352a4550bd419ed4c26888b6f31e08fe493fd72db1d8fa6eda6 |
| MD5 | b9100501a75380b2af295222e01279f7 |
| BLAKE2b-256 | ca637061aa870183e5c6638f080ad60cb70af6d917f7b0b4014a709564e0a858 |

File details

Details for the file pymars-0.10.0-cp37-cp37m-macosx_10_9_x86_64.whl.

File metadata

- Download URL: pymars-0.10.0-cp37-cp37m-macosx_10_9_x86_64.whl

- Upload date:

- Size: 3.5 MB

- Tags: CPython 3.7m, macOS 10.9+ x86-64

- Uploaded using Trusted Publishing? No

- Uploaded via: twine/1.15.0 pkginfo/1.8.3 requests/2.27.1 setuptools/41.2.0 requests-toolbelt/0.10.1 tqdm/4.64.1 CPython/2.7.18

File hashes

| Algorithm | Hash digest |
|---|---|
| SHA256 | 0ca3b970a70e9b10ece343eed1d1950e40d666e1be9c594096be2456b8cf32c5 |
| MD5 | 573aba7959a0318d6e66d7fd373286d2 |
| BLAKE2b-256 | 82a8d1a4d9435581e321ecb8a15540320ee849975b6b47ffe130717060120a93 |